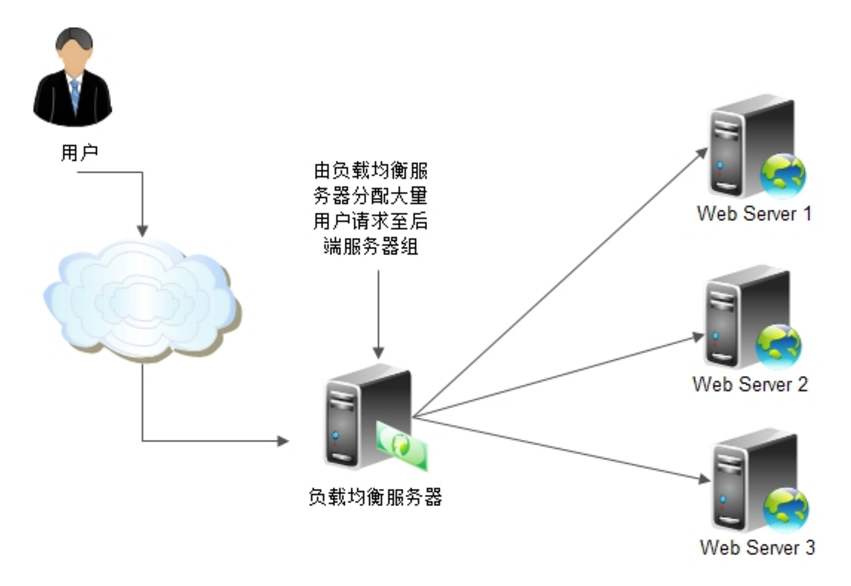

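This article walks through a multi-master Kubernetes deployment and the setup of a load balancer in front of it, in the hope that it proves useful in practice. Load balancing touches many components, although the underlying theory is modest and well covered in books; here we draw on the experience Yisu Cloud has accumulated in the industry to work through a concrete setup.

In a multi-node deployment several masters run at the same time, yet requests always end up on the same master. When that master faces many requests its processing slows down, while the other masters sit idle, which wastes resources. This is the point at which a load-balancing service is worth adding.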

[root@master01 kubeconfig]# scp -r /opt/kubernetes/ root@192.168.80.11:/opt //Copy the kubernetes directory directly to master02

The authenticity of host '192.168.80.11 (192.168.80.11)' can't be established.

ECDSA key fingerprint is SHA256:Ih0NpZxfLb+MOEFW8B+ZsQ5R8Il2Sx8dlNov632cFlo.

ECDSA key fingerprint is MD5:a9:ee:e5:cc:40:c7:9e:24:5b:c1:cd:c1:7b:31:42:0f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.80.11' (ECDSA) to the list of known hosts.

root@192.168.80.11's password:

token.csv 100% 84 61.4KB/s 00:00

kube-apiserver 100% 929 1.6MB/s 00:00

kube-scheduler 100% 94 183.2KB/s 00:00

kube-controller-manager 100% 483 969.2KB/s 00:00

kube-apiserver 100% 184MB 106.1MB/s 00:01

kubectl 100% 55MB 85.9MB/s 00:00

kube-controller-manager 100% 155MB 111.9MB/s 00:01

kube-scheduler 100% 55MB 115.8MB/s 00:00

ca-key.pem 100% 1675 2.7MB/s 00:00

ca.pem 100% 1359 2.6MB/s 00:00

server-key.pem 100% 1679 2.5MB/s 00:00

server.pem 100% 1643 2.7MB/s 00:00

[root@master01 kubeconfig]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.80.11:/usr/lib/systemd/system //Copy the unit files of the three master components

root@192.168.80.11's password:

kube-apiserver.service 100% 282 274.4KB/s 00:00

kube-controller-manager.service 100% 317 403.5KB/s 00:00

kube-scheduler.service 100% 281 379.4KB/s 00:00

[root@master01 kubeconfig]# scp -r /opt/etcd/ root@192.168.80.11:/opt/ //Important: master02 must have the etcd certificates, otherwise the apiserver service cannot start; copy the existing etcd certificates from master01 for master02 to use

root@192.168.80.11's password:

etcd 100% 509 275.7KB/s 00:00

etcd 100% 18MB 95.3MB/s 00:00

etcdctl 100% 15MB 75.1MB/s 00:00

ca-key.pem 100% 1679 941.1KB/s 00:00

ca.pem 100% 1265 1.6MB/s 00:00

server-key.pem 100% 1675 2.0MB/s 00:00

server.pem 100% 1338 1.5MB/s 00:00

[root@master02 ~]# systemctl stop firewalld.service //Stop the firewall

[root@master02 ~]# setenforce 0 //Switch SELinux to permissive mode

[root@master02 ~]# vim /opt/kubernetes/cfg/kube-apiserver //Edit the file

...

--etcd-servers=https://192.168.80.12:2379,https://192.168.80.13:2379,https://192.168.80.14:2379 \

--bind-address=192.168.80.11 \ //Change the IP address

--secure-port=6443 \

--advertise-address=192.168.80.11 \ //Change the IP address

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

...

:wq

[root@master02 ~]# systemctl start kube-apiserver.service //Start the apiserver service

[root@master02 ~]# systemctl enable kube-apiserver.service //Enable start on boot

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@master02 ~]# systemctl start kube-controller-manager.service //Start controller-manager

[root@master02 ~]# systemctl enable kube-controller-manager.service //Enable start on boot

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@master02 ~]# systemctl start kube-scheduler.service //Start scheduler

[root@master02 ~]# systemctl enable kube-scheduler.service //Enable start on boot

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@master02 ~]# vim /etc/profile //Add the environment variable

...

export PATH=$PATH:/opt/kubernetes/bin/

:wq

[root@master02 ~]# source /etc/profile //Reload the profile

[root@master02 ~]# kubectl get node //List the nodes

NAME STATUS ROLES AGE VERSION

192.168.80.13 Ready <none> 146m v1.12.3

192.168.80.14 Ready <none> 144m v1.12.3

//Multi-master configuration succeeded
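The two address changes made by hand in /opt/kubernetes/cfg/kube-apiserver could also be scripted. The following is only a sketch: the helper name `set_master_ip` is hypothetical, and the starting address 192.168.80.12 in the demonstration is an assumed value for master01, not something the transcript shows in that file.

```shell
# Hypothetical helper: rewrite --bind-address and --advertise-address
# in a kube-apiserver options file so they carry the given IP.
set_master_ip() {
    file="$1"
    ip="$2"
    tmp="$(mktemp)"
    sed -e "s#--bind-address=[0-9.]*#--bind-address=${ip}#" \
        -e "s#--advertise-address=[0-9.]*#--advertise-address=${ip}#" \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demonstration on a scratch file (192.168.80.12 is an assumed master01 value)
demo="$(mktemp)"
printf '%s\n' '--bind-address=192.168.80.12 \' '--advertise-address=192.168.80.12 \' > "$demo"
set_master_ip "$demo" 192.168.80.11
cat "$demo"
rm -f "$demo"
```

On master02 the call would be `set_master_ip /opt/kubernetes/cfg/kube-apiserver 192.168.80.11`, run before starting kube-apiserver.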

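Carry out the following steps on both lb01 and lb02. The prepared keepalived configuration file is provided as a download (extraction code: fkoh).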

[root@lb01 ~]# systemctl stop firewalld.service

[root@lb01 ~]# setenforce 0

[root@lb01 ~]# vim /etc/yum.repos.d/nginx.repo //Configure the yum repository for nginx

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

:wq

[root@lb01 yum.repos.d]# yum list //Reload the yum package list

Loaded plugins: fastestmirror

base | 3.6 kB 00:00:00

extras | 2.9 kB 00:00:00

...

[root@lb01 yum.repos.d]# yum install nginx -y //Install nginx

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.163.com

...

[root@lb01 yum.repos.d]# vim /etc/nginx/nginx.conf //Edit the nginx configuration file

...

events {

worker_connections 1024;

}

stream { //Add the layer-4 forwarding (stream) module

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.80.12:6443; //Mind the IP addresses

server 192.168.80.11:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

...

:wq
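If the list of masters changes, the stream block can be generated rather than typed. This is a minimal sketch, assuming the same upstream name (k8s-apiserver) and port (6443) as the configuration above; the function name `render_stream_block` is hypothetical and the log directives are left out for brevity.

```shell
# Hypothetical helper: print an nginx stream block that forwards
# port 6443 to each apiserver IP passed as an argument.
render_stream_block() {
    port=6443
    echo "stream {"
    echo "    upstream k8s-apiserver {"
    for ip in "$@"; do
        echo "        server ${ip}:${port};"
    done
    echo "    }"
    echo "    server {"
    echo "        listen ${port};"
    echo "        proxy_pass k8s-apiserver;"
    echo "    }"
    echo "}"
}

# The two masters used in this article
render_stream_block 192.168.80.12 192.168.80.11
```

After editing nginx.conf, `nginx -t` checks the syntax before the service is started.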

[root@lb01 yum.repos.d]# systemctl start nginx //Start nginx; the service can be tested from a browser

[root@lb01 yum.repos.d]# yum install keepalived -y //Install keepalived

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.163.com

...

[root@lb01 yum.repos.d]# mount.cifs //192.168.80.2/shares/K8S/k8s02 /mnt/ //Mount the host's shared directory

Password for root@//192.168.80.2/shares/K8S/k8s02:

[root@lb01 yum.repos.d]# cp /mnt/keepalived.conf /etc/keepalived/keepalived.conf //Copy the prepared keepalived configuration file over the original

cp: overwrite '/etc/keepalived/keepalived.conf'? yes

[root@lb01 yum.repos.d]# vim /etc/keepalived/keepalived.conf //Edit the configuration file

...

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" //Note the changed script location

}

vrrp_instance VI_1 {

state MASTER

interface ens33 //Mind the NIC name

virtual_router_id 51 //VRRP router ID; unique per instance

priority 100 //Priority; set 90 on the backup server

advert_int 1 //VRRP advertisement (heartbeat) interval; default 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.80.100/24 //Floating (virtual) IP address

}

track_script {

check_nginx

}

}

//Delete everything below this point

:wq

Modify the keepalived configuration file on lb02

[root@lb02 ~]# vim /etc/keepalived/keepalived.conf

...

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" //Note the changed script location

}

vrrp_instance VI_1 {

state BACKUP //Change the role to BACKUP

interface ens33 //NIC name

virtual_router_id 51 //VRRP router ID; unique per instance

priority 90 //Priority; the backup server uses 90

advert_int 1 //VRRP advertisement (heartbeat) interval; default 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.80.100/24 //Virtual IP address

}

track_script {

check_nginx

}

}

//Delete everything below this point

:wq

Carry out the following steps on both lb01 and lb02

[root@lb01 yum.repos.d]# vim /etc/nginx/check_nginx.sh //Edit the script that checks whether nginx is running

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

:wq

[root@lb01 yum.repos.d]# chmod +x /etc/nginx/check_nginx.sh //Make the script executable

[root@lb01 yum.repos.d]# systemctl start keepalived //Start the service

[root@lb01 ~]# ip a //Show address information

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:e9:04:ba brd ff:ff:ff:ff:ff:ff

inet 192.168.80.19/24 brd 192.168.80.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.80.100/24 scope global secondary ens33 //The virtual address is configured successfully

valid_lft forever preferred_lft forever

inet6 fe80::c3ab:d7ec:1adf:c5df/64 scope link

valid_lft forever preferred_lft forever

[root@lb02 ~]# ip a //Show address information

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:7d:c7:ab brd ff:ff:ff:ff:ff:ff

inet 192.168.80.20/24 brd 192.168.80.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::cd8b:b80c:8deb:251f/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::c3ab:d7ec:1adf:c5df/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

//No virtual IP address here; lb02 is the backup server

[root@lb01 ~]# systemctl stop nginx.service

[root@lb01 nginx]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:e9:04:ba brd ff:ff:ff:ff:ff:ff

inet 192.168.80.19/24 brd 192.168.80.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::c3ab:d7ec:1adf:c5df/64 scope link

valid_lft forever preferred_lft forever

[root@lb02 ~]# ip a //Check on lb02

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:7d:c7:ab brd ff:ff:ff:ff:ff:ff

inet 192.168.80.20/24 brd 192.168.80.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.80.100/24 scope global secondary ens33 //The floating address has moved to lb02

valid_lft forever preferred_lft forever

inet6 fe80::cd8b:b80c:8deb:251f/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::c3ab:d7ec:1adf:c5df/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

[root@lb01 nginx]# systemctl start nginx

[root@lb01 nginx]# systemctl start keepalived.service

[root@lb01 nginx]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:e9:04:ba brd ff:ff:ff:ff:ff:ff

inet 192.168.80.19/24 brd 192.168.80.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.80.100/24 scope global secondary ens33 //lb01 takes the floating address back because it has the higher priority

valid_lft forever preferred_lft forever

inet6 fe80::c3ab:d7ec:1adf:c5df/64 scope link

valid_lft forever preferred_lft forever
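Reading through `ip a` output by eye at each failover step gets tedious. As a rough sketch, a small filter can report whether the virtual address is currently bound; the function name `has_vip` is hypothetical, and it only looks for an `inet <address>/` entry in whatever text it receives on standard input.

```shell
# Hypothetical helper: succeed if the given IPv4 address appears as an
# "inet <addr>/..." entry in ip-a style text read from standard input.
has_vip() {
    vip="$1"
    grep -qF "inet ${vip}/"
}

# Demonstration with two lines taken from the lb01 output above
printf '%s\n' \
    'inet 192.168.80.19/24 brd 192.168.80.255 scope global ens33' \
    'inet 192.168.80.100/24 scope global secondary ens33' \
    | has_vip 192.168.80.100 && echo "VIP present"
```

On a load balancer the same check would read live data, for example `ip -4 addr show dev ens33 | has_vip 192.168.80.100`.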

[root@node01 ~]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

...

server: https://192.168.80.100:6443

...

:wq

[root@node01 ~]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

...

server: https://192.168.80.100:6443

...

:wq

[root@node01 ~]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

...

server: https://192.168.80.100:6443

...

:wq

[root@node01 ~]# systemctl restart kubelet.service //Restart the services

[root@node01 ~]# systemctl restart kube-proxy.service
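The same one-line change is made in three kubeconfig files on every node, so it lends itself to a small script. The sketch below assumes each file contains a single `server: https://<address>:6443` entry; the helper name `set_apiserver` is hypothetical, and the starting address in the demonstration is an assumed value rather than one shown in the transcript.

```shell
# Hypothetical helper: point the "server:" entry of a kubeconfig file
# at the load balancer's virtual IP, keeping port 6443.
set_apiserver() {
    file="$1"
    vip="$2"
    tmp="$(mktemp)"
    sed "s#server: https://[^:]*:6443#server: https://${vip}:6443#" \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demonstration on a scratch file (192.168.80.12 is an assumed old value)
demo="$(mktemp)"
printf '%s\n' '    server: https://192.168.80.12:6443' > "$demo"
set_apiserver "$demo" 192.168.80.100
cat "$demo"
rm -f "$demo"
```

On a node this would be called once per file under /opt/kubernetes/cfg (bootstrap.kubeconfig, kubelet.kubeconfig, kube-proxy.kubeconfig), followed by the two service restarts shown above.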

[root@lb01 nginx]# tail /var/log/nginx/k8s-access.log

192.168.80.13 192.168.80.12:6443 - [11/Feb/2020:15:23:52 +0800] 200 1118

192.168.80.13 192.168.80.11:6443 - [11/Feb/2020:15:23:52 +0800] 200 1119

192.168.80.14 192.168.80.12:6443 - [11/Feb/2020:15:26:01 +0800] 200 1119

192.168.80.14 192.168.80.12:6443 - [11/Feb/2020:15:26:01 +0800] 200 1120
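To see how requests are spread across the masters, the access log can be tallied by upstream address. This is a small sketch that relies on the log_format defined earlier, where the second field is `$upstream_addr`; the function name `count_by_upstream` is hypothetical.

```shell
# Hypothetical helper: count log lines per upstream address
# (second whitespace-separated field of the k8s-access.log format).
count_by_upstream() {
    awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' | sort
}

# Demonstration with the four log lines shown above
count_by_upstream <<'EOF'
192.168.80.13 192.168.80.12:6443 - [11/Feb/2020:15:23:52 +0800] 200 1118
192.168.80.13 192.168.80.11:6443 - [11/Feb/2020:15:23:52 +0800] 200 1119
192.168.80.14 192.168.80.12:6443 - [11/Feb/2020:15:26:01 +0800] 200 1119
192.168.80.14 192.168.80.12:6443 - [11/Feb/2020:15:26:01 +0800] 200 1120
EOF
# prints:
# 192.168.80.11:6443 1
# 192.168.80.12:6443 3
```

Against the live log the call would be `count_by_upstream < /var/log/nginx/k8s-access.log`.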

[root@master01 ~]# kubectl run nginx --image=nginx //Create a pod

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

[root@master01 ~]# kubectl get pods //Show pod information

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-sdcpl 1/1 Running 0 33m //Created successfully

[root@master01 ~]# kubectl logs nginx-dbddb74b8-sdcpl //View the logs

Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-sdcpl) //An error is reported

[root@master01 ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous //Resolve the log error by binding cluster-admin to system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

[root@master01 ~]# kubectl logs nginx-dbddb74b8-sdcpl //View the logs again

[root@master01 ~]# //Nothing has accessed the pod yet, so the log is empty

Access the nginx page from a node

[root@master01 ~]# kubectl get pods -o wide //First check the pod network information on master01

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-sdcpl 1/1 Running 0 38m 172.17.33.2 192.168.80.14 <none>

[root@node01 ~]# curl 172.17.33.2 //Run on a node; the pod can be reached directly

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h3>Welcome to nginx!</h3>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

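</html>

[root@master01 ~]# kubectl logs nginx-dbddb74b8-sdcpl

172.17.12.0 - - [12/Feb/2020:06:45:54 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-" //The access record now appears

That concludes the introduction to multi-node deployment and load-balancer setup. For anything further, see the Yisu Cloud industry news section or ask our technical engineers, who have more than ten years of experience in the industry.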
