1.基础环境有k8s集群

[root@kubemaster01 prometheus]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.249.51 Ready <none> 63d v1.12.3

192.168.249.52 Ready <none> 63d v1.12.3

192.168.249.53 Ready <none> 63d v1.12.3

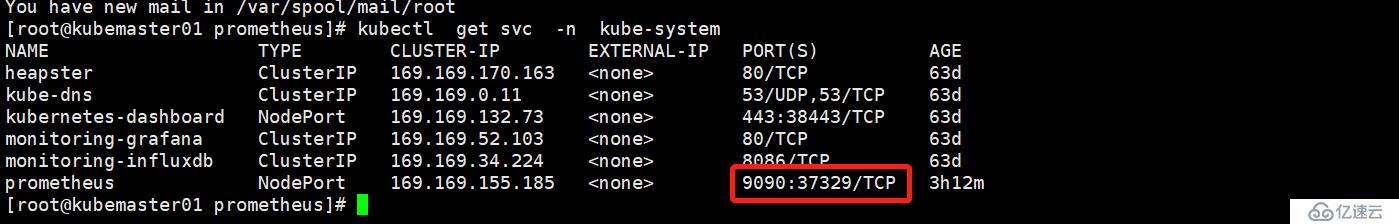

You have new mail in /var/spool/mail/root

[root@kubemaster01 prometheus]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

[root@kubemaster01 prometheus]#2.下载prometheus yaml

for file in prometheus-configmap.yaml prometheus-rbac.yaml prometheus-service.yaml prometheus-statefulset.yaml ;do wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/prometheus/$file;done

3.设置动态storageclass

3.1.kubectl apply -f rbac.yml

kind: ServiceAccount apiVersion: v1 metadata: name: nfs-client-provisioner --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: nfs-client-provisioner-runner rules: - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "create", "delete"] - apiGroups: [""] resources: ["persistentvolumeclaims"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["storageclasses"] verbs: ["get", "list", "watch"] - apiGroups: [""] resources: ["events"] verbs: ["create", "update", "patch"] --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: run-nfs-client-provisioner subjects: - kind: ServiceAccount name: nfs-client-provisioner namespace: default roleRef: kind: ClusterRole name: nfs-client-provisioner-runner apiGroup: rbac.authorization.k8s.io --- kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: name: leader-locking-nfs-client-provisioner rules: - apiGroups: [""] resources: ["endpoints"] verbs: ["get", "list", "watch", "create", "update", "patch"] --- kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: leader-locking-nfs-client-provisioner subjects: - kind: ServiceAccount name: nfs-client-provisioner namespace: default roleRef: kind: Role name: leader-locking-nfs-client-provisioner apiGroup: rbac.authorization.k8s.io

3.2 设置存储deployment.yaml

apiVersion: v1 kind: ServiceAccount metadata: name: nfs-client-provisioner --- kind: Deployment apiVersion: apps/v1 metadata: name: nfs-client-provisioner spec: replicas: 1 selector: matchLabels: app: nfs-client-provisioner strategy: type: Recreate template: metadata: labels: app: nfs-client-provisioner spec: serviceAccountName: nfs-client-provisioner containers: - name: nfs-client-provisioner image: quay.io/external_storage/nfs-client-provisioner:latest volumeMounts: - name: nfs-client-root mountPath: /persistentvolumes env: - name: PROVISIONER_NAME value: fuseim.pri/ifs - name: NFS_SERVER value: 192.168.249.54 - name: NFS_PATH value: /data/k8s/prometheus volumes: - name: nfs-client-root nfs: server: 192.168.249.54 path: /data/k8s/prometheus

3.4 kubectl apply -f class.yaml

apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: name: managed-nfs-storage provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME' parameters: archiveOnDelete: "false"

3.5 备注说明 nfs 是提前部署好的(ip:192.168.249.54)

[root@es prometheus]# cat /etc/exports /data/k8s/prometheus 192.168.249.0/24(rw,sync,no_root_squash) [root@es prometheus]#

4.修改prometheus的存储地址(prometheus-statefulset.yaml)

4.把 prometheus的svc 发布改为nodeport

5.部署

kubectl apply -f prometheus-rbac.yaml prometheus-configmap.yaml prometheus-statefulset.yaml prometheus-service.yaml

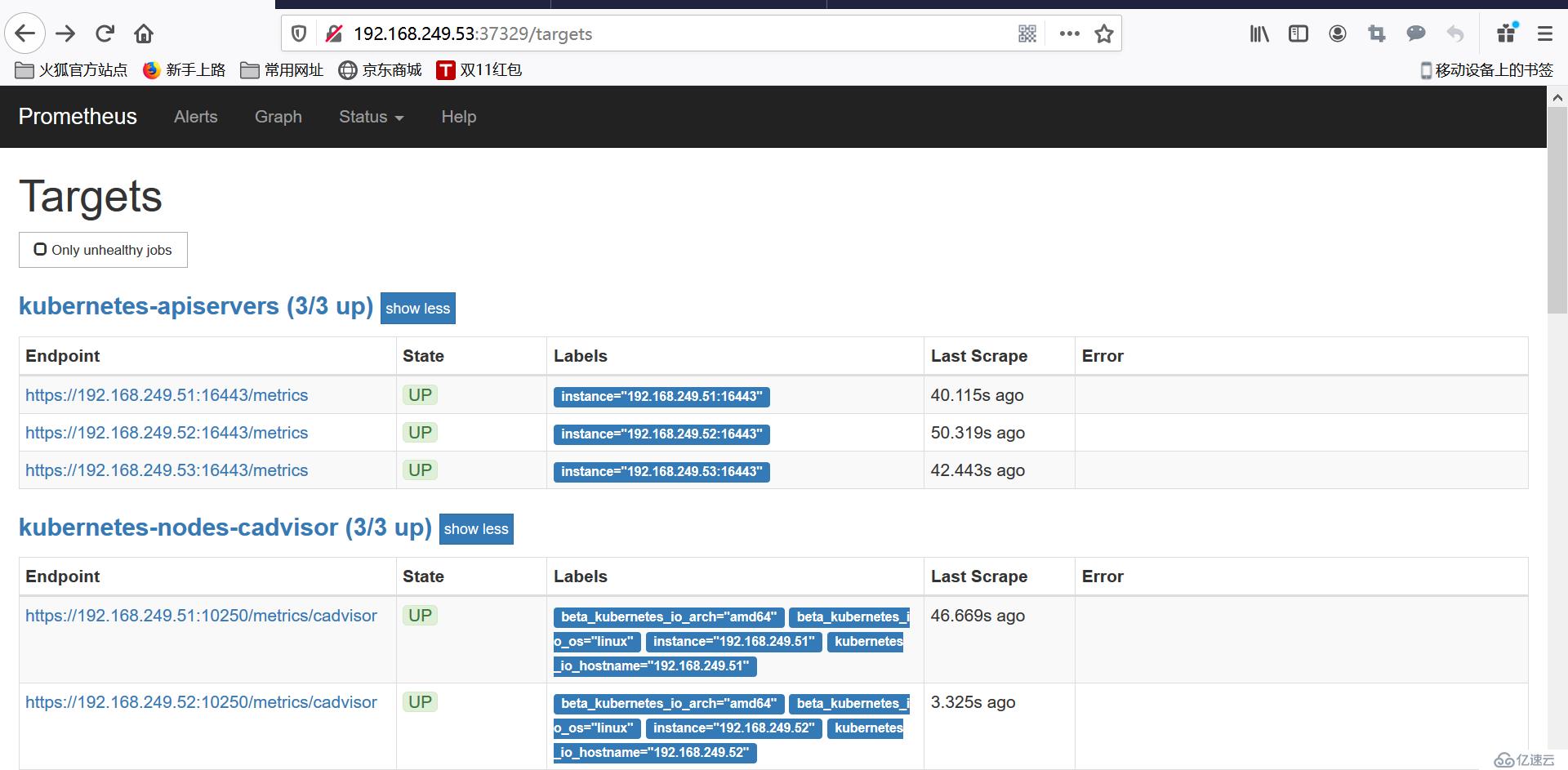

6. 访问

7.

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。