I. Environment

cat /etc/hosts

192.168.10.11 node1 #master1

192.168.10.14 node4 #master2

192.168.10.15 node5 #master3

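Note: because this was done on my own virtual machines, only master nodes were deployed. The steps that worker nodes need are written out as well, so you can simply follow them.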

II. Environment configuration (run on masters and workers)

1. Configure the Aliyun yum mirror (optional)

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

rm -rf /var/cache/yum && yum makecache

2. Install dependencies

yum install -y epel-release conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

3. Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

4. Disable SELinux

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

5. Disable swap

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
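The `sed` command above prefixes every line that contains ` swap `, so running it a second time stacks another `#` onto lines that are already commented. A minimal sketch of a re-runnable variant follows. The function name `comment_swap_entries` is hypothetical, and the sketch assumes GNU sed as shipped with CentOS 7.

```shell
# Comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are left alone, so re-running is safe.
comment_swap_entries() {
    # $1: path to the fstab file (for example /etc/fstab)
    sed -i '/^[[:space:]]*#/!s/^\(.*[[:space:]]swap[[:space:]].*\)$/#\1/' "$1"
}

# Usage (as root):
#   swapoff -a && comment_swap_entries /etc/fstab
```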

6. Load kernel modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

modprobe -- br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
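It can help to confirm that the modules were actually loaded before moving on. The sketch below is one way to do that. `missing_modules` is a hypothetical helper that reads `lsmod` output on stdin and prints every expected module it cannot find.

```shell
# Print each expected module that is absent from lsmod-style input on stdin.
# The exit status is 0 only when nothing is missing.
missing_modules() (
    loaded=$(awk 'NR > 1 { print $1 }')    # first column, skipping the header row
    status=0
    for mod in "$@"; do
        if ! printf '%s\n' "$loaded" | grep -qxF "$mod"; then
            echo "$mod"
            status=1
        fi
    done
    exit "$status"
)

# Usage:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter
```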

7. Set kernel parameters

cat << EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

sysctl -p /etc/sysctl.d/k8s.conf
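`sysctl -p` reports an error for keys it cannot set, for example the `net.bridge.*` keys when `br_netfilter` is not loaded, but that output is easy to miss. As a rough sketch, the hypothetical helper `check_sysctl_conf` below compares each `key=value` line of the file with the live value under `/proc/sys`. It assumes the file has no spaces around `=`, as in the file written above.

```shell
# Compare each key=value line in a sysctl config file with the live value
# under a proc root (default /proc/sys). Prints mismatches; non-zero exit if any.
check_sysctl_conf() (
    conf=$1
    root=${2:-/proc/sys}
    status=0
    while IFS='=' read -r key want; do
        case $key in ''|'#'*) continue ;; esac        # skip blank lines and comments
        path="$root/$(printf '%s' "$key" | tr . /)"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
        if [ ! -r "$path" ]; then
            echo "$key: not available"
            status=1
        elif [ "$(cat "$path")" != "$want" ]; then
            echo "$key: expected $want, found $(cat "$path")"
            status=1
        fi
    done < "$conf"
    exit "$status"
)

# Usage:
#   check_sysctl_conf /etc/sysctl.d/k8s.conf
```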

8. Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

yum install -y docker-ce-18.09.6

systemctl start docker

systemctl enable docker

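After installation, add a post-start command to the Docker unit; otherwise Docker sets the default policy of the iptables FORWARD chain to DROP.

In addition, kubeadm recommends systemd as the cgroup driver, so daemon.json must be modified as well.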

sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

tee /etc/docker/daemon.json <<-'EOF'

{ "exec-opts": ["native.cgroupdriver=systemd"] }

EOF

systemctl daemon-reload

systemctl restart docker

9. Install kubeadm and kubelet

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache fast

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet

vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Set the kubelet cgroup driver

KUBELET_KUBECONFIG_ARGS=--cgroup-driver=systemd

systemctl daemon-reload

systemctl restart kubelet.service

10. Pull the required images

kubeadm config images list | sed -e 's/^/docker pull /g' -e 's#k8s.gcr.io#registry.cn-hangzhou.aliyuncs.com/google_containers#g' | sh -x

docker images | grep registry.cn-hangzhou.aliyuncs.com/google_containers | awk '{print "docker tag",$1":"$2,$1":"$2}' | sed -e 's/registry.cn-hangzhou.aliyuncs.com\/google_containers/k8s.gcr.io/2' | sh -x

docker images | grep registry.cn-hangzhou.aliyuncs.com/google_containers | awk '{print "docker rmi """$1""":"""$2}' | sh -x
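The three pipelines above pull each image from the Aliyun mirror, retag it under `k8s.gcr.io`, and then delete the mirror tag. The sketch below expresses the same plan as a dry run that only prints the commands, so they can be reviewed before execution. `mirror_commands` and `MIRROR` are hypothetical names, and the commands are grouped per image rather than per step.

```shell
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Read k8s.gcr.io image names on stdin and print the pull / tag / rmi
# commands for each one, without executing anything.
mirror_commands() {
    while read -r image; do
        case $image in k8s.gcr.io/*) ;; *) continue ;; esac   # ignore anything else
        mirrored="$MIRROR/${image#k8s.gcr.io/}"
        echo "docker pull $mirrored"
        echo "docker tag $mirrored $image"
        echo "docker rmi $mirrored"
    done
}

# Usage:
#   kubeadm config images list | mirror_commands            # review the plan
#   kubeadm config images list | mirror_commands | sh -x    # execute it
```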

III. Install keepalived and haproxy (run on masters)

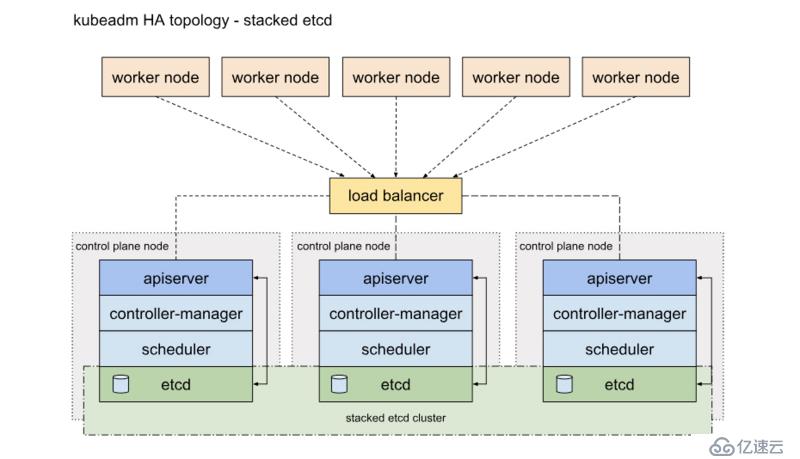

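High availability in Kubernetes mainly means high availability of the control plane: in short, there are multiple sets of master components and etcd members, and worker nodes reach the masters through a load balancer.

This deployment co-locates etcd with the master components:

Stacked etcd topology:

Requires fewer machines

Simple to deploy and easy to manage

Easy to scale horizontally

Higher risk: if one host goes down, both a master and an etcd member are lost, so cluster redundancy takes a larger hit.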
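The redundancy trade-off can be made concrete with etcd's majority rule: a cluster of N members needs N/2+1 of them (integer division) to stay writable. The helper names below are hypothetical; the arithmetic is the standard Raft quorum calculation.

```shell
# Majority arithmetic for an etcd cluster of N members.
etcd_quorum()    { echo $(( $1 / 2 + 1 )); }     # members needed to accept writes
etcd_tolerance() { echo $(( ($1 - 1) / 2 )); }   # members that may fail

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_tolerance "$n")"
done
```

With the three stacked masters used here, the quorum is 2, so the cluster survives the loss of exactly one host. A fourth member would not raise that number.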

3.1 Install on the masters

yum install -y keepalived haproxy

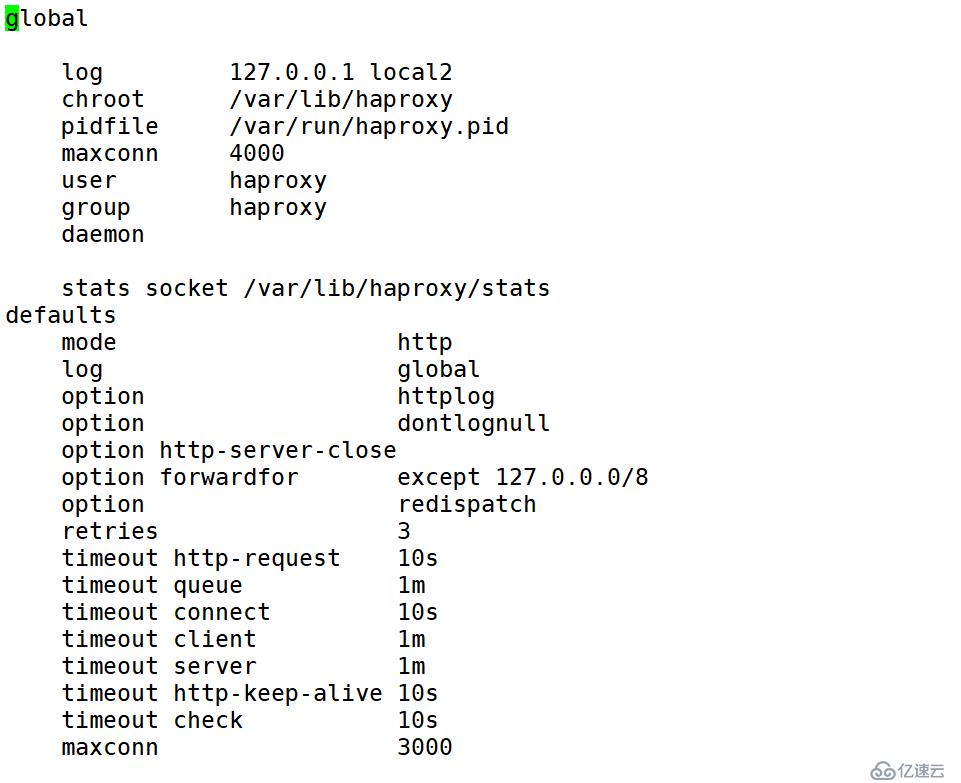

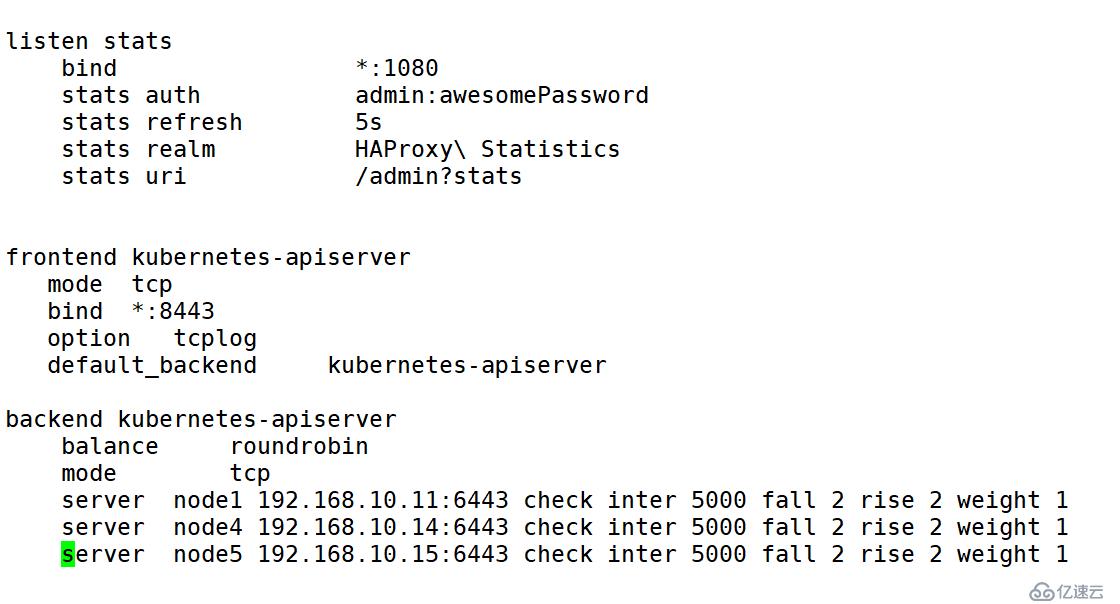

3.2 Edit the haproxy configuration file (identical on all three nodes):

global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

listen stats
    bind *:1080
    stats auth admin:awesomePassword
    stats refresh 5s
    stats realm HAProxy\ Statistics
    stats uri /admin?stats

frontend kubernetes-apiserver
    mode tcp
    bind *:8443
    option tcplog
    default_backend kubernetes-apiserver

backend kubernetes-apiserver
    balance roundrobin
    mode tcp
    server node1 192.168.10.11:6443 check inter 5000 fall 2 rise 2 weight 1
    server node4 192.168.10.14:6443 check inter 5000 fall 2 rise 2 weight 1
    server node5 192.168.10.15:6443 check inter 5000 fall 2 rise 2 weight 1
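Before starting haproxy, it is worth checking the file for syntax errors and confirming which API server addresses it will balance across. The sketch below assumes the configuration was saved to `/etc/haproxy/haproxy.cfg` with one directive per line. `list_backends` is a hypothetical helper. Port 6443 does not answer until `kubeadm init` has run, so haproxy's health checks will mark the backends as down until then.

```shell
# Print the address:port of every "server" line in an haproxy config file.
list_backends() {
    # $1: path to the haproxy configuration file
    awk '$1 == "server" { print $3 }' "$1"
}

# Usage, once the file is in place:
#   haproxy -c -f /etc/haproxy/haproxy.cfg    # syntax check only, starts nothing
#   list_backends /etc/haproxy/haproxy.cfg
```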

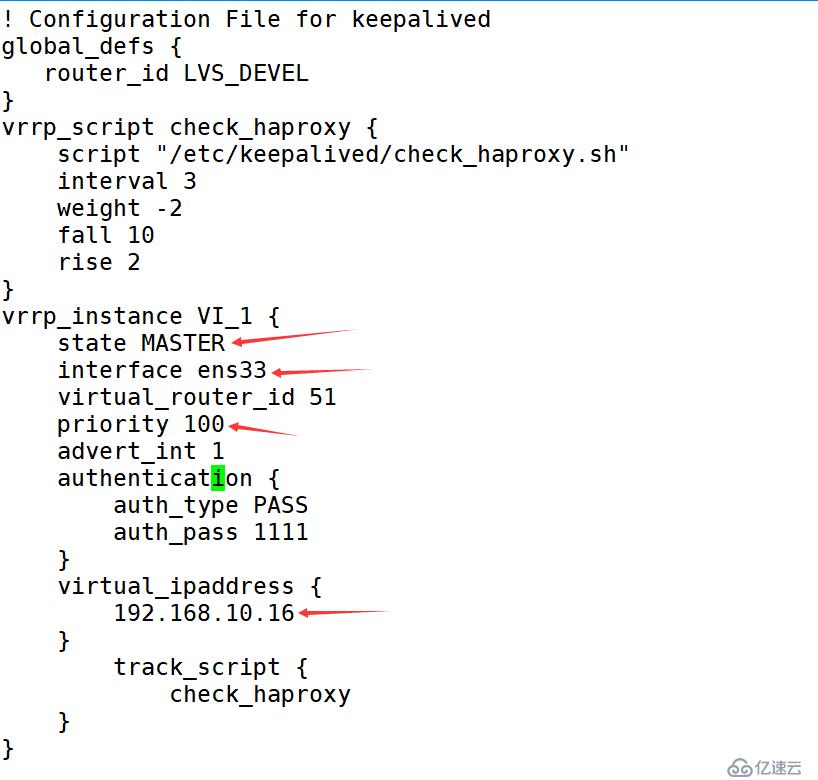

3.3 Edit the keepalived configuration file

Node 1:

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens33 # name of the host's physical network interface

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.16 # the VIP must be in the same subnet as the node's own IP

}

track_script {

check_haproxy

}

}

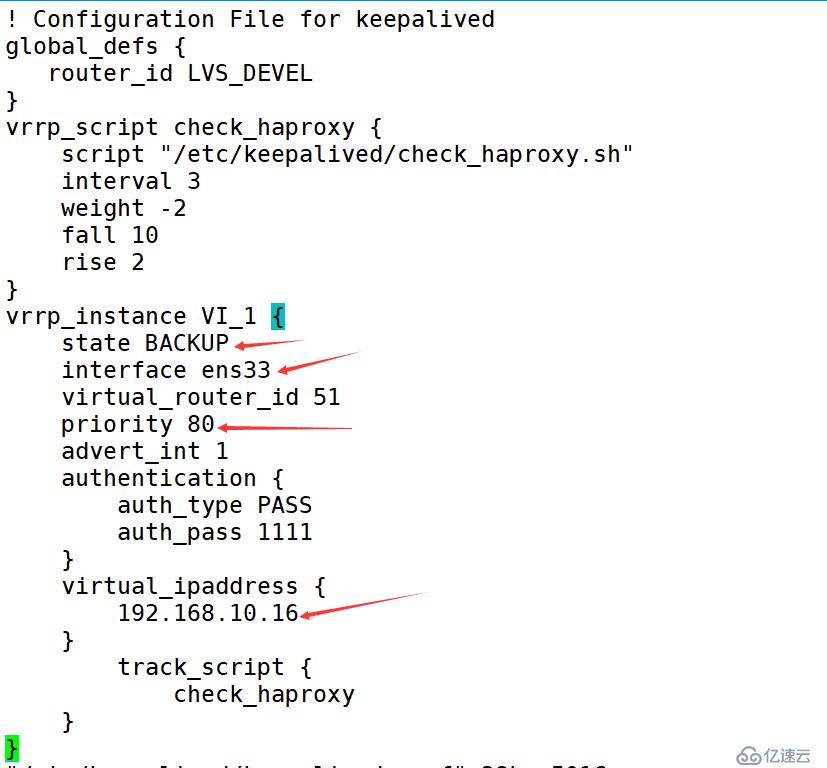

Node 2:

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.16

}

track_script {

check_haproxy

}

}

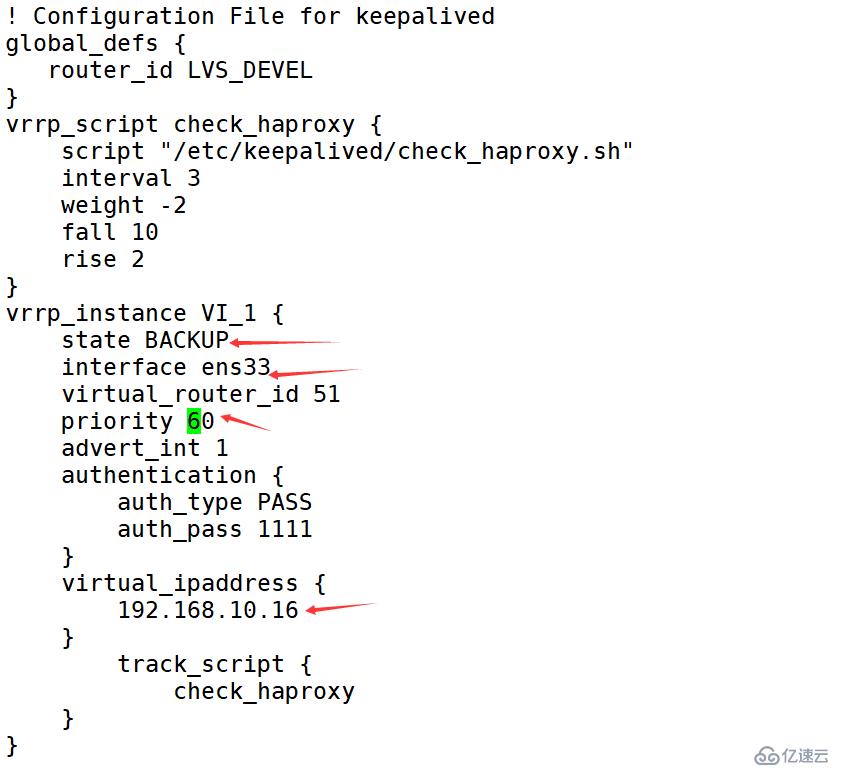

Node 3:

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.16

}

track_script {

check_haproxy

}

}
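The three files above differ only in `state` (MASTER on node 1, BACKUP elsewhere) and `priority` (100, 80, 60). As a sketch, a single template function can generate all three and keep them from drifting apart. `render_keepalived` is a hypothetical name; the interface and VIP default to the values used in this article.

```shell
# Print a keepalived.conf for one node. Only state and priority vary per node.
render_keepalived() {
    # $1: MASTER or BACKUP   $2: priority
    # $3: interface (default ens33)   $4: virtual IP (default 192.168.10.16)
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state $1
    interface ${3:-ens33}
    virtual_router_id 51
    priority $2
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${4:-192.168.10.16}
    }
    track_script {
        check_haproxy
    }
}
EOF
}

# Usage:
#   render_keepalived MASTER 100 > /etc/keepalived/keepalived.conf   # node 1
#   render_keepalived BACKUP 80  > /etc/keepalived/keepalived.conf   # node 2
#   render_keepalived BACKUP 60  > /etc/keepalived/keepalived.conf   # node 3
```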

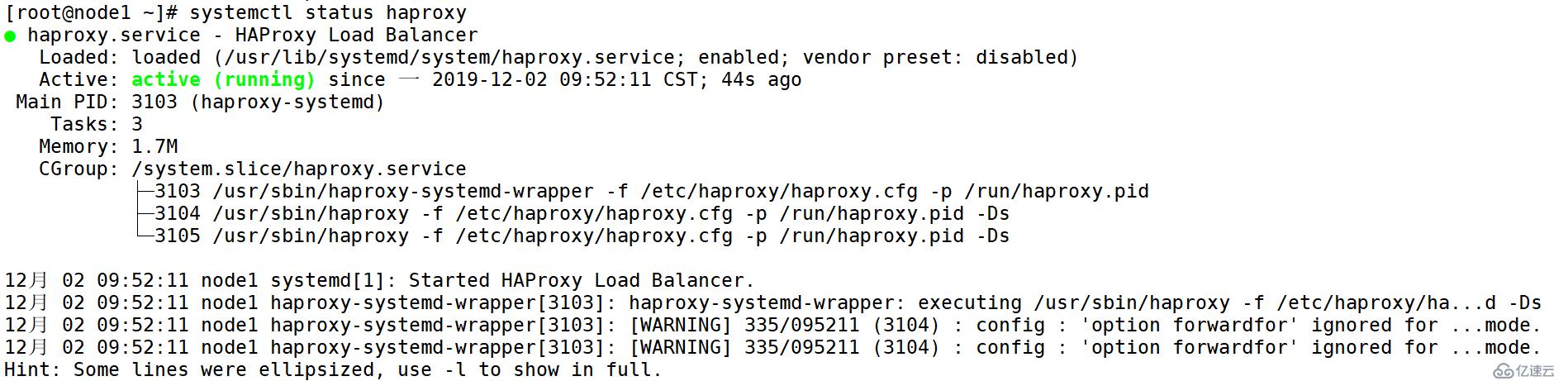

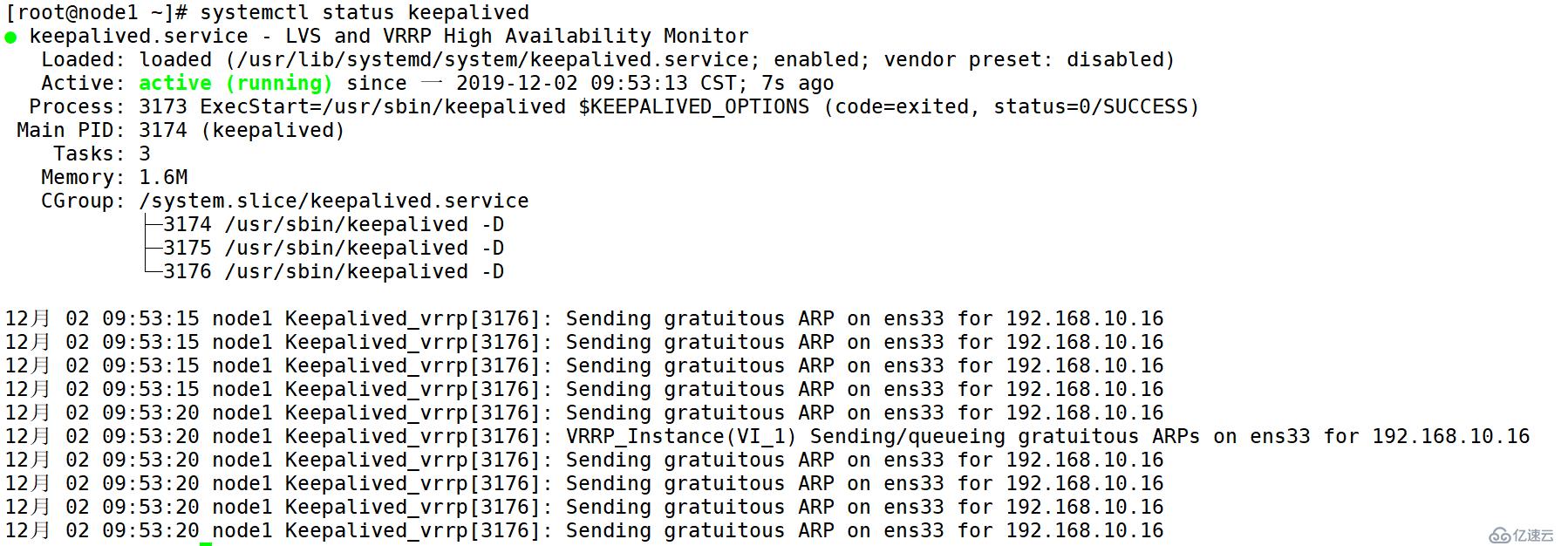

Run on all three masters:

cat > /etc/keepalived/check_haproxy.sh <<EOF
#!/bin/bash
systemctl status haproxy > /dev/null
if [[ \$? != 0 ]];then
    echo "haproxy is down,close the keepalived"
    systemctl stop keepalived
fi
EOF

chmod +x /etc/keepalived/check_haproxy.sh

systemctl enable keepalived && systemctl start keepalived

systemctl enable haproxy && systemctl start haproxy

systemctl status keepalived && systemctl status haproxy

# If keepalived is not in the running state, run the following again

systemctl restart keepalived

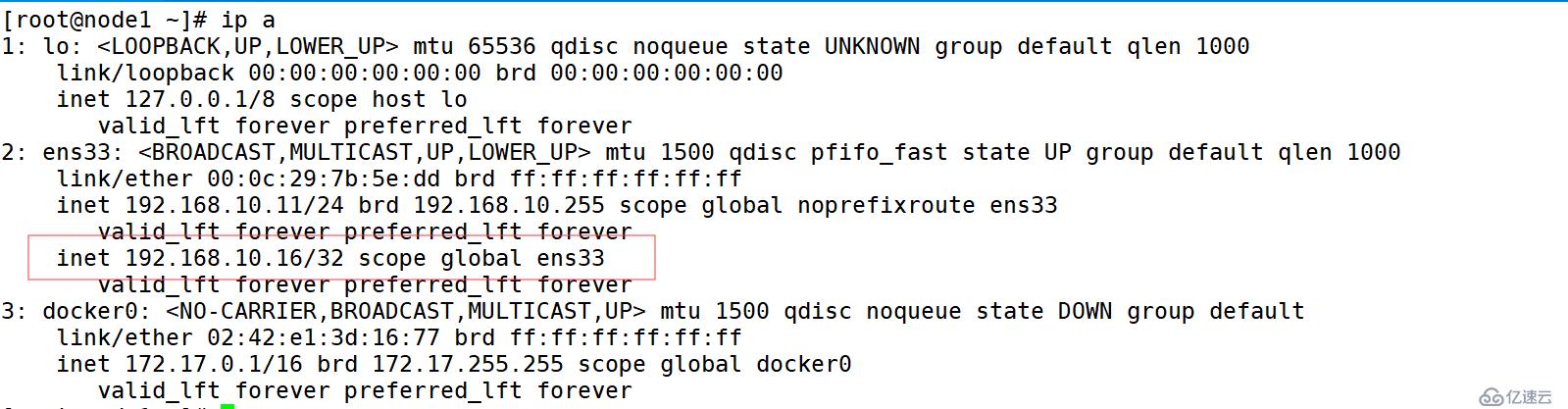

The result can then be seen on the master node:

At this point keepalived and haproxy are ready.
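One quick way to see which master currently holds the virtual IP is to look for it in the interface's address list. A minimal sketch, assuming the `ens33` interface and the 192.168.10.16 VIP from the configuration above; `has_vip` is a hypothetical helper that reads `ip -4 -o addr show` output on stdin.

```shell
# Succeed if the given IPv4 address appears in `ip -4 -o addr show` output on stdin.
has_vip() {
    # $1: virtual IP to look for; field 4 of each line is "address/prefix"
    awk -v vip="$1" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}

# Usage:
#   ip -4 -o addr show dev ens33 | has_vip 192.168.10.16 && echo "VIP is on this node"
```

Only the node in the MASTER state should report the VIP. After a failover, it should show up on one of the BACKUP nodes instead.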

IV. Initialize the cluster

kubeadm init \

--kubernetes-version=v1.16.3 \

--pod-network-cidr=10.244.0.0/16 \

--apiserver-advertise-address=192.168.10.11 \

--control-plane-endpoint 192.168.10.16:8443 --upload-certs

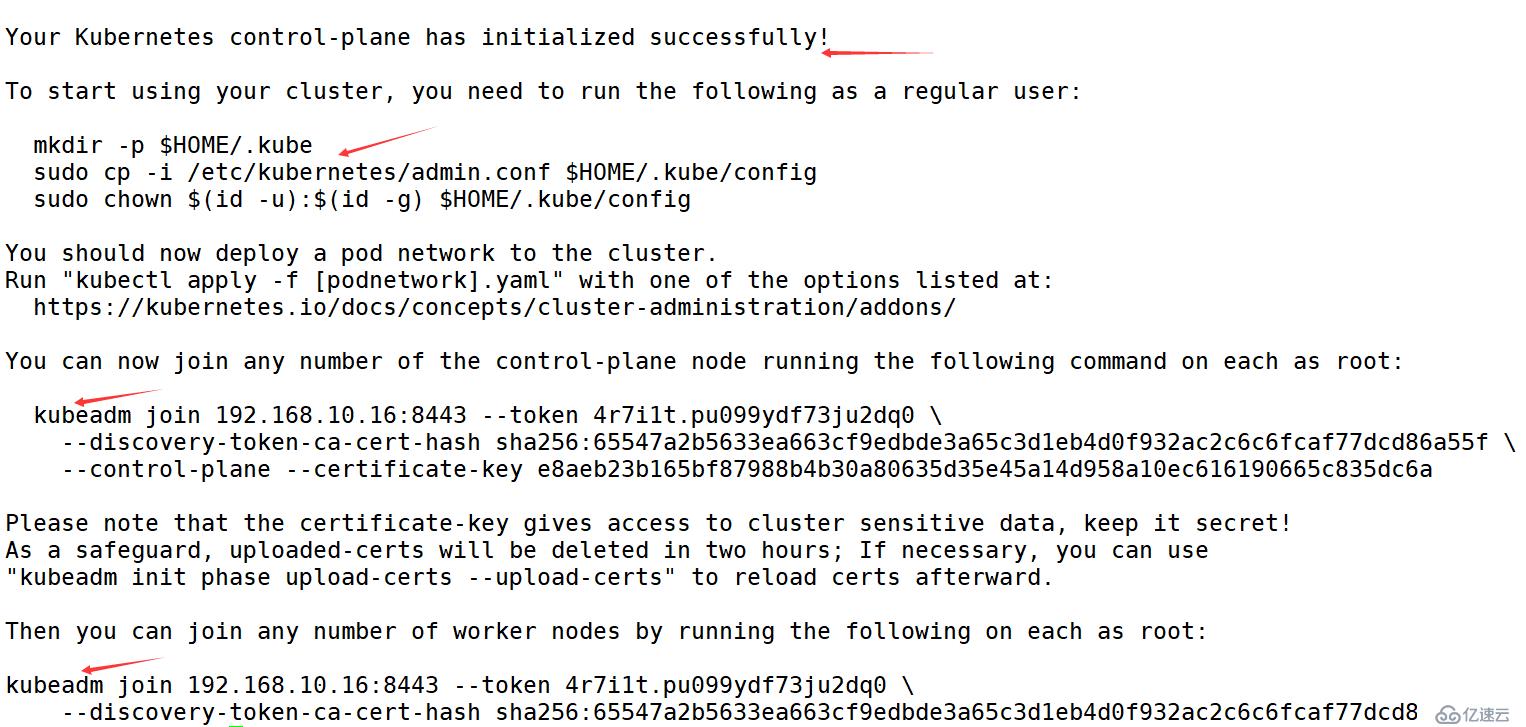

Output like this indicates that initialization succeeded.

1. Configure kubectl for the users who need it

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

2. Install the Pod network

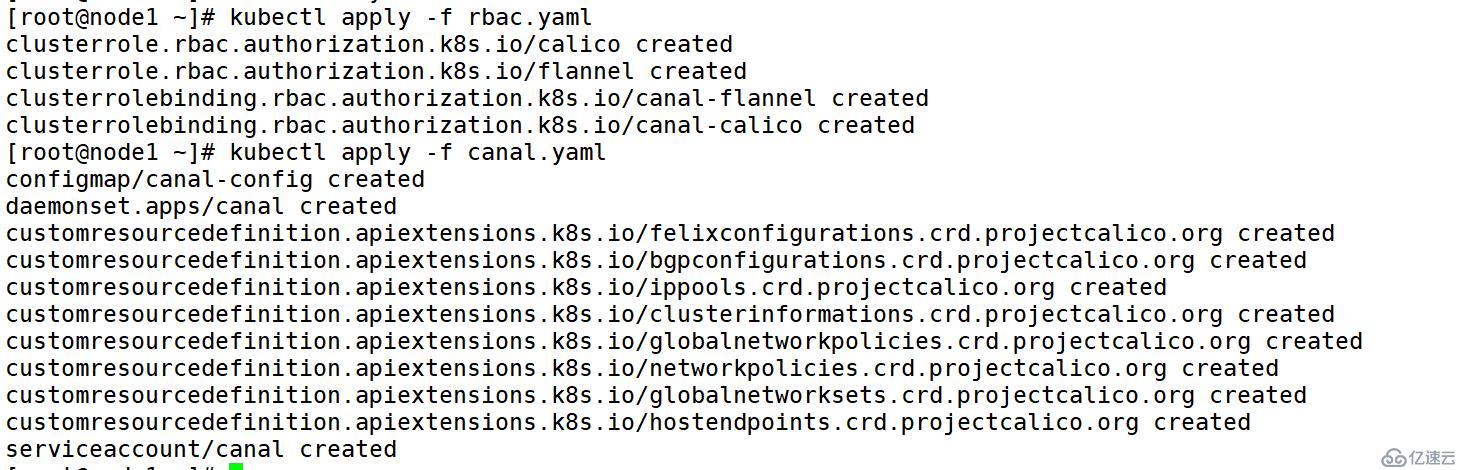

Install the canal network plugin

wget https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/canal/rbac.yaml

wget https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/canal/canal.yaml

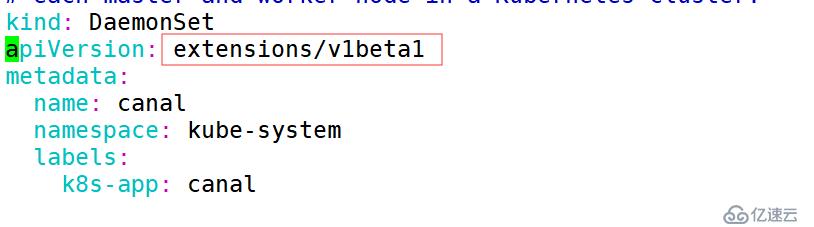

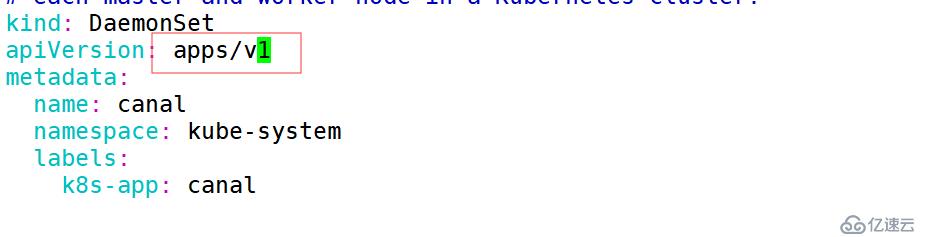

The canal.yaml file needs to be modified here:

Change it to:

3. Then deploy:

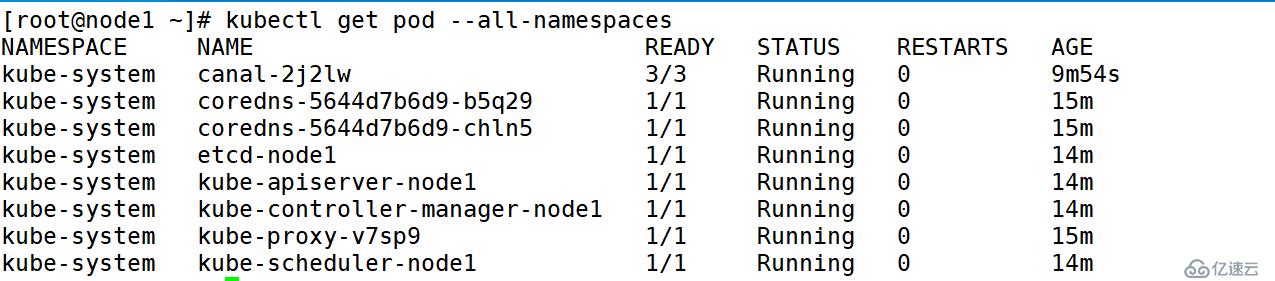

If every Pod is in the Running state, the deployment succeeded.

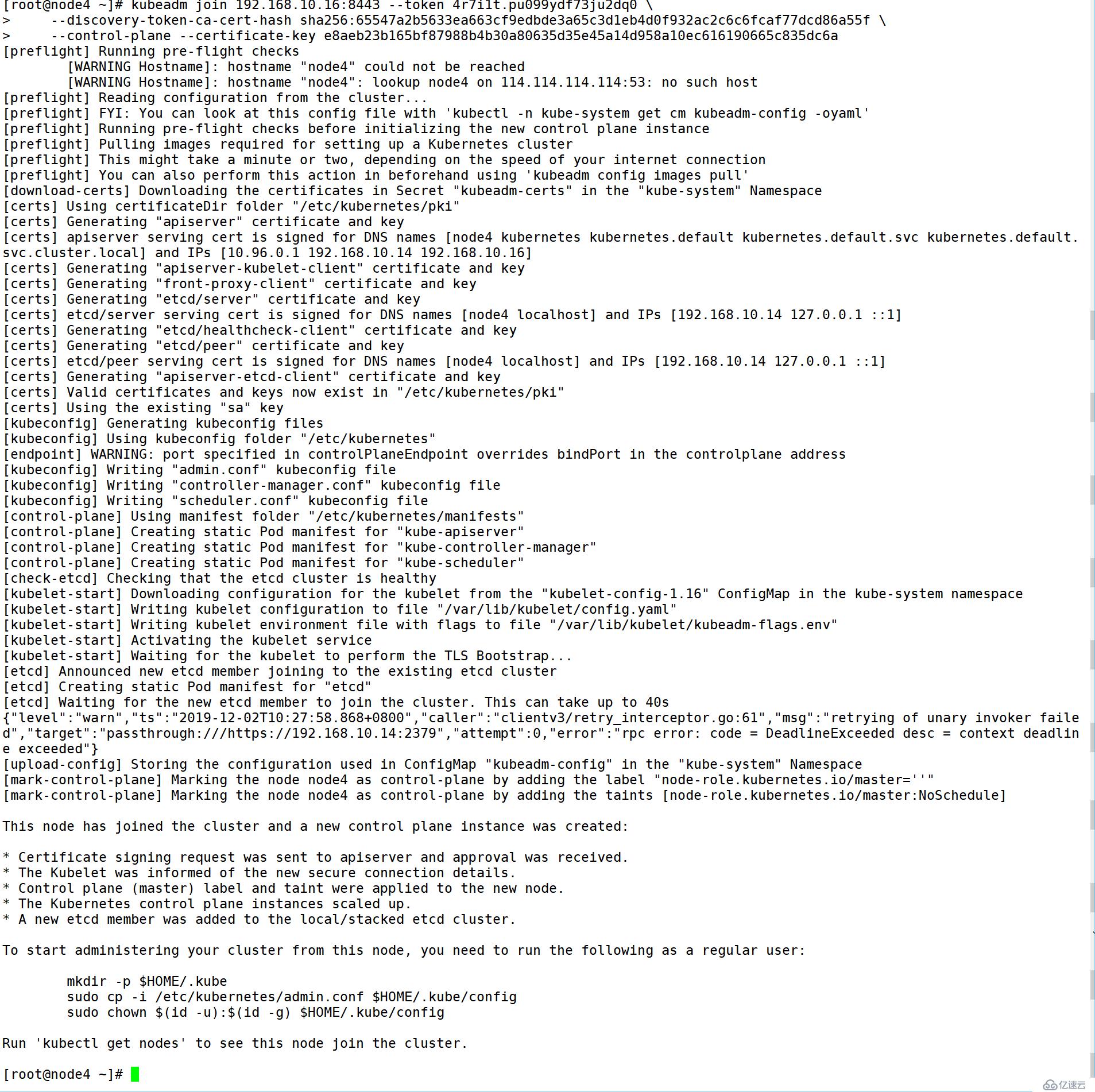

4. Join the other master nodes

kubeadm join 192.168.10.16:8443 --token 4r7i1t.pu099ydf73ju2dq0 \

--discovery-token-ca-cert-hash sha256:65547a2b5633ea663cf9edbde3a65c3d1eb4d0f932ac2c6c6fcaf77dcd86a55f \

--control-plane --certificate-key e8aeb23b165bf87988b4b30a80635d35e45a14d958a10ec616190665c835dc6a

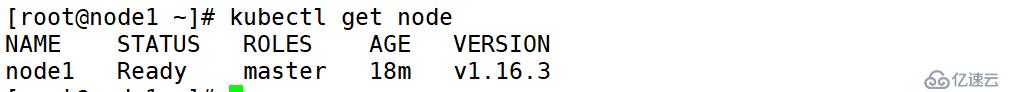

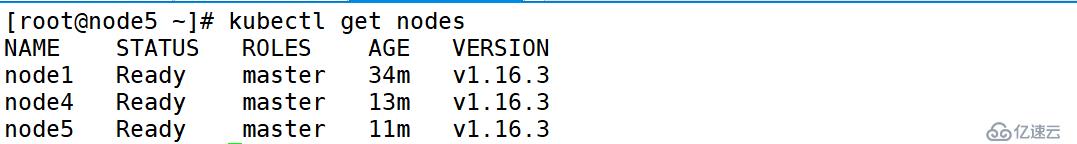

Run on any node:

kubectl get node

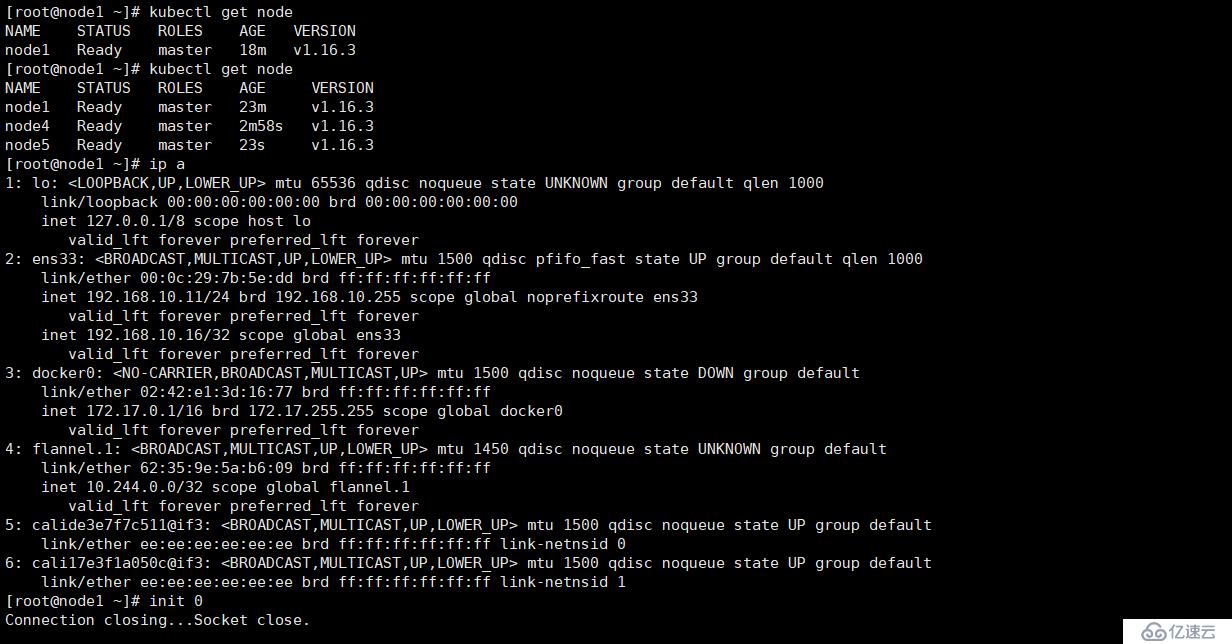

5. Test master high availability:

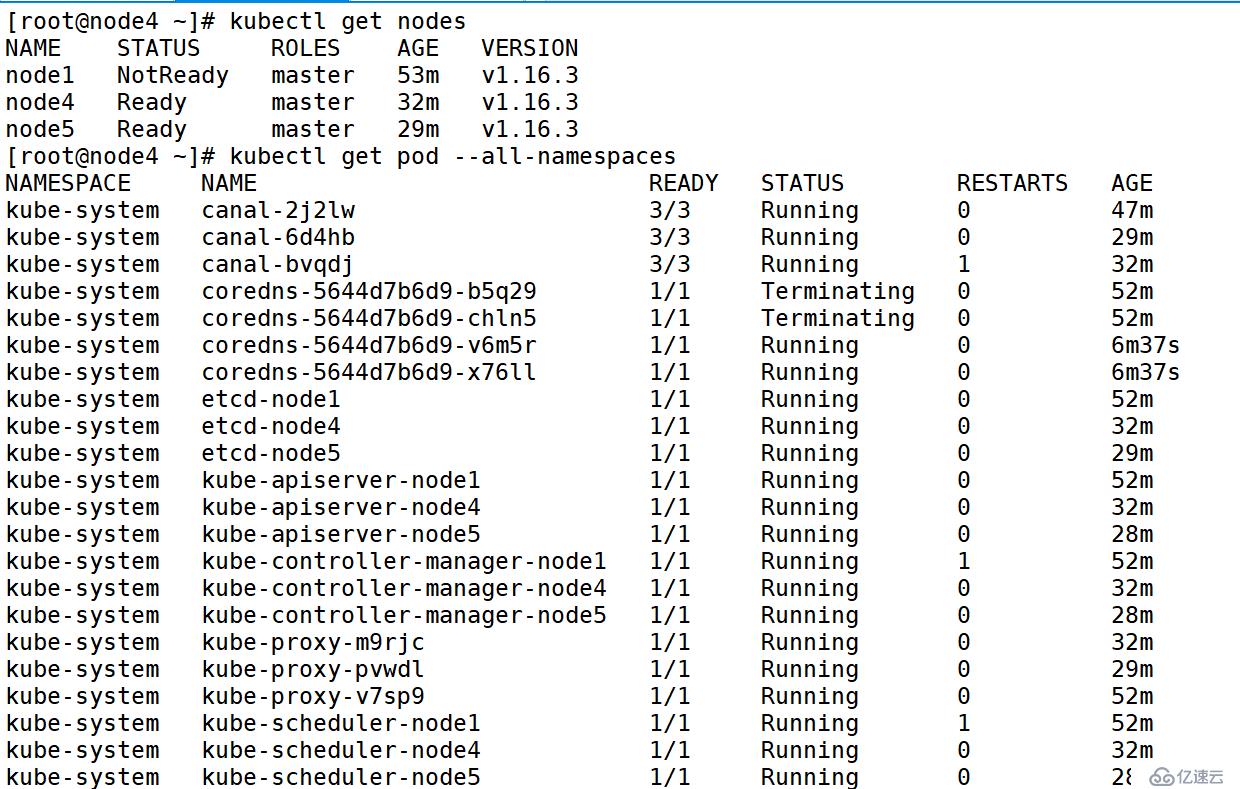

Shut down master1.

Then check from the other nodes.
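After master1 goes down, the VIP takes a moment to move to another node, so the API may not answer right away. The sketch below polls until a command succeeds. `retry` is a hypothetical helper, and the `curl` call in the usage comment assumes the API server allows anonymous access to `/healthz`, which is the kubeadm default as far as I know.

```shell
# Run a command until it succeeds, up to a maximum number of attempts.
retry() (
    # $1: attempts   $2: delay in seconds between attempts   rest: command to run
    attempts=$1; delay=$2; shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && exit 0
        i=$((i + 1))
        sleep "$delay"
    done
    exit 1
)

# Usage (from a surviving master, after shutting down master1):
#   retry 30 2 curl -kfs -o /dev/null https://192.168.10.16:8443/healthz
#   kubectl get node
```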

V. Join worker nodes

kubeadm join 192.168.10.16:8443 --token 4r7i1t.pu099ydf73ju2dq0 \

--discovery-token-ca-cert-hash sha256:65547a2b5633ea663cf9edbde3a65c3d1eb4d0f932ac2c6c6fcaf77dcd86a55f
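The token and hash in the join commands come from the `kubeadm init` output. Bootstrap tokens expire after 24 hours by default, so a node added later needs fresh values. As a sketch, the CA hash can be recomputed on any master with the `openssl` pipeline from the kubeadm documentation. `ca_cert_hash` is a hypothetical wrapper name, and the pipeline assumes an RSA CA key, which is the kubeadm default.

```shell
# Compute the value for --discovery-token-ca-cert-hash from a CA certificate.
ca_cert_hash() {
    # $1: path to the CA certificate (on a master: /etc/kubernetes/pki/ca.crt)
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on a master:
#   kubeadm token create --print-join-command           # new token plus full worker join command
#   kubeadm init phase upload-certs --upload-certs      # new certificate key for joining masters
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```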
