Why a Kubernetes PV and PVC fail to bind

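While deploying a stateful application with a StatefulSet, the Pods stayed in the Pending state. Before digging in, a short introduction to StatefulSet: in Kubernetes, a Deployment is normally used to manage stateless applications, while a StatefulSet manages stateful ones such as Redis, MySQL, and ZooKeeper. These distributed applications have a strict order for starting and stopping.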

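1. StatefulSet

Headless Service: has no clusterIP; it acts as the resource identifier and is used to generate resolvable DNS records

StatefulSet: manages the Pod resources

volumeClaimTemplates: provides the storage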

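2. Deploying a StatefulSet

NFS is used as the network storage. The steps are:

Set up NFS

Configure the shared storage directories

Create the PVs

Write the YAML manifests

Set up NFS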

yum install nfs-utils -y

mkdir -p /usr/local/k8s/redis/pv{7..12} # create the directories to be exported

cat /etc/exports

    /usr/local/k8s/redis/pv7 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv8 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv9 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv10 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv11 172.16.0.0/16(rw,sync,no_root_squash)

exportfs -avr

Create the PVs

cat nfs_pv2.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv7
    spec:
      capacity:
        storage: 500M
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      storageClassName: slow
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv7"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv8
    spec:
      capacity:
        storage: 500M
      accessModes:
        - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv8"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv9
    spec:
      capacity:
        storage: 500M
      accessModes:
        - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv9"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv10
    spec:
      capacity:
        storage: 500M
      accessModes:
        - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv10"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv11
    spec:
      capacity:
        storage: 500M
      accessModes:
        - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv11"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv12
    spec:
      capacity:
        storage: 500M
      accessModes:
        - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv12"

kubectl apply -f nfs_pv2.yaml
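The six PersistentVolume definitions differ only in their name and path, so they could also be generated instead of written by hand. The following is a minimal Python sketch under that assumption; the helper names build_pv and build_pv_list are hypothetical, and the values are copied from nfs_pv2.yaml. It prints JSON, which kubectl accepts in the same way as YAML.

```python
import json

NFS_SERVER = "172.16.0.59"          # NFS server used in nfs_pv2.yaml
BASE_PATH = "/usr/local/k8s/redis"  # parent of the exported directories


def build_pv(index: int) -> dict:
    """Return one PersistentVolume manifest for directory pv<index>."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": f"nfs-pv{index}"},
        "spec": {
            "capacity": {"storage": "500M"},
            "accessModes": ["ReadWriteMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": "slow",
            "nfs": {"server": NFS_SERVER, "path": f"{BASE_PATH}/pv{index}"},
        },
    }


def build_pv_list(first: int = 7, last: int = 12) -> dict:
    """Wrap the manifests in a v1 List so one document holds all of them."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [build_pv(i) for i in range(first, last + 1)],
    }


if __name__ == "__main__":
    # Print the manifests; redirect the output to a file to apply it.
    print(json.dumps(build_pv_list(), indent=2))
```

The output could be redirected to a file (the name is up to you) and applied with kubectl apply -f, just like the hand-written YAML.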

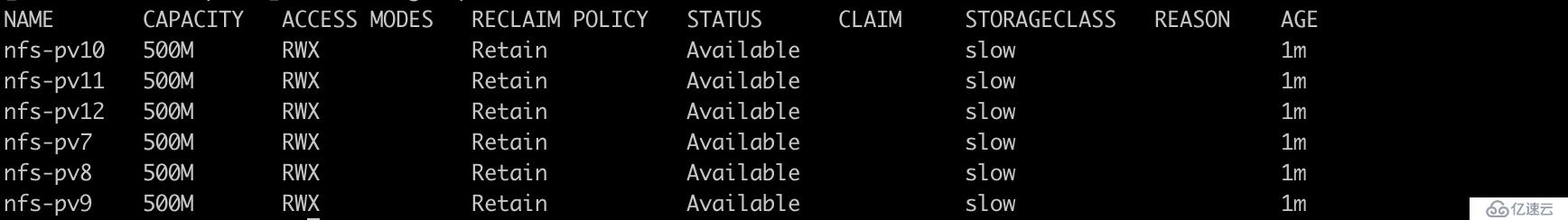

Check the PVs: they were created successfully

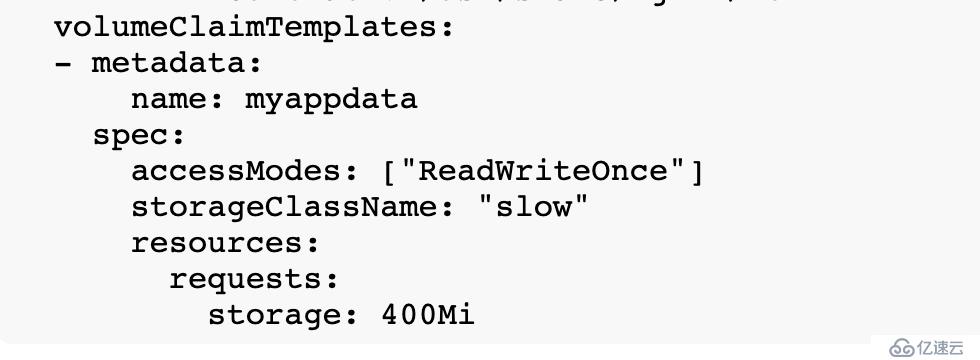

Write the YAML that defines the application

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: myapp-pod
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: myapp
    spec:
      serviceName: myapp
      replicas: 3
      selector:
        matchLabels:
          app: myapp-pod
      template:
        metadata:
          labels:
            app: myapp-pod
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            resources:
              requests:
                cpu: "500m"
                memory: "500Mi"
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: myappdata
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: myappdata
        spec:
          accessModes: ["ReadWriteOnce"]
          storageClassName: "slow"
          resources:
            requests:
              storage: 400Mi

kubectl create -f new-stateful.yaml

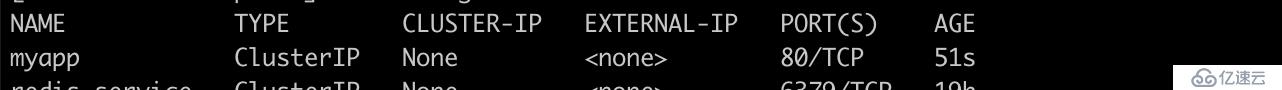

Check that the headless Service was created successfully

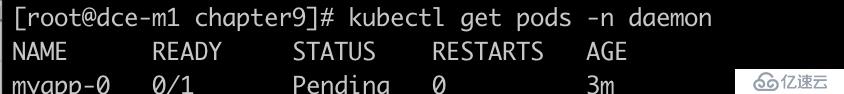

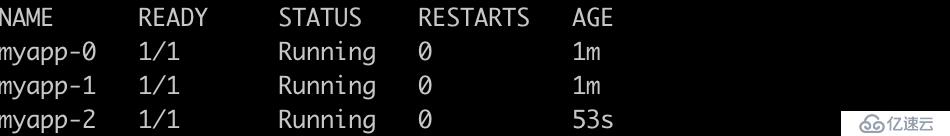

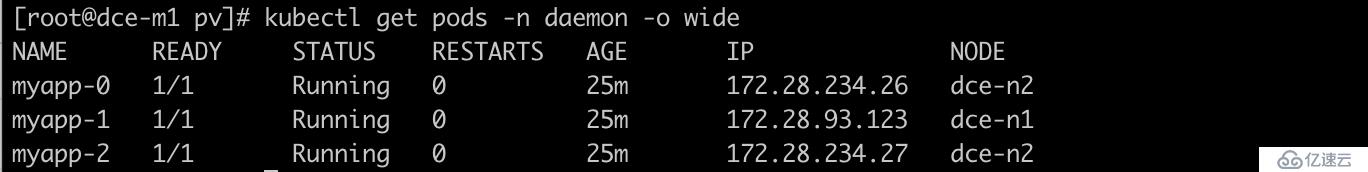

Check whether the Pods were created

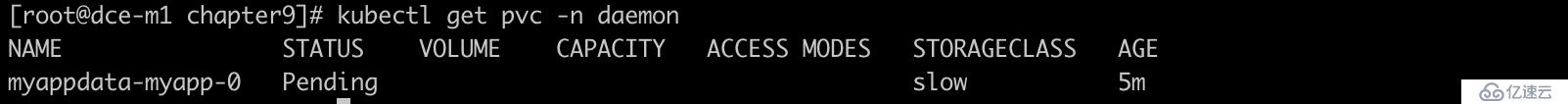

Check whether the PVCs were created

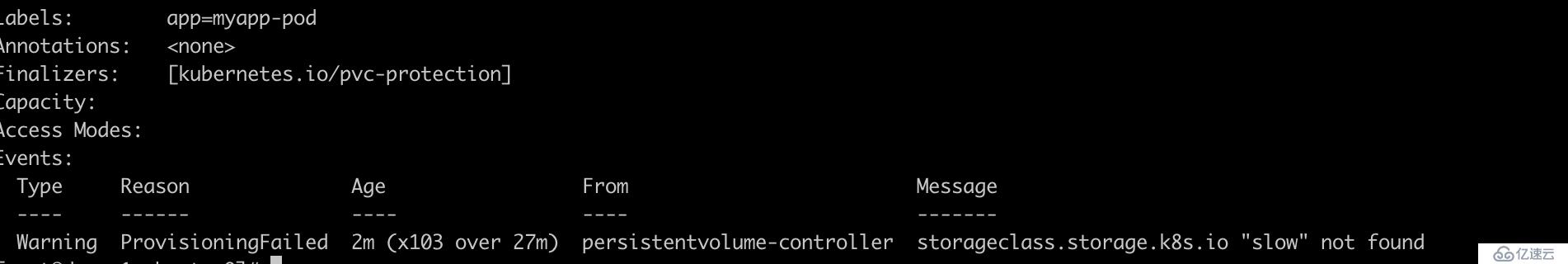

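The Pod did not start because it depends on its PVC. The PVC's events show that no matching volume could be found for the claim, even though the PVs had clearly been defined.

Looking at the related settings, the claim carries this attribute: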

storageClassName: "slow"

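3. Troubleshooting the StatefulSet

The PVC could not be bound, so the Pod could not start. I rechecked the YAML file several times.

Line of thinking: how does a PVC bind to a PV? The two are associated through storageClassName. The PVs were created successfully and also carry storageClassName: slow, yet no match was found.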
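Two of the binding conditions can be ruled out with a quick check: the class names are identical, and the requested size fits into the PV capacity even though one uses a decimal suffix (500M) and the other a binary one (400Mi). The following is a minimal Python sketch of that comparison. The helpers to_bytes and fits are hypothetical and only handle a subset of suffixes; this is not the Kubernetes quantity parser.

```python
# Multipliers for a subset of Kubernetes quantity suffixes.
SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,  # binary
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,      # decimal
}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '500M' or '400Mi' to bytes."""
    for suffix, factor in SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # no suffix: plain number of bytes


def fits(pv_capacity: str, pvc_request: str) -> bool:
    """True when the PV is at least as large as the PVC request."""
    return to_bytes(pv_capacity) >= to_bytes(pvc_request)


# Values taken from the manifests in this article.
pv = {"storageClassName": "slow", "capacity": "500M"}
pvc = {"storageClassName": "slow", "request": "400Mi"}

print(pv["storageClassName"] == pvc["storageClassName"])  # True
print(fits(pv["capacity"], pvc["request"]))                # True
```

Since both checks pass, neither the storage class nor the size explains the failed binding.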

......

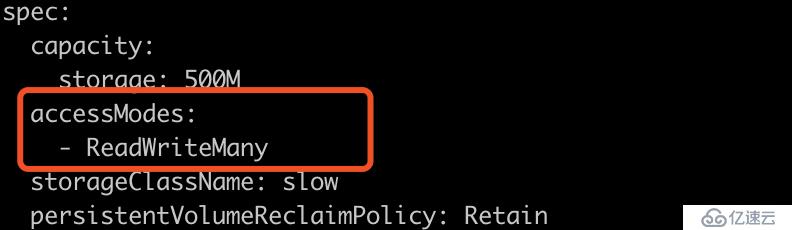

Next I checked whether the access modes of the PVs and the PVC were consistent.

The access mode set on the PVs:

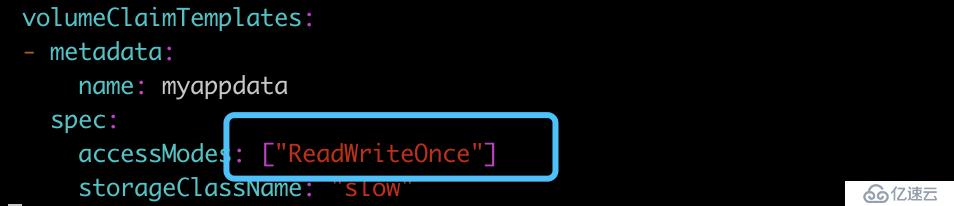

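The access mode declared for the PVC in volumeClaimTemplates:

The two sides do not match: the PVs declare ReadWriteMany, while the claim template requests ReadWriteOnce.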
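The mismatch follows from a simple rule: a PV is a candidate for a claim only if it offers every access mode the claim requests. The following is a minimal Python sketch of that rule, not the actual binder code; the function name access_modes_match is hypothetical.

```python
def access_modes_match(pv_modes: list[str], pvc_modes: list[str]) -> bool:
    """True when the PV offers every access mode the PVC requests."""
    return set(pvc_modes).issubset(pv_modes)


PV_MODES = ["ReadWriteMany"]  # accessModes from nfs_pv2.yaml

# The claim template as first written requests ReadWriteOnce.
print(access_modes_match(PV_MODES, ["ReadWriteOnce"]))  # False

# A claim that requests ReadWriteMany is satisfied by these PVs.
print(access_modes_match(PV_MODES, ["ReadWriteMany"]))  # True
```

A PV that listed both ReadWriteOnce and ReadWriteMany would satisfy either request under this rule.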

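The fix

Delete the PVC: kubectl delete pvc myappdata-myapp-0 -n daemon

Delete the resources created from the YAML file: kubectl delete -f new-stateful.yaml -n daemon

Change the claim template to accessModes: ["ReadWriteMany"] and create the resources again

Check again

Tip: make sure the access modes set on the PV and the PVC agree. The PV must offer every access mode that the PVC requests.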

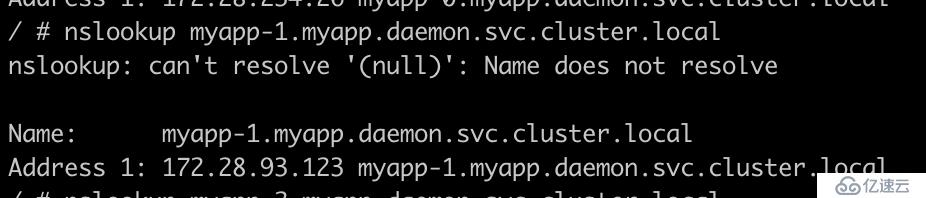

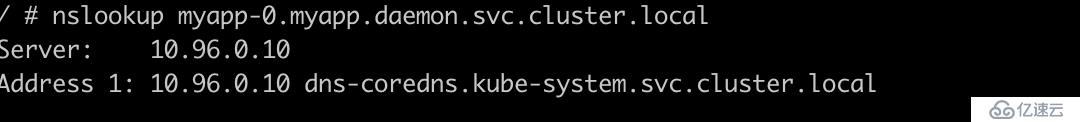

4. Testing the StatefulSet: DNS resolution

kubectl exec -it myapp-0 -n daemon -- sh

nslookup myapp-0.myapp.daemon.svc.cluster.local

The name is built from the following parts:

myapp-0 (Pod name), myapp (headless Service name), daemon (namespace)

FQDN: $(pod name).$(headless service name).$(namespace).svc.cluster.local
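The naming rule can be written down as a small function. This is a minimal Python sketch; the function name pod_fqdn is hypothetical, and cluster.local is assumed as the cluster domain because it is the one used in the nslookup command above.

```python
def pod_fqdn(pod: str, service: str, namespace: str,
             cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name of a StatefulSet Pod behind a headless Service."""
    return f"{pod}.{service}.{namespace}.svc.{cluster_domain}"


# One name per replica of the myapp StatefulSet (3 replicas).
for ordinal in range(3):
    print(pod_fqdn(f"myapp-{ordinal}", "myapp", "daemon"))
# myapp-0.myapp.daemon.svc.cluster.local
# myapp-1.myapp.daemon.svc.cluster.local
# myapp-2.myapp.daemon.svc.cluster.local
```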

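If the container does not include nslookup, the corresponding package has to be installed. A busybox Pod offers the same functionality.

A YAML file for it: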

    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
      namespace: daemon
    spec:
      containers:
      - name: busybox
        image: busybox:1.28.4
        command:
          - sleep
          - "7600"
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
        imagePullPolicy: IfNotPresent
      restartPolicy: Never

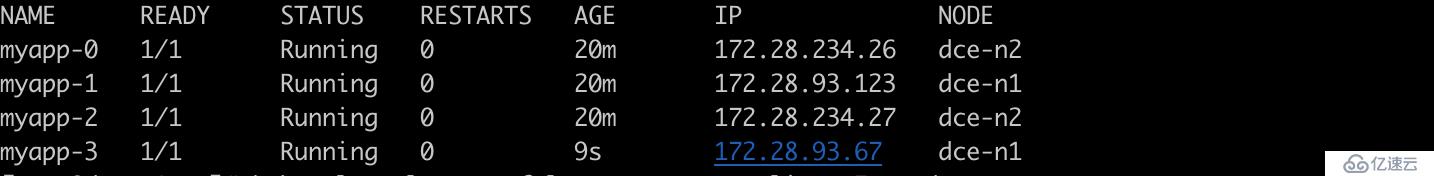

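5. Scaling a StatefulSet

Scaling up:

Scaling a StatefulSet works much like scaling a Deployment: you change the replica count. Scaling up resembles the initial creation, with the ordinal index in the Pod name increasing one by one.

Either kubectl scale or kubectl patch can be used.

Example: kubectl scale statefulset myapp --replicas=4

Scaling down:

Scaling down only requires lowering the Pod replica count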

kubectl patch statefulset myapp -p '{"spec":{"replicas":3}}' -n daemon
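The order in which Pods are added or removed can be sketched as follows. This is a minimal Python illustration that assumes the default ordered Pod management: new Pods are created with ascending ordinals, and Pods are removed starting from the highest ordinal. The function name scale_plan is hypothetical.

```python
def scale_plan(name: str, current: int, desired: int) -> list[str]:
    """Pods to create (ascending ordinals) or delete (descending ordinals)."""
    if desired >= current:
        return [f"{name}-{i}" for i in range(current, desired)]
    return [f"{name}-{i}" for i in range(current - 1, desired - 1, -1)]


print(scale_plan("myapp", 3, 4))  # ['myapp-3']
print(scale_plan("myapp", 4, 3))  # ['myapp-3']
print(scale_plan("myapp", 4, 2))  # ['myapp-3', 'myapp-2']
```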

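Note: scaling needs a PV that can be bound to the PVC of every new replica. NFS is used for persistent storage here and the PVs are created in advance, so enough PVs must already exist before scaling up.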
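Because the PVs are static, it helps to check in advance whether enough of them exist for the target replica count. The following is a minimal Python sketch. The helper names pvc_names and enough_pvs are hypothetical, the PVC naming pattern is the one seen in myappdata-myapp-0, and the count of six PVs comes from nfs_pv2.yaml.

```python
def pvc_names(template: str, statefulset: str, replicas: int) -> list[str]:
    """PVC names follow <template>-<statefulset>-<ordinal>."""
    return [f"{template}-{statefulset}-{i}" for i in range(replicas)]


def enough_pvs(available_pvs: int, replicas: int, templates: int = 1) -> bool:
    """Each replica needs one PV per volumeClaimTemplate."""
    return available_pvs >= replicas * templates


print(pvc_names("myappdata", "myapp", 4))
# ['myappdata-myapp-0', 'myappdata-myapp-1', 'myappdata-myapp-2', 'myappdata-myapp-3']
print(enough_pvs(available_pvs=6, replicas=4))  # True
print(enough_pvs(available_pvs=6, replicas=7))  # False
```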

6. Rolling updates of a StatefulSet

Rolling update

Canary release

Rolling update

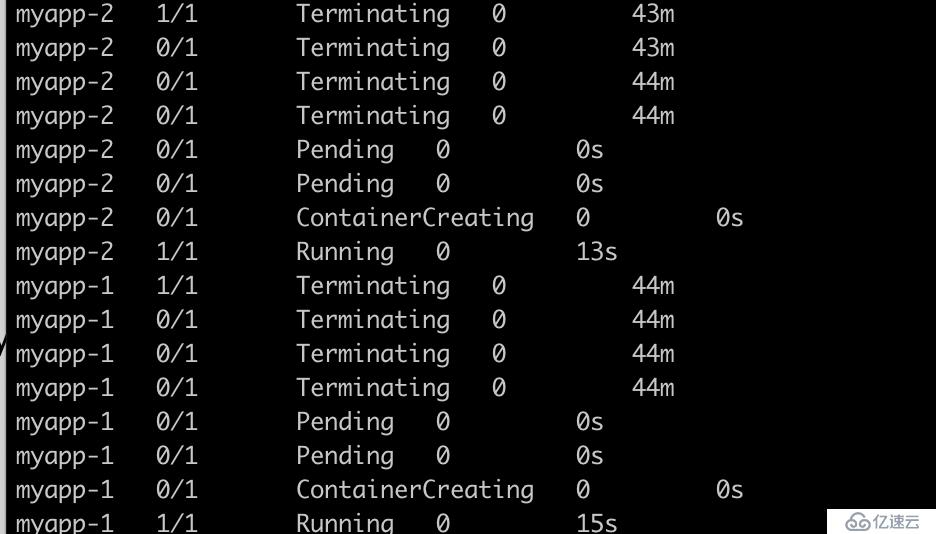

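A rolling update starts with the Pod that has the highest ordinal index. Each Pod is terminated and updated before the next one begins. RollingUpdate is the default update strategy of a StatefulSet.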
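The update order can be sketched in a few lines of Python. The function name rolling_update_order is hypothetical. The optional partition argument mirrors the spec.updateStrategy.rollingUpdate.partition field, which is one common way to do the canary release listed above: only Pods whose ordinal is greater than or equal to the partition are updated. Treat this as an illustration of the ordering, not of the controller itself.

```python
def rolling_update_order(name: str, replicas: int, partition: int = 0) -> list[str]:
    """Pods in the order they are updated: highest ordinal first.

    Pods whose ordinal is below `partition` stay on the old revision.
    """
    return [f"{name}-{i}" for i in range(replicas - 1, partition - 1, -1)]


print(rolling_update_order("myapp", 3))
# ['myapp-2', 'myapp-1', 'myapp-0']
print(rolling_update_order("myapp", 3, partition=2))
# ['myapp-2']
```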

kubectl set image statefulset/myapp myapp=ikubernetes/myapp:v2 -n daemon

The upgrade process

Check the Pod status

kubectl get pods -n daemon

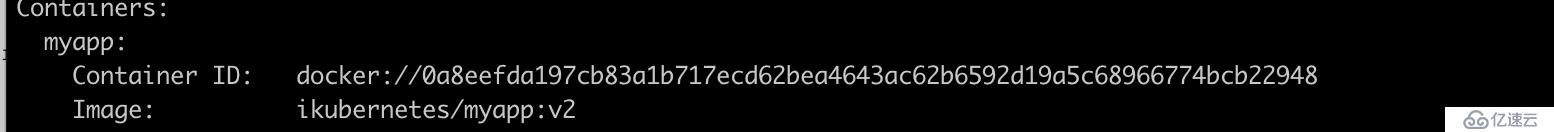

Check whether the image was updated after the upgrade

kubectl describe pod myapp-0 -n daemon
