master节点:

10.20.10.103 name=cnvs-kubm-101-103 role: [controlplane,worker,etcd] user: k8suser

10.20.10.104 name=cnvs-kubm-101-104 role: [controlplane,worker,etcd] user: k8suser

10.20.10.105 name=cnvs-kubm-101-105 role: [controlplane,worker,etcd] user: k8suser

集群管理节点:10.20.10.103

vip:10.20.10.253

node 节点:

10.20.10.106 name=cnvs-kubnode-101-106 role: [controlplane,worker,etcd] user: k8suser

10.20.10.107 name=cnvs-kubnode-101-107 role: [controlplane,worker,etcd] user: k8suser

10.20.10.108 name=cnvs-kubnode-101-108 role: [controlplane,worker,etcd] user: k8suser

10.20.10.118 name=cnvs-kubnode-101-118 role: [controlplane,worker,etcd] user: k8suser

10.20.10.120 name=cnvs-kubnode-101-120 role: [controlplane,worker,etcd] user: k8suser

10.20.10.122 name=cnvs-kubnode-101-122 role: [controlplane,worker,etcd] user: k8suser

10.20.10.123 name=cnvs-kubnode-101-123 role: [controlplane,worker,etcd] user: k8suser

10.20.10.124 name=cnvs-kubnode-101-124 role: [controlplane,worker,etcd] user: k8suser

rke部署不允许使用root 用户,需要新建一个集群部署账户或者将现有账户添加docker 用户组.

本次采取将现有k8suser账户添加到 docker

#单机

usermod k8suser -G docker

#批量

ansible kub-all -m shell -a "usermod k8suser -G docker"

#验证

[root@cnvs-kubm-101-103 kub-deploy]# ansible kub-all -m shell -a "id k8suser"

10.20.10.107 | CHANGED | rc=0 >>

uid=1000(k8suser) gid=1000(k8suser) groups=1000(k8suser),992(docker)

#kub-all 包含集群所有主机groupadd docker && useradd rancher -G docker

echo "123456" | passwd --stdin rancher在安装主机上与安装集群所有节点(安装集群用户)打通ssh无密码验证。

ansible kub-all -m shell -a "echo 'ssh-rsa AAAAB3NzaC1yaLuTb ' >>/home/k8suser/.ssh/authorized_keys"

mkdir -p /etc/rke/

下载地址 https://www.rancher.cn/docs/rancher/v2.x/cn/install-prepare/download/rke/

unzip rke_linux-amd64.zip

mv rke_linux-amd64 /usr/bin/rke

[root@cnvs-kubm-101-103 rke]# chmod 755 /usr/bin/rke

[root@cnvs-kubm-101-103 rke]# rke -v

rke version v0.2.8

address:公共域名或IP地址

user:可以运行docker命令的用户,需要是普通用户。

role:分配给节点的Kubernetes角色列表

ssh_key_path:用于对节点进行身份验证的SSH私钥的路径(默认为~/.ssh/id_rsa)

cat > cluster.yml << EOF

nodes:

- address: 10.20.10.103

user: k8suser

role: [controlplane,worker,etcd]

- address: 10.20.10.104

user: k8suser

role: [controlplane,worker,etcd]

- address: 10.20.10.105

user: k8suser

role: [controlplane,worker,etcd]

- address: 10.20.10.106

user: k8suser

role: [worker]

labels: {traefik: traefik-outer}

- address: 10.20.10.107

user: k8suser

role: [worker]

labels: {traefik: traefik-outer}

- address: 10.20.10.108

user: k8suser

role: [worker]

labels: {traefik: traefik-outer}

- address: 10.20.10.118

user: k8suser

role: [worker]

labels: {traefik: traefik-inner}

- address: 10.20.10.120

user: k8suser

role: [worker]

labels: {traefik: traefik-inner}

- address: 10.20.10.122

user: k8suser

role: [worker]

labels: {app: ingress}

- address: 10.20.10.123

user: k8suser

role: [worker]

labels: {app: ingress}

- address: 10.20.10.124

user: k8suser

role: [worker]

labels: {app: ingress}

ingress:

node_selector: {app: ingress}

cluster_name: cn-kube-prod

services:

etcd:

snapshot: true

creation: 6h

retention: 24h

kubeproxy:

extra_args:

proxy-mode: ipvs

kubelet:

extra_args:

cgroup-driver: 'systemd'

authentication:

strategy: x509

sans:

- "10.20.10.252"

- "10.20.10.253"

- "cnpaas.pt.com"

EOFaddress:公共域名或IP地址

user:可以运行docker命令的用户,需要是普通用户。

role:分配给节点的Kubernetes角色列表

ssh_key_path:用于对节点进行身份验证的SSH私钥的路径(默认为~/.ssh/id_rsa)cd /etc/rke

rke up --- 然后等待结束~!

INFO[3723] [sync] Syncing nodes Labels and Taints

INFO[3725] [sync] Successfully synced nodes Labels and Taints

INFO[3725] [network] Setting up network plugin: canal

INFO[3725] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes

................略

INFO[3751] [addons] Executing deploy job rke-metrics-addon

INFO[3761] [addons] Metrics Server deployed successfully

INFO[3761] [ingress] Setting up nginx ingress controller

INFO[3761] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[3761] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[3761] [addons] Executing deploy job rke-ingress-controller

INFO[3771] [ingress] ingress controller nginx deployed successfully

INFO[3771] [addons] Setting up user addons

INFO[3771] [addons] no user addons defined

INFO[3771] Finished building Kubernetes cluster successfully

[root@cnvs-kubm-101-103 rke]#

Kubernetes集群状态由Kubernetes集群中的集群配置文件cluster.yml和组件证书组成,由RKE生成,但根据您的RKE版本,集群状态的保存方式不同。

从v0.2.0开始,RKE在集群配置文件cluster.yml的同一目录中创建一个.rkestate文件。该.rkestate文件包含集群的当前状态,包括RKE配置和证书。需要保留此文件以更新集群或通过RKE对集群执行任何操作。

[root@cndh2321-6-13 rke]# ll

-rw-r----- 1 root root 121198 Aug 30 18:04 cluster.rkestate

-rw-r--r-- 1 root root 1334 Aug 30 16:31 cluster.yml

-rw-r----- 1 root root 5431 Aug 30 17:08 kube_config_cluster.yml

-rwxr-xr-x 1 root root 10833540 Aug 29 20:07 rke_linux-amd64.zip

集群管理节点:10.20.10.103

1:安装完成首先修改:kube_config_cluster.yml

apiVersion: v1

kind: Config

clusters:

- cluster:

.......

FLS0tLS0K

server: "https://10.20.10.253:16443" <=== 修改地址为集群master节点vip 地址和端口:

.....

user: "kube-admin-cn-kube-prod"2:如果安装节点部署集群节点,需要将部署节点 copy (kube_config_cluster.yml) 配置文件至集群管理节点

scp kube_config_cluster.yml 10.20.10.103:/etc/kubernetes/3:配置环境变量:

rm -rf $HOME/.kube

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/kube_config_cluster.yml $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

yum -y install kubectl#验证集群

查看集群版本:

kubectl --kubeconfig /etc/kubernetes/kube_config_cluster.yml version or kubectl version

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.3", GitCommit:"2d3c76f9091b6bec110a5e63777c332469e0cba2", GitTreeState:"clean", BuildDate:"2019-08-19T11:13:54Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.6", GitCommit:"96fac5cd13a5dc064f7d9f4f23030a6aeface6cc", GitTreeState:"clean", BuildDate:"2019-08-19T11:05:16Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

kubectl --kubeconfig /etc/kubernetes/kube_config_cluster.yml get nodes or kubectl get nodes

NAME STATUS ROLES AGE VERSION INTERNAL-IP CONTAINER-RUNTIME

10.20.10.103 Ready controlplane,etcd,worker 29m v1.14.6 10.20.10.103 docker://18.9.8

10.20.10.104 Ready controlplane,etcd,worker 29m v1.14.6 10.20.10.104 docker://18.9.8

10.20.10.105 Ready controlplane,etcd,worker 29m v1.14.6 10.20.10.105 docker://18.9.8

10.20.10.106 Ready worker 29m v1.14.6 10.20.10.106 docker://18.9.8

10.20.10.107 Ready worker 29m v1.14.6 10.20.10.107 docker://18.9.8

10.20.10.108 Ready worker 29m v1.14.6 10.20.10.108 docker://18.9.8

10.20.10.118 Ready worker 29m v1.14.6 10.20.10.118 docker://18.9.8

10.20.10.120 Ready worker 29m v1.14.6 10.20.10.120 docker://18.9.8

10.20.10.122 Ready worker 24m v1.14.6 10.20.10.122 docker://18.9.8

10.20.10.123 Ready worker 29m v1.14.6 10.20.10.123 docker://18.9.8

10.20.10.124 Ready worker 29m v1.14.6 10.20.10.124 docker://18.9.8#traefik=traefik-outer

kubectl get node -l "traefik=traefik-outer"

NAME STATUS ROLES AGE VERSION

10.20.10.106 Ready worker 31m v1.14.6

10.20.10.107 Ready worker 31m v1.14.6

10.20.10.108 Ready worker 31m v1.14.6

#traefik=traefik-outer

kubectl get node -l "traefik=traefik-inner"

NAME STATUS ROLES AGE VERSION

10.20.10.118 Ready worker 32m v1.14.6

10.20.10.120 Ready worker 32m v1.14.6

#app=ingress

kubectl get node -l "app=ingress"

NAME STATUS ROLES AGE VERSION

10.20.10.122 Ready worker 26m v1.14.6

10.20.10.123 Ready worker 31m v1.14.6

10.20.10.124 Ready worker 31m v1.14.6

#服务

[root@cnvs-kubm-101-103 k8suser]# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 50m

ingress-nginx default-http-backend ClusterIP 10.43.63.186 <none> 80/TCP 34m

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 34m

kube-system metrics-server ClusterIP 10.43.179.102 <none> 443/TCP 34m

kube-system tiller-deploy ClusterIP 10.43.152.163 <none> 44134/TCP 6m38s

#部署环境

[root@cnvs-kubm-101-103 k8suser]# kubectl get deploy -A

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx default-http-backend 1/1 1 1 34m

kube-system coredns 3/3 3 3 34m

kube-system coredns-autoscaler 1/1 1 1 34m

kube-system metrics-server 1/1 1 1 34m

kube-system tiller-deploy 1/1 1 1 6m55s

#容器

[root@cnvs-kubm-101-103 k8suser]# kubectl get pods -o wide -A

NAMESPACE NAME READY STATUS AGE IP NODE

ingress-nginx default-http-backend-5954bd5d8c-m6k9b 1/1 Running 30m 10.42.6.2 10.20.10.103

ingress-nginx nginx-ingress-controller-sgkm4 1/1 Running 25m 10.20.10.122 10.20.10.122

ingress-nginx nginx-ingress-controller-t2644 1/1 Running 28m 10.20.10.123 10.20.10.123

ingress-nginx nginx-ingress-controller-zq2lj 1/1 Running 21m 10.20.10.124 10.20.10.124

kube-system canal-5df7s 2/2 Running 31m 10.20.10.118 10.20.10.118

kube-system canal-62t7j 2/2 Running 31m 10.20.10.103 10.20.10.103

kube-system canal-cczs2 2/2 Running 31m 10.20.10.108 10.20.10.108

kube-system canal-kzzz7 2/2 Running 31m 10.20.10.106 10.20.10.106

kube-system canal-lp97g 2/2 Running 31m 10.20.10.107 10.20.10.107

kube-system canal-p4wbh 2/2 Running 31m 10.20.10.105 10.20.10.105

kube-system canal-qm4l6 2/2 Running 31m 10.20.10.104 10.20.10.104

kube-system canal-rb8j6 2/2 Running 31m 10.20.10.122 10.20.10.122

kube-system canal-w6rp7 2/2 Running 31m 10.20.10.124 10.20.10.124

kube-system canal-wwjjc 2/2 Running 31m 10.20.10.120 10.20.10.120

kube-system canal-x5xw6 2/2 Running 31m 10.20.10.123 10.20.10.123

kube-system coredns-autoscaler-5d5d49b8ff-sdbpj 1/1 Running 31m 10.42.1.3 10.20.10.118

kube-system coredns-bdffbc666-98vp9 1/1 Running 20m 10.42.4.2 10.20.10.124

kube-system coredns-bdffbc666-k5qtb 1/1 Running 20m 10.42.6.3 10.20.10.103

kube-system coredns-bdffbc666-qmrwr 1/1 Running 31m 10.42.1.2 10.20.10.118

kube-system metrics-server-7f6bd4c888-bpnk2 1/1 Running 30m 10.42.1.4 10.20.10.118

kube-system rke-coredns-addon-deploy-job-4t2xd 0/1 Completed 31m 10.20.10.103 10.20.10.103

kube-system rke-ingress-controller-deploy-job-f69dg 0/1 Completed 30m 10.20.10.103 10.20.10.103

kube-system rke-metrics-addon-deploy-job-v2pqk 0/1 Completed 31m 10.20.10.103 10.20.10.103

kube-system rke-network-plugin-deploy-job-92wv2 0/1 Completed 31m 10.20.10.103 10.20.10.103

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

helm init --history-max 200

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'[root@cnvs-kubm-101-103 k8suser]# helm version

Client: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}

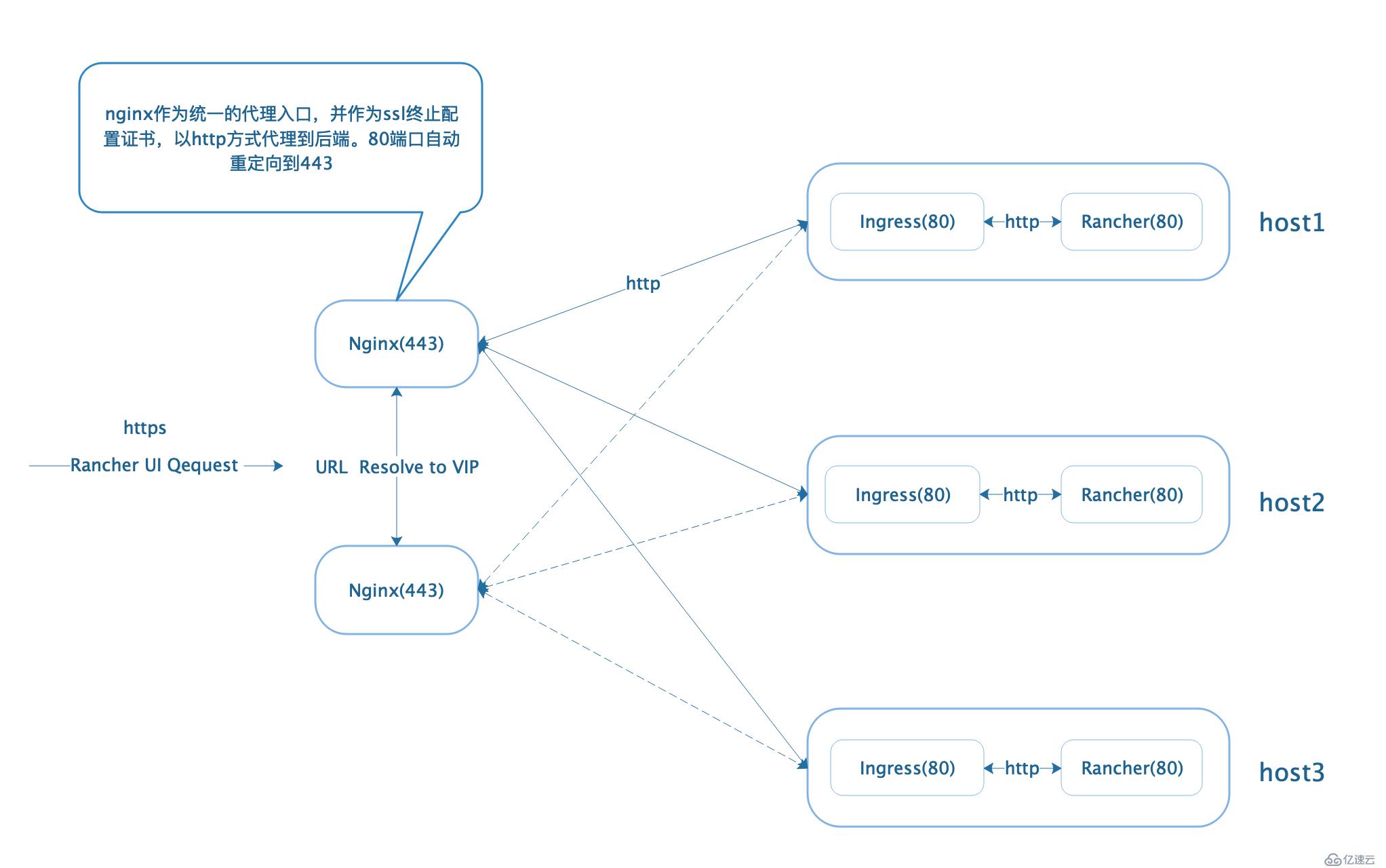

Server: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}以外部HTTP L7负载均衡器作为访问入口,使用公司购买企业ssl证书,使用外部七层负载均衡器作为访问入口,那么将需要把ssl证书配置在L7负载均衡器上面,如果是权威认证证书,rancher侧则无需配置证书。pt业务环境使用nginx作为代理工具,将ssl 放在nginx端。

Rancher Server(rancher-stable)稳定版Helm charts仓库,此仓库版本推荐用于生产环境。

helm repo add rancher-stable https://releases.rancher.com/server-charts/stable

[root@cnvs-kubm-101-103 kub-deploy]# helm search rancher

NAME CHART VERSION APP VERSION DESCRIPTION

rancher-stable/rancher 2.2.8 v2.2.8 Install Rancher Server to manage Kubernetes clusters acro...helm install rancher-stable/rancher \

--name rancher \

--namespace cattle-system \

--set auditLog.level=1 \

--set auditLog.maxAge=3 \

--set auditLog.maxBackups=2 \

--set auditLog.maxSize=2000 \

--set tls=external \

--set hostname=cnpaas.pt.com

注意:内网dns: cnpass.k8suser.com指向 master 节点 vip :10.20.10.253

如果走内网域名干预,内部api接口转发nginx 要同时配置 tcp和heep转发,详见尾部nginx配置。

NOTES:

Rancher Server has been installed.

.......

Browse to https://cnpass.k8suser.com

Happy Containering!

完成此步骤即可从公网访问rancher平台管理平台:

upstream cn-prod-rancher {

server 10.20.10.122;

server 10.20.10.123;

server 10.20.10.124;

}

map $http_upgrade $connection_upgrade {

default Upgrade;

'' close;

}

server {

# listen 443 ssl;

listen 443 ssl http2;

server_name cnpaas.pt.com;

ssl_certificate /usr/local/openresty/nginx/ssl2018/k8suser.com.20201217.pem;

ssl_certificate_key /usr/local/openresty/nginx/ssl2018/k8suser.com.20201217.key;

access_log /data/nginxlog/k8scs.k8suser.com.log access;

location / {

proxy_set_header Host $host;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-Port $server_port;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_pass http://cn-prod-rancher;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

# This allows the ability for the execute shell window to remain open for up to 15 minutes.

## Without this parameter, the default is 1 minute and will automatically close.

proxy_read_timeout 900s;

proxy_buffering off;

}

}

server {

listen 80;

server_name FQDN;

return 301 https://$server_name$request_uri;

}

stream {

upstream kube_apiserver {

least_conn;

server 10.20.10.103:6443 weight=5 max_fails=2 fail_timeout=10s;

server 10.20.10.104:6443 weight=5 max_fails=2 fail_timeout=10s;

server 10.20.10.105:6443 weight=5 max_fails=2 fail_timeout=10s;

}

server {

listen 0.0.0.0:16443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

worker_processes 4;

worker_rlimit_nofile 40000;

events {

worker_connections 8192;

}

http {

upstream cn-prod-rancher {

server 10.20.10.122;

server 10.20.10.123;

server 10.20.10.124;

}

gzip on;

gzip_disable "msie6";

gzip_disable "MSIE [1-6]\.(?!.*SV1)";

gzip_vary on;

gzip_static on;

gzip_proxied any;

gzip_min_length 0;

gzip_comp_level 8;

gzip_buffers 16 8k;

gzip_http_version 1.1;

gzip_types

text/xml application/xml application/atom+xml application/rss+xml application/xhtml+xml image/svg+xml application/font-woff

text/javascript application/javascript application/x-javascript

text/x-json application/json application/x-web-app-manifest+json

text/css text/plain text/x-component

font/opentype application/x-font-ttf application/vnd.ms-fontobject font/woff2

image/x-icon image/png image/jpeg;

map $http_upgrade $connection_upgrade {

default Upgrade;

'' close;

}

server {

listen 443 ssl http2;

server_name cnpaas.pt.com;

ssl_certificate /usr/local/nginx/k8suser.com.20201217.pem;

ssl_certificate_key /usr/local/nginx/ssl.pem;

access_log /data/nginxlog/k8scs.k8suser.com.log ;

location / {

proxy_set_header Host $host;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-Port $server_port;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_pass http://cn-prod-rancher;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

# This allows the ability for the execute shell window to remain open for up to 15 minutes.

## Without this parameter, the default is 1 minute and will automatically close.

proxy_read_timeout 900s;

proxy_buffering off;

}

}

server {

listen 80;

server_name FQDN;

return 301 https://$server_name$request_uri;

}

}

stream {

upstream kube_apiserver {

least_conn;

server 10.20.10.103:6443 weight=5 max_fails=2 fail_timeout=10s;

server 10.20.10.104:6443 weight=5 max_fails=2 fail_timeout=10s;

server 10.20.10.105:6443 weight=5 max_fails=2 fail_timeout=10s;

}

server {

listen 0.0.0.0:16443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

浏览器访问 cnpaas.pt.com 进入引导新建管理员密码界面!

[root@cnvs-kubm-101-103 nginx]# kubectl get pods -o wide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

cattle-system cattle-cluster-agent-5c978b9d49-2mfqb 1/1 Running 8 44m 10.42.6.5 10.20.10.103

cattle-system cattle-node-agent-8wbj9 1/1 Running 8 44m 10.20.10.123 10.20.10.123

cattle-system cattle-node-agent-b2qsm 1/1 Running 8 44m 10.20.10.107 10.20.10.107

cattle-system cattle-node-agent-bgxvb 1/1 Running 8 44m 10.20.10.122 10.20.10.122

cattle-system cattle-node-agent-hkx6n 1/1 Running 8 44m 10.20.10.105 10.20.10.105

cattle-system cattle-node-agent-kbf8c 1/1 Running 8 44m 10.20.10.120 10.20.10.120

cattle-system cattle-node-agent-mxws9 1/1 Running 8 44m 10.20.10.118 10.20.10.118

cattle-system cattle-node-agent-n7z5w 1/1 Running 8 44m 10.20.10.108 10.20.10.108

cattle-system cattle-node-agent-p46tp 1/1 Running 8 44m 10.20.10.106 10.20.10.106

cattle-system cattle-node-agent-qxp6g 1/1 Running 8 44m 10.20.10.104 10.20.10.104

cattle-system cattle-node-agent-rqkkz 1/1 Running 8 44m 10.20.10.124 10.20.10.124

cattle-system cattle-node-agent-srs8f 1/1 Running 8 44m 10.20.10.103 10.20.10.103

cattle-system rancher-76bc7dccd5-7h59d 2/2 Running 0 62m 10.42.4.4 10.20.10.124

cattle-system rancher-76bc7dccd5-g4cwn 2/2 Running 0 62m 10.42.5.3 10.20.10.106

cattle-system rancher-76bc7dccd5-rx7dh 2/2 Running 0 62m 10.42.9.3 10.20.10.120

ingress-nginx default-http-backend-5954bd5d8c-m6k9b 1/1 Running 0 102m 10.42.6.2 10.20.10.103

ingress-nginx nginx-ingress-controller-sgkm4 1/1 Running 0 97m 10.20.10.122 10.20.10.122

ingress-nginx nginx-ingress-controller-t2644 1/1 Running 0 99m 10.20.10.123 10.20.10.123

ingress-nginx nginx-ingress-controller-zq2lj 1/1 Running 0 92m 10.20.10.124 10.20.10.124

kube-system canal-5df7s 2/2 Running 0 102m 10.20.10.118 10.20.10.118

kube-system canal-62t7j 2/2 Running 0 102m 10.20.10.103 10.20.10.103

kube-system canal-cczs2 2/2 Running 0 102m 10.20.10.108 10.20.10.108

kube-system canal-kzzz7 2/2 Running 0 102m 10.20.10.106 10.20.10.106

kube-system canal-lp97g 2/2 Running 0 102m 10.20.10.107 10.20.10.107

kube-system canal-p4wbh 2/2 Running 0 102m 10.20.10.105 10.20.10.105

kube-system canal-qm4l6 2/2 Running 0 102m 10.20.10.104 10.20.10.104

kube-system canal-rb8j6 2/2 Running 0 102m 10.20.10.122 10.20.10.122

kube-system canal-w6rp7 2/2 Running 0 102m 10.20.10.124 10.20.10.124

kube-system canal-wwjjc 2/2 Running 0 102m 10.20.10.120 10.20.10.120

kube-system canal-x5xw6 2/2 Running 0 102m 10.20.10.123 10.20.10.123

kube-system coredns-autoscaler-5d5d49b8ff-sdbpj 1/1 Running 0 102m 10.42.1.3 10.20.10.118

kube-system coredns-bdffbc666-98vp9 1/1 Running 0 92m 10.42.4.2 10.20.10.124

kube-system coredns-bdffbc666-k5qtb 1/1 Running 0 92m 10.42.6.3 10.20.10.103

kube-system coredns-bdffbc666-qmrwr 1/1 Running 0 102m 10.42.1.2 10.20.10.118

kube-system metrics-server-7f6bd4c888-bpnk2 1/1 Running 0 102m 10.42.1.4 10.20.10.118

kube-system rke-coredns-addon-deploy-job-4t2xd 0/1 Completed 0 102m 10.20.10.103 10.20.10.103

kube-system rke-ingress-controller-deploy-job-f69dg 0/1 Completed 0 102m 10.20.10.103 10.20.10.103

kube-system rke-metrics-addon-deploy-job-v2pqk 0/1 Completed 0 102m 10.20.10.103 10.20.10.103

kube-system rke-network-plugin-deploy-job-92wv2 0/1 Completed 0 103m 10.20.10.103 10.20.10.103

kube-system tiller-deploy-7695cdcfb8-dcw5w 1/1 Running 0 74m 10.42.6.4 10.20.10.103

https://blog.51cto.com/michaelkang/category21.html

https://www.rancher.cn/docs/rke/latest/cn/example-yamls/

https://www.rancher.cn/docs/rancher/v2.x/cn/install-prepare/download/rke/

https://www.rancher.cn/docs/rancher/v2.x/cn/configuration/cli/

http://www.eryajf.net/2723.html

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。