Building Oracle 10g RAC on Solaris 10 (x86): Configuring the System Environment (2)

System environment:

Operating system: Solaris 10 (x86-64)

Cluster: Oracle CRS 10.2.0.1.0

Oracle: Oracle 10.2.0.1.0

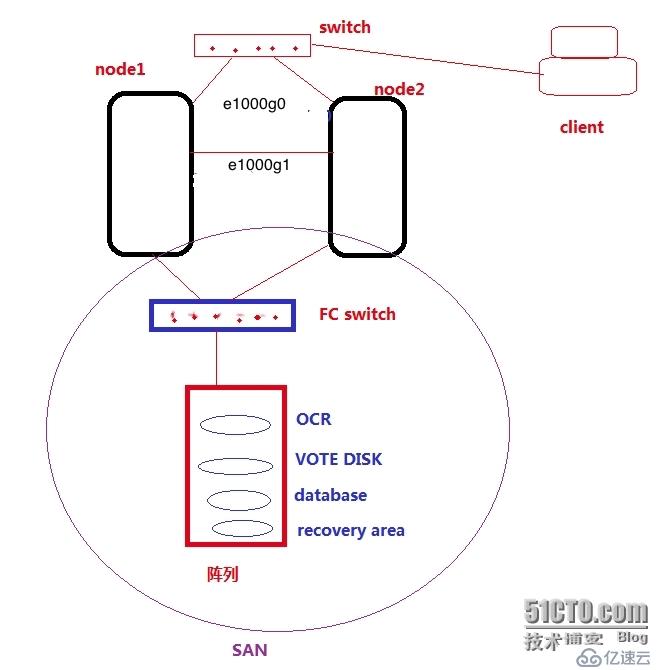

The figure above shows the RAC system architecture.

I. Establish trust relationships between the hosts (on all nodes)

1. Configure the /etc/hosts.equiv file on each host

[root@node1:/]# cat /etc/hosts.equiv
node1 root
node1 oracle
node1-vip root
node1-vip oracle
node1-priv root
node1-priv oracle
node2 root
node2 oracle
node2-vip root
node2-vip oracle
node2-priv root
node2-priv oracle

2. Configure the .rhosts file for the oracle user

[oracle@node1:/export/home/oracle]$ cat .rhosts
node1 root
node1 oracle
node1-vip root
node1-vip oracle
node1-priv root
node1-priv oracle
node2 root
node2 oracle
node2-vip root
node2-vip oracle
node2-priv root
node2-priv oracle
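Both files list the same host/user pairs, so they can be generated rather than typed by hand on every node. The following is a minimal POSIX sh sketch, assuming the six host names and two users shown above; `gen_equiv` is a hypothetical helper, not part of Solaris or Oracle. It only prints the list, so you can review it before redirecting it into the real files yourself.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): print one "host user" line for every
# combination of the given host names and user names.
gen_equiv() {
    # $1: space-separated host names, $2: space-separated user names
    for h in $1; do
        for u in $2; do
            printf '%s %s\n' "$h" "$u"
        done
    done
}

# Host and user lists taken from the files shown above; adjust for your cluster.
HOSTS="node1 node1-vip node1-priv node2 node2-vip node2-priv"
USERS="root oracle"

# Review the output first, then redirect it, for example:
#   gen_equiv "$HOSTS" "$USERS" > /etc/hosts.equiv                 (as root)
#   gen_equiv "$HOSTS" "$USERS" > /export/home/oracle/.rhosts      (as oracle)
gen_equiv "$HOSTS" "$USERS"
```

Generating both files from one list keeps node1 and node2 identical, which is what the user-equivalence check later expects.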

3. Enable the related services and verify

[root@node1:/]# svcs -a |grep rlogin
disabled 10:05:17 svc:/network/login:rlogin
[root@node1:/]# svcadm enable svc:/network/login:rlogin
[root@node1:/]# svcadm enable svc:/network/rexec:default
[root@node1:/]# svcadm enable svc:/network/shell:default
[root@node1:/]# svcs -a |grep rlogin
online 11:37:34 svc:/network/login:rlogin
[root@node1:/]# su - oracle
Oracle Corporation SunOS 5.10 Generic Patch January 2005
[oracle@node1:/export/home/oracle]$ rlogin node1
Last login: Wed Jan 21 11:29:36 from node2-priv
Oracle Corporation SunOS 5.10 Generic Patch January 2005
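The transcript above only greps for rlogin, so it is worth confirming on each node that all three enabled services are actually online. A minimal sketch, assuming the `STATE STIME FMRI` column order that `svcs -a` prints above; `check_rsvcs` is a hypothetical helper name and the sample input is made up for illustration.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): read "svcs -a" output on stdin and
# print the state of the three services enabled above.
# Assumes the STATE STIME FMRI column order shown in the transcript.
# Exit status is 0 only if all three services are online.
check_rsvcs() {
    awk '
        BEGIN {
            n = split("svc:/network/login:rlogin svc:/network/rexec:default svc:/network/shell:default", want, " ")
        }
        NF >= 3 { state[$3] = $1 }
        END {
            bad = 0
            for (i = 1; i <= n; i++) {
                s = (want[i] in state) ? state[want[i]] : "missing"
                if (s != "online") bad = 1
                printf "%s %s\n", want[i], s
            }
            exit bad
        }'
}

# Made-up input for illustration; on a real node use: svcs -a | check_rsvcs
sample='STATE          STIME    FMRI
online         11:37:34 svc:/network/login:rlogin
online         11:37:40 svc:/network/rexec:default
disabled       10:05:17 svc:/network/shell:default'

printf '%s\n' "$sample" | check_rsvcs || echo "some remote-access services are not online"
```

A service that does not appear in the input at all is reported as `missing`, so a typo in an FMRI shows up instead of silently passing.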

II. Checking the system environment before installing CRS (on node1)

[oracle@node1:/export/home/oracle]$ unzip 10201_clusterware_solx86_64.zip

[oracle@node1:/export/home/oracle/clusterware/cluvfy]$ ./runcluvfy.sh

USAGE:

cluvfy [ -help ]

cluvfy stage { -list | -help }

cluvfy stage {-pre|-post} <stage-name> <stage-specific options> [-verbose]

cluvfy comp { -list | -help }

cluvfy comp <component-name> <component-specific options> [-verbose]

[oracle@node1:/export/home/oracle/clusterware/cluvfy]$ ./runcluvfy.sh stage -pre crsinst -n node1,node2 -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "node1"

Destination Node Reachable?

------------------------------------ ------------------------

node1 yes

node2 yes

Result: Node reachability check passed from node "node1".

Checking user equivalence...

Check: User equivalence for user "oracle"

Node Name Comment

------------------------------------ ------------------------

node2 passed

node1 passed

Result: User equivalence check passed for user "oracle".

Checking administrative privileges...

Check: Existence of user "oracle"

Node Name User Exists Comment

------------ ------------------------ ------------------------

node2 yes passed

node1 yes passed

Result: User existence check passed for "oracle".

Check: Existence of group "oinstall"

Node Name Status Group ID

------------ ------------------------ ------------------------

node2 exists 200

node1 exists 200

Result: Group existence check passed for "oinstall".

Check: Membership of user "oracle" in group "oinstall" [as Primary]

Node Name User Exists Group Exists User in Group Primary Comment

---------------- ------------ ------------ ------------ ------------ ------------

node2 yes yes yes yes passed

node1 yes yes yes yes passed

Result: Membership check for user "oracle" in group "oinstall" [as Primary] passed.

Administrative privileges check passed.

Checking node connectivity...

Interface information for node "node2"

Interface Name IP Address Subnet

------------------------------ ------------------------------ ----------------

e1000g0 192.168.8.12 192.168.8.0

e1000g1 10.10.10.12 10.10.10.0

Interface information for node "node1"

Interface Name IP Address Subnet

------------------------------ ------------------------------ ----------------

e1000g0 192.168.8.11 192.168.8.0

e1000g1 10.10.10.11 10.10.10.0

Check: Node connectivity of subnet "192.168.8.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

node2:e1000g0 node1:e1000g0 yes

Result: Node connectivity check passed for subnet "192.168.8.0" with node(s) node2,node1.

Check: Node connectivity of subnet "10.10.10.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

node2:e1000g1 node1:e1000g1 yes

Result: Node connectivity check passed for subnet "10.10.10.0" with node(s) node2,node1.

Suitable interfaces for the private interconnect on subnet "192.168.8.0":

node2 e1000g0:192.168.8.12

node1 e1000g0:192.168.8.11

Suitable interfaces for the private interconnect on subnet "10.10.10.0":

node2 e1000g1:10.10.10.12

node1 e1000g1:10.10.10.11

ERROR:

Could not find a suitable set of interfaces for VIPs.

Result: Node connectivity check failed.

--- The VIP network check failed

Checking system requirements for 'crs'...

Check: Total memory

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

node2 1.76GB (1843200KB) 512MB (524288KB) passed

node1 1.76GB (1843200KB) 512MB (524288KB) passed

Result: Total memory check passed.

Check: Free disk space in "/tmp" dir

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

node2 3GB (3150148KB) 400MB (409600KB) passed

node1 2.74GB (2875128KB) 400MB (409600KB) passed

Result: Free disk space check passed.

Check: Swap space

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

node2 2GB (2096476KB) 512MB (524288KB) passed

node1 2GB (2096476KB) 512MB (524288KB) passed

Result: Swap space check passed.

Check: System architecture

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

node2 64-bit 64-bit passed

node1 64-bit 64-bit passed

Result: System architecture check passed.

Check: Operating system version

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

node2 SunOS 5.10 SunOS 5.10 passed

node1 SunOS 5.10 SunOS 5.10 passed

Result: Operating system version check passed.

Check: Operating system patch for "118345-03"

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

node2 unknown 118345-03 failed

node1 unknown 118345-03 failed

Result: Operating system patch check failed for "118345-03".

Check: Operating system patch for "119961-01"

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

node2 119961-06 119961-01 passed

node1 119961-06 119961-01 passed

Result: Operating system patch check passed for "119961-01".

Check: Operating system patch for "117837-05"

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

node2 unknown 117837-05 failed

node1 unknown 117837-05 failed

Result: Operating system patch check failed for "117837-05".

Check: Operating system patch for "117846-08"

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

node2 unknown 117846-08 failed

node1 unknown 117846-08 failed

Result: Operating system patch check failed for "117846-08".

Check: Operating system patch for "118682-01"

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

node2 unknown 118682-01 failed

node1 unknown 118682-01 failed

Result: Operating system patch check failed for "118682-01".

--- The OS patch checks failed

Check: Group existence for "dba"

Node Name Status Comment

------------ ------------------------ ------------------------

node2 exists passed

node1 exists passed

Result: Group existence check passed for "dba".

Check: Group existence for "oinstall"

Node Name Status Comment

------------ ------------------------ ------------------------

node2 exists passed

node1 exists passed

Result: Group existence check passed for "oinstall".

Check: User existence for "oracle"

Node Name Status Comment

------------ ------------------------ ------------------------

node2 exists passed

node1 exists passed

Result: User existence check passed for "oracle".

Check: User existence for "nobody"

Node Name Status Comment

------------ ------------------------ ------------------------

node2 exists passed

node1 exists passed

Result: User existence check passed for "nobody".

System requirement failed for 'crs'

Pre-check for cluster services setup was unsuccessful on all the nodes.

In the system environment checks above, the VIP network check failed.
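The verbose cluvfy output is long, and the few failures are easy to miss among the passed checks. A minimal sketch, assuming the output has been saved to a file (the path /tmp/cluvfy_pre.log below is hypothetical) and that, as in the log above, each check ends with a line beginning `Result:`; both helper names are hypothetical.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helpers) for summarising a saved cluvfy log.
# Assumption: as in the output above, each check ends with a "Result:" line.

# Print only the "Result:" lines that report a failure.
cluvfy_failures() {
    grep '^Result: .*failed'
}

# Print just the IDs of the OS patches reported as failed.
cluvfy_missing_patches() {
    sed -n 's/^Result: Operating system patch check failed for "\([^"]*\)".*/\1/p'
}

# Typical use (log path is hypothetical):
#   ./runcluvfy.sh stage -pre crsinst -n node1,node2 -verbose > /tmp/cluvfy_pre.log 2>&1
#   cluvfy_failures < /tmp/cluvfy_pre.log
#   cluvfy_missing_patches < /tmp/cluvfy_pre.log

# Excerpt of the log above, used here as sample input.
sample_log='Result: Node connectivity check failed.
Result: Total memory check passed.
Result: Operating system patch check failed for "118345-03".
Result: Operating system patch check passed for "119961-01".
Result: Operating system patch check failed for "117837-05".'

printf '%s\n' "$sample_log" | cluvfy_failures
printf '%s\n' "$sample_log" | cluvfy_missing_patches
```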

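If the VIP network was not configured before running the check, it can be configured as follows; if it has already been configured, this check does not fail.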

Configure the VIP network (node1):

[root@node1:/]# ifconfig e1000g0:1 plumb up
[root@node1:/]# ifconfig e1000g0:1 192.168.8.13 netmask 255.255.255.0
[root@node1:/]# ifconfig -a
lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1
        inet 127.0.0.1 netmask ff000000
e1000g0: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2
        inet 192.168.8.11 netmask ffffff00 broadcast 192.168.8.255
        ether 8:0:27:28:b1:8c
e1000g0:1: flags=4001000842<BROADCAST,RUNNING,MULTICAST,IPv4,DUPLICATE> mtu 1500 index 2
        inet 192.168.8.13 netmask ffffff00 broadcast 192.168.8.255
e1000g1: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 3
        inet 10.10.10.11 netmask ffffff00 broadcast 10.10.10.255
        ether 8:0:27:6e:16:1

Configure the VIP network (node2):

[root@node2:/]# ifconfig e1000g0:1 plumb up
[root@node2:/]# ifconfig e1000g0:1 192.168.8.14 netmask 255.255.255.0
[root@node2:/]# ifconfig -a
lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1
        inet 127.0.0.1 netmask ff000000
e1000g0: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2
        inet 192.168.8.12 netmask ffffff00 broadcast 192.168.8.255
        ether 8:0:27:1f:bf:4c
e1000g0:1: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2
        inet 192.168.8.14 netmask ffffff00 broadcast 192.168.8.255
e1000g1: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 3
        inet 10.10.10.12 netmask ffffff00 broadcast 10.10.10.255
        ether 8:0:27:a5:2c:db

In the checks above, some required OS patches are not installed. They can be downloaded from Oracle's official website; because this machine is only a test environment, they are not installed for now.
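The patch results above also show that cluvfy accepted a higher revision of the same patch (119961-06 satisfied the 119961-01 requirement). The sketch below applies that same rule to the output of Solaris `showrev -p`, so each node can be checked after patches are downloaded. It assumes each installed patch appears on a line starting with `Patch: NNNNNN-RR`, and that "same patch ID, revision greater than or equal" is the right comparison, which is inferred from the 119961 result rather than documented here. `patch_ok` and `check_patches` are hypothetical helpers and the sample inventory is made up.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helpers): decide whether a required Solaris
# patch is satisfied by "showrev -p" output read on stdin.
# Assumption: an installed revision >= the required revision of the same
# patch ID counts as satisfied, as cluvfy did for 119961-06 vs 119961-01.
patch_ok() {
    # $1: required patch in NNNNNN-RR form, e.g. 118345-03
    awk -v req="$1" '
        BEGIN { split(req, r, "-"); found = 0 }
        $1 == "Patch:" {
            split($2, p, "-")
            if (p[1] == r[1] && p[2] + 0 >= r[2] + 0) found = 1
        }
        END { if (found) exit 0; exit 1 }'
}

# Report each required patch as "ok" or "MISSING".
check_patches() {
    # $1: space-separated list of required patches; stdin: showrev -p output
    inventory=$(cat)
    for req in $1; do
        if printf '%s\n' "$inventory" | patch_ok "$req"; then
            echo "$req ok"
        else
            echo "$req MISSING"
        fi
    done
}

# The five patches cluvfy checked above.
REQUIRED="118345-03 119961-01 117837-05 117846-08 118682-01"

# Made-up inventory for illustration; on a node use:
#   showrev -p | check_patches "$REQUIRED"
sample_showrev='Patch: 119961-06 Obsoletes: Requires: Incompatibles: Packages:
Patch: 118345-02 Obsoletes: Requires: Incompatibles: Packages:'

printf '%s\n' "$sample_showrev" | check_patches "$REQUIRED"
```

With the sample inventory, 118345-02 is reported as not satisfying 118345-03 because its revision is lower, while 119961-06 satisfies 119961-01.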
