log_format main_cookie '$remote_addr\t$host\t$time_local\t$status\t$request_method\t$uri\t$query_string\t$body_bytes_sent\t$http_referer\t$http_user_ag

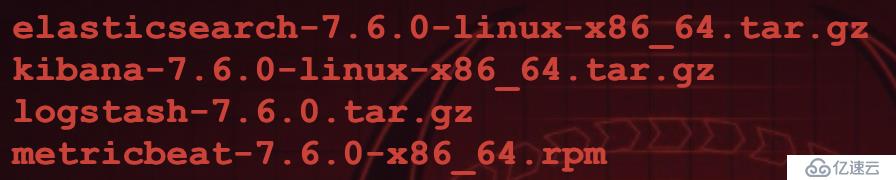

ent\t$bytes_sent\t$request_time\t$upstream_response_time\t$aoji_uuid\t$aoji_session_uuid';软件包如下:

elasticsearch-7.6.0-linux-x86_64.tar.gz, extracted into the /data/ directory:

tar xf elasticsearch-7.6.0-linux-x86_64.tar.gz && mv elasticsearch-7.6.0 /data/

The configuration files are in /data/elasticsearch-7.6.0/config. Edit elasticsearch.yml:

node.name: es-1

network.host: 172.31.0.14

http.port: 9200

xpack.security.enabled: true

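discovery.type: single-node

Switch to the admin user and start Elasticsearch as a daemon:

su - admin

/data/elasticsearch-7.6.0/bin/elasticsearch -d

In the elasticsearch-7.6.0/bin/ directory, run elasticsearch-setup-passwords to set the passwords (the default account is elastic):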

./elasticsearch-setup-passwords interactive
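Once the passwords are set, it can be worth confirming that Elasticsearch accepts them before moving on. The following is only a minimal sketch using the Python standard library: it assumes the host and port from elasticsearch.yml and queries the `_cluster/health` endpoint with HTTP Basic authentication. The function names `build_health_request` and `cluster_status` are illustrative, not part of any Elastic tooling.

```python
import base64
import json
import urllib.request


def build_health_request(base_url, user, password):
    """Build a GET request for /_cluster/health with an HTTP Basic auth header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req = urllib.request.Request(f"{base_url}/_cluster/health")
    req.add_header("Authorization", f"Basic {token}")
    return req


def cluster_status(base_url, user, password, timeout=5):
    """Return the cluster status string reported by Elasticsearch.

    A rejected password surfaces as urllib.error.HTTPError with code 401.
    """
    req = build_health_request(base_url, user, password)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["status"]
```

Calling `cluster_status("http://172.31.0.14:9200", "elastic", "<your password>")` from the server should return a status such as green or yellow if the credentials are valid.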

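elasticsearch-setup-passwords sets passwords not only for elasticsearch but also for the kibana and logstash accounts; it is best to give them all the same password.

Next, install Logstash:

tar xf logstash-7.6.0.tar.gz && mv logstash-7.6.0 /data/logstash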

Edit logstash.yml as follows:

node.name: node-1

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: logstash_system

xpack.monitoring.elasticsearch.password: 123456

xpack.monitoring.elasticsearch.hosts: ["http://172.31.0.14:9200"]

Create nginx_access.conf in the config directory with the following content:

input {
  file {
    path => [ "/data/weblog/yourdomains/access.log" ]
    start_position => "beginning"
    ignore_older => 0
  }
}

filter {
  grok {
    match => { "message" => "%{IPV4:client_ip}\t%{HOSTNAME:domain}\t%{HTTPDATE:timestamp}\t%{INT:status}\t(%{WORD:request_method}|-)\t(%{URIPATH:uri}|-|)\t(?:%{DATA:query_string}|-)\t(?:%{BASE10NUM:body_bytes_sent}|-)\t%{DATA:referrer}\t%{DATA:agent}\t%{INT:bytes_sent}\t%{BASE16FLOAT:request_time}\t%{BASE16FLOAT:upstream_response_time}" }
  }
  geoip {
    source => "client_ip"
    target => "geoip"
    database => "/data/logstash/GeoLite2-City.mmdb"
    add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
    add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
  }

  mutate {
    convert => [ "[geoip][coordinates]", "float" ]
    convert => [ "status", "integer" ]
    convert => [ "bytes_sent", "integer" ]
    replace => { "type" => "nginx_access" }
    remove_field => "message"
  }
}

output {
  elasticsearch {
    hosts => ["172.31.0.14:9200"]
    index => "logstash-nginx-access-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "123456"
  }
  stdout { codec => rubydebug }
}

For an explanation of these settings, see the official documentation or search Google.
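To sanity-check the field layout before feeding real logs to Logstash, a log line can be split by hand. The sketch below is not grok; it is a plain tab split in Python that assumes every line follows the main_cookie log_format exactly. The first thirteen field names are taken from the grok captures, while `aoji_uuid` and `aoji_session_uuid` are simply the nginx variable names (the grok pattern does not capture them). The sample line is made up for illustration.

```python
# Field order follows the main_cookie log_format; the names mirror the grok captures.
FIELDS = [
    "client_ip", "domain", "timestamp", "status", "request_method", "uri",
    "query_string", "body_bytes_sent", "referrer", "agent", "bytes_sent",
    "request_time", "upstream_response_time", "aoji_uuid", "aoji_session_uuid",
]


def parse_access_line(line):
    """Split one tab-separated access log line into a dict keyed by field name."""
    values = line.rstrip("\n").split("\t")
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    record = dict(zip(FIELDS, values))
    # Convert the numeric fields, as a mutate/convert step would in Logstash.
    for key in ("status", "bytes_sent"):
        record[key] = int(record[key])
    return record


# A made-up sample line, not taken from a real log.
sample = "\t".join([
    "203.0.113.7", "example.com", "18/Feb/2020:10:00:00 +0800", "200", "GET",
    "/index.html", "-", "512", "-", "Mozilla/5.0", "730", "0.005", "0.004",
    "-", "-",
])
record = parse_access_line(sample)
print(record["client_ip"], record["status"], record["uri"])
```

A line that raises ValueError here has a different number of tab-separated fields than the log_format defines, so it is worth checking against the grok pattern as well.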

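Next is the GeoIP database that the Logstash configuration uses to resolve IP addresses. It is a free IP data source used to look up where client IPs are located. The official site is MAXMIND. Unpack the archive and move the database into /data/logstash: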

tar xf GeoLite2-City_20200218.tar.gz

cd GeoLite2-City_20200218 && mv GeoLite2-City.mmdb /data/logstash

Test the Logstash configuration file with Logstash's own built-in check:

#./bin/logstash -t -f config/nginx_access.conf

Configuration OKcd /data/logstash/

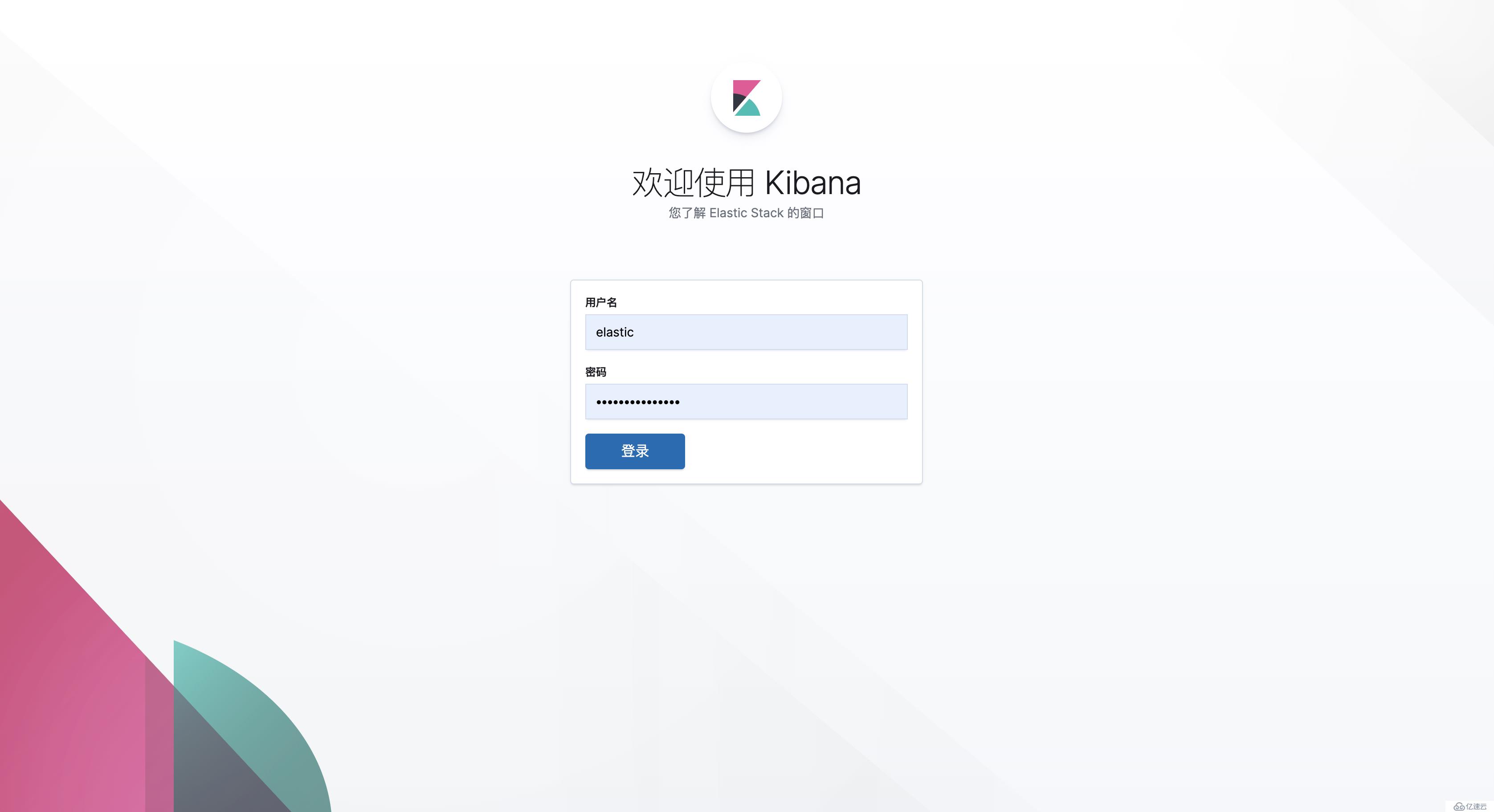

nohup /data/logstash/bin/logstash -f config/nginx_access.conf &tar xf kibana-7.6.0-linux-x86_64.tar.gz && mv kibana-7.6.0 /data/修改配置文件kibana.yml,内容如下:

server.port: 5601

server.host: "172.31.0.14"

elasticsearch.hosts: ["http://172.31.0.14:9200"]

elasticsearch.username: "elastic"

elasticsearch.password: "123456"

i18n.locale: "zh-CN"

Start Kibana in the background:

nohup /data/kibana-7.6.0/bin/kibana &

Then put nginx in front of Kibana as a reverse proxy:

upstream yourdomain {
    server 172.31.0.14:5601;
}

server {
    listen 80;
    server_name yourdomain;
    return 302 https://$server_name$request_uri;
}

server {

listen 443 ssl;

server_name yourdomain;

ssl_session_timeout 5m;

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

ssl_ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE:ECDH:AES:HIGH:!NULL:!aNULL:!MD5:!ADH:!RC4;

ssl_prefer_server_ciphers on;

ssl_certificate /data/ssl/yourdomain.cer;

ssl_certificate_key /data/ssl/yourdomain.key;

ssl_trusted_certificate /data/ssl/yourdomain.ca.cer;

location / {

proxy_pass http:// yourdomain;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_next_upstream http_502 http_504 http_404 error timeout invalid_header;

}

access_log /data/weblog/yourdomain/access.log main;

error_log /data/weblog/yourdomain/error.log;

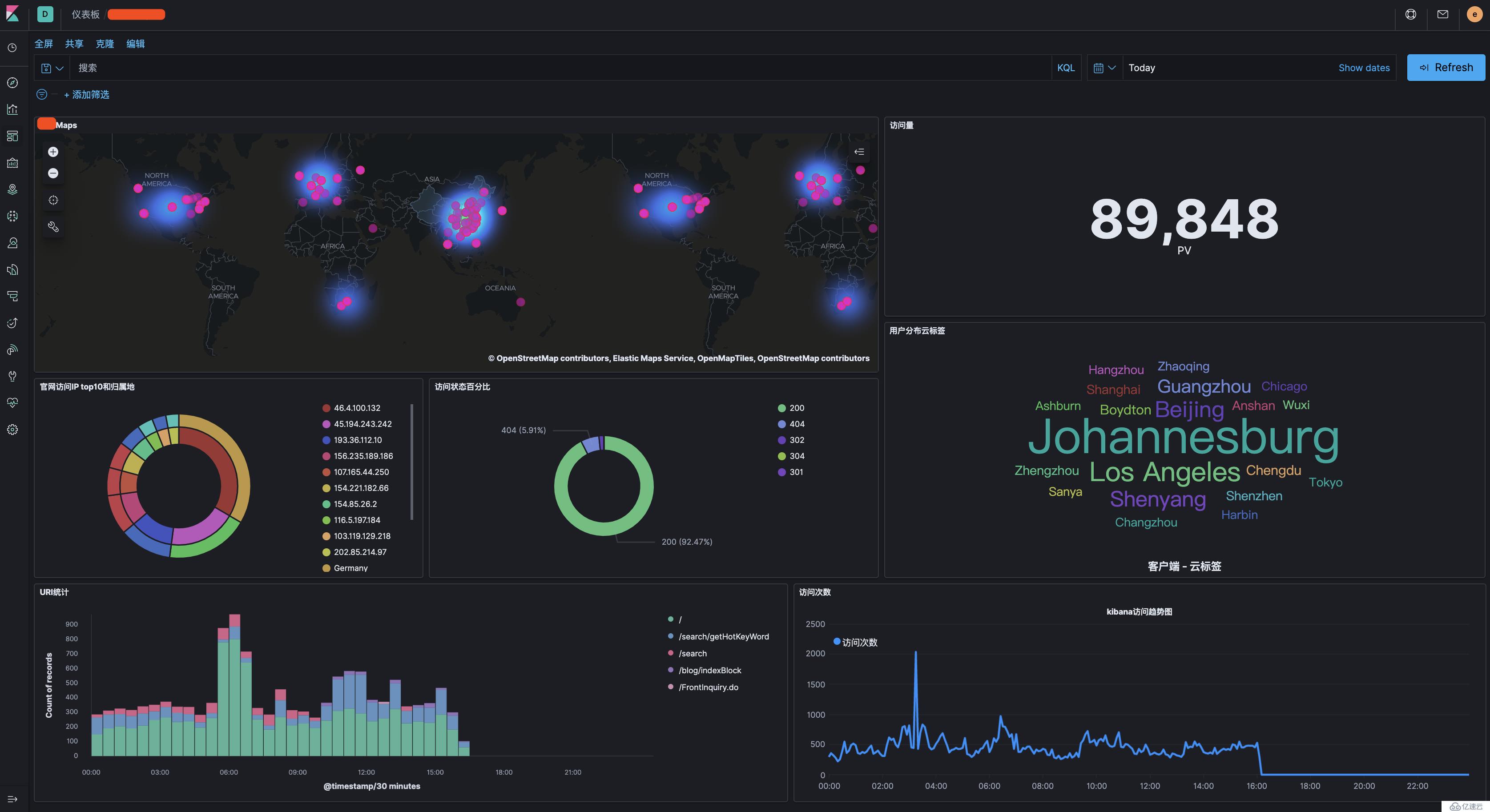

} 后续Kibana中添加索引,配置可视化图形都很简单了,官方文档比较全面自行发挥配置即可,
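When choosing the index pattern in Kibana, it helps to know which daily index an event ends up in. The Logstash output writes to logstash-nginx-access-%{+YYYY.MM.dd}, and the sketch below assumes the date part is taken from the event's @timestamp in UTC, so events logged before 08:00 on a server running at UTC+8 land in the previous day's index. The helper name `daily_index_name` is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def daily_index_name(ts, prefix="logstash-nginx-access-"):
    """Return the daily index name for an aware datetime, using its UTC date."""
    return prefix + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")


# 07:30 on 18 Feb 2020 at UTC+8 is 23:30 on 17 Feb in UTC.
utc_plus_8 = timezone(timedelta(hours=8))
print(daily_index_name(datetime(2020, 2, 18, 7, 30, tzinfo=utc_plus_8)))
# prints logstash-nginx-access-2020.02.17
```

An index pattern such as logstash-nginx-access-* covers all of these daily indices regardless of where the day boundary falls.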

That is the full production configuration. My own expertise is limited, so if anything here is misconfigured, please point it out. Thank you.
