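How do you integrate multiple data sources with SpringBoot + Mybatis plus in practice? Many people without prior experience get stuck on this. This article summarizes why the problems occur and how to solve them, and hopefully it will help you solve them too.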

The SpringBoot version is 1.5.10.RELEASE and the Mybatis plus version is 2.1.8.

spring:
  aop:
    proxy-target-class: true
    auto: true
  datasource:
    druid:
      # Database 1
      db1:
        url: jdbc:mysql://localhost:3306/db1?useUnicode=true&characterEncoding=utf8&autoReconnect=true&zeroDateTimeBehavior=convertToNull&transformedBitIsBoolean=true
        username: root
        password: root
        driver-class-name: com.mysql.jdbc.Driver
        initialSize: 5
        minIdle: 5
        maxActive: 20
      # Database 2
      db2:
        url: jdbc:mysql://localhost:3306/db2?useUnicode=true&characterEncoding=utf8&autoReconnect=true&zeroDateTimeBehavior=convertToNull&transformedBitIsBoolean=true
        username: root
        password: root
        driver-class-name: com.mysql.jdbc.Driver
        initialSize: 5
        minIdle: 5
        maxActive: 20

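import com.alibaba.druid.spring.boot.autoconfigure.DruidDataSourceBuilder;
import com.baomidou.mybatisplus.MybatisConfiguration;
import com.baomidou.mybatisplus.entity.GlobalConfiguration;
import com.baomidou.mybatisplus.mapper.LogicSqlInjector;
import com.baomidou.mybatisplus.plugins.PaginationInterceptor;
import com.baomidou.mybatisplus.plugins.PerformanceInterceptor;
import com.baomidou.mybatisplus.spring.MybatisSqlSessionFactoryBean;
import org.apache.ibatis.plugin.Interceptor;
import org.apache.ibatis.session.SqlSessionFactory;
import org.apache.ibatis.type.JdbcType;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;

import javax.sql.DataSource;
import java.util.HashMap;
import java.util.Map;

@Configuration
@MapperScan({"com.warm.system.mapper*"})
public class MybatisPlusConfig {

    /**
     * mybatis-plus pagination plugin<br>
     * Docs: http://mp.baomidou.com<br>
     */
    @Bean
    public PaginationInterceptor paginationInterceptor() {
        PaginationInterceptor paginationInterceptor = new PaginationInterceptor();
        //paginationInterceptor.setLocalPage(true); // enable PageHelper support
        return paginationInterceptor;
    }

    /**
     * mybatis-plus SQL performance plugin (can be disabled in production)
     */
    @Bean
    public PerformanceInterceptor performanceInterceptor() {
        return new PerformanceInterceptor();
    }

    @Bean(name = "db1")
    @ConfigurationProperties(prefix = "spring.datasource.druid.db1")
    public DataSource db1() {
        return DruidDataSourceBuilder.create().build();
    }

    @Bean(name = "db2")
    @ConfigurationProperties(prefix = "spring.datasource.druid.db2")
    public DataSource db2() {
        return DruidDataSourceBuilder.create().build();
    }

    /**
     * Dynamic (routing) data source configuration
     * @return a DynamicDataSource that routes to db1 or db2
     */
    @Bean
    @Primary
    public DataSource multipleDataSource(@Qualifier("db1") DataSource db1,
                                         @Qualifier("db2") DataSource db2) {
        DynamicDataSource dynamicDataSource = new DynamicDataSource();
        Map<Object, Object> targetDataSources = new HashMap<>();
        targetDataSources.put(DBTypeEnum.db1.getValue(), db1);
        targetDataSources.put(DBTypeEnum.db2.getValue(), db2);
        dynamicDataSource.setTargetDataSources(targetDataSources);
        // Used when no key is bound to the current thread
        dynamicDataSource.setDefaultTargetDataSource(db1);
        return dynamicDataSource;
    }

    @Bean("sqlSessionFactory")
    public SqlSessionFactory sqlSessionFactory() throws Exception {
        MybatisSqlSessionFactoryBean sqlSessionFactory = new MybatisSqlSessionFactoryBean();
        // Calls inside a @Configuration class go through its proxy, so these return the singleton beans
        sqlSessionFactory.setDataSource(multipleDataSource(db1(), db2()));
        //sqlSessionFactory.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:/mapper/*/*Mapper.xml"));
        MybatisConfiguration configuration = new MybatisConfiguration();
        //configuration.setDefaultScriptingLanguage(MybatisXMLLanguageDriver.class);
        configuration.setJdbcTypeForNull(JdbcType.NULL);
        configuration.setMapUnderscoreToCamelCase(true);
        configuration.setCacheEnabled(false);
        sqlSessionFactory.setConfiguration(configuration);
        sqlSessionFactory.setPlugins(new Interceptor[]{
                //PerformanceInterceptor(),OptimisticLockerInterceptor()
                paginationInterceptor() // add pagination support
        });
        sqlSessionFactory.setGlobalConfig(globalConfiguration());
        return sqlSessionFactory.getObject();
    }

    @Bean
    public GlobalConfiguration globalConfiguration() {
        GlobalConfiguration conf = new GlobalConfiguration(new LogicSqlInjector());
        conf.setLogicDeleteValue("-1");       // value marking a logically deleted row
        conf.setLogicNotDeleteValue("1");     // value marking a live row
        conf.setIdType(0);                    // 0 = database auto-increment ID
        conf.setMetaObjectHandler(new MyMetaObjectHandler()); // the project's own fill handler (not shown)
        conf.setDbColumnUnderline(true);      // underscore column names <-> camelCase fields
        conf.setRefresh(true);                // refresh mappers, handy while debugging
        return conf;
    }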

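}

import lombok.extern.slf4j.Slf4j;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.aspectj.lang.annotation.Pointcut;
import org.springframework.core.annotation.Order;
import org.springframework.stereotype.Component;

@Component
@Aspect
@Order(-100) // Runs before the transaction advice so the key is set before a connection is obtained; a smaller Order value means higher precedence
@Slf4j
public class DataSourceSwitchAspect {

    @Pointcut("execution(* com.warm.system.service.db1..*.*(..))")
    private void db1Aspect() {
    }

    @Pointcut("execution(* com.warm.system.service.db2..*.*(..))")
    private void db2Aspect() {
    }

    @Before("db1Aspect()")
    public void db1() {
        log.info("Switching to the db1 data source...");
        DbContextHolder.setDbType(DBTypeEnum.db1);
    }

    @Before("db2Aspect()")
    public void db2() {
        log.info("Switching to the db2 data source...");
        DbContextHolder.setDbType(DBTypeEnum.db2);
    }
}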
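The aspect above only sets the key in `@Before` advice and never clears it. As a result, a pooled thread keeps whatever key it saw last. Outside a transaction, after a db1 service calls a db2 service, the rest of the db1 method also stays on db2.

A common alternative is to bind the key around the call and restore the previous key in a `finally` block. The following is a plain-Java sketch of that pattern under our own assumptions. The `DbKeyHolder` class and its `withKey` helper are hypothetical and not part of the project. In Spring, the same body would typically live in an `@Around` advice that calls `ProceedingJoinPoint.proceed()`.

```java
import java.util.function.Supplier;

// Hypothetical stand-in for DbContextHolder that stores plain String keys.
final class DbKeyHolder {
    private static final ThreadLocal<String> KEY = new ThreadLocal<>();

    private DbKeyHolder() {
    }

    static String get() {
        return KEY.get();
    }

    // Binds `key` while `action` runs, then restores the key that was bound before
    // (or clears it), even if `action` throws.
    static <T> T withKey(String key, Supplier<T> action) {
        String previous = KEY.get();
        KEY.set(key);
        try {
            return action.get();
        } finally {
            if (previous == null) {
                KEY.remove(); // nothing was bound before: leave the (possibly pooled) thread clean
            } else {
                KEY.set(previous); // back to the outer call's key
            }
        }
    }

    public static void main(String[] args) {
        // A "db1" call that makes a nested "db2" call, then reads its own key again.
        String seen = withKey("db1", () -> withKey("db2", DbKeyHolder::get) + " then " + get());
        System.out.println(seen);  // db2 then db1
        System.out.println(get()); // null
    }
}
```

With this pattern, a nested call hands control back to the outer key. The key is removed entirely once the outermost call finishes.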

public class DbContextHolder {

    private static final ThreadLocal<String> contextHolder = new ThreadLocal<>();

    /**
     * Set the data source for the current thread
     * @param dbTypeEnum the data source to use
     */
    public static void setDbType(DBTypeEnum dbTypeEnum) {
        contextHolder.set(dbTypeEnum.getValue());
    }

    /**
     * Get the current data source
     * @return the data source key, or null if none is set on this thread
     */
    public static String getDbType() {
        return contextHolder.get();
    }

    /**
     * Clear the context data
     */
    public static void clearDbType() {
        contextHolder.remove();
    }
}
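Because `DbContextHolder` keeps the key in a `ThreadLocal`, the key is visible only on the thread that set it. Work handed to another thread (for example, via a thread pool or an `@Async` method) starts without a key. In that case `determineCurrentLookupKey()` returns null, and the routing data source falls back to its default (db1 here).

The small plain-Java demo below illustrates this. The `ThreadLocalVisibilityDemo` class is hypothetical. The calling thread sees `db2`, and the pool thread sees `null`.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo: a ThreadLocal value is visible only on the thread that set it.
final class ThreadLocalVisibilityDemo {
    private static final ThreadLocal<String> DB_KEY = new ThreadLocal<>();

    private ThreadLocalVisibilityDemo() {
    }

    // Returns {key seen by the calling thread, key seen by a pool thread}.
    static String[] keysSeenByBothThreads() {
        DB_KEY.set("db2");
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Callable<String> readKey = DB_KEY::get; // executed on the pool thread
            Future<String> fromWorker = pool.submit(readKey);
            return new String[] {DB_KEY.get(), fromWorker.get()};
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
            DB_KEY.remove(); // clean up the calling thread
        }
    }

    public static void main(String[] args) {
        String[] keys = keysSeenByBothThreads();
        System.out.println("caller: " + keys[0] + ", pool thread: " + keys[1]);
    }
}
```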

public enum DBTypeEnum {
    db1("db1"), db2("db2");

    private final String value;

    DBTypeEnum(String value) {
        this.value = value;
    }

    public String getValue() {
        return value;
    }
}

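import org.springframework.jdbc.datasource.lookup.AbstractRoutingDataSource;

public class DynamicDataSource extends AbstractRoutingDataSource {

    /**
     * Determine which data source the current call should use
     * @return the lookup key bound to the current thread
     */
    @Override
    protected Object determineCurrentLookupKey() {
        return DbContextHolder.getDbType();
    }
}

OK! Let's write a unit test to verify it: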

@SpringBootTest

@RunWith(SpringJUnit4ClassRunner.class)

public class DataTest {

@Autowired

private UserService userService;

@Autowired

private OrderService orderService;

@Test

public void test() {

userService.getUserList().stream().forEach(item -> System.out.println(item));

orderService.getOrderList().stream().forEach(item -> System.out.println(item));

}

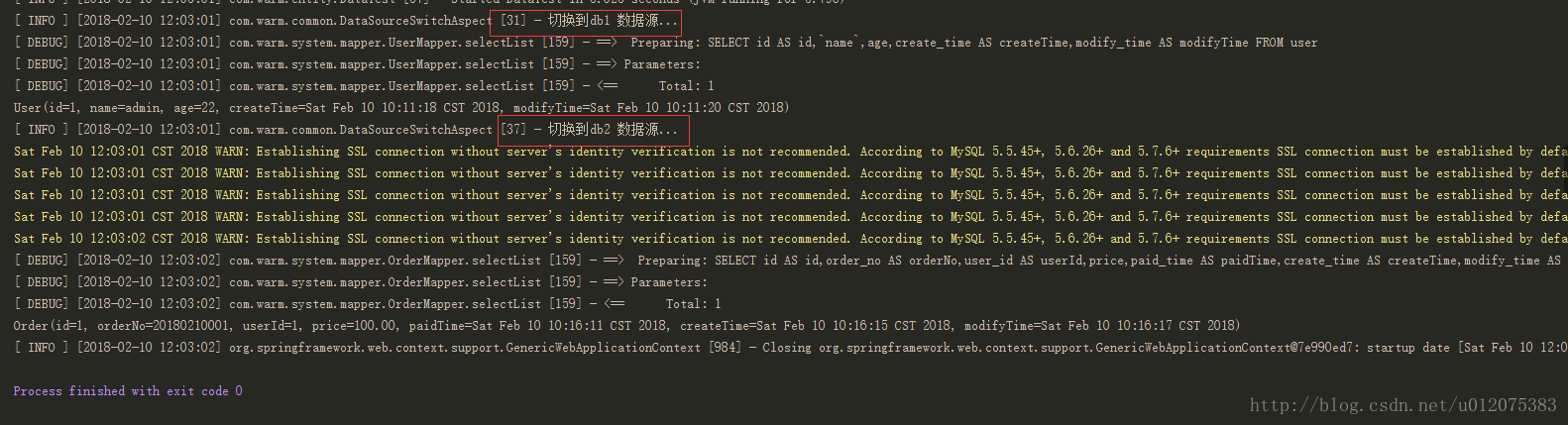

}如图所示,证明数据源能动态切换了。
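The switch works because `AbstractRoutingDataSource` calls `determineCurrentLookupKey()` each time a connection is requested. It then looks the key up in the map passed to `setTargetDataSources`.

Below is a simplified plain-Java model of that lookup, not Spring's source. The `RoutingModel` class is ours, and strings stand in for `DataSource` objects. As we understand Spring's rule, a null key uses the default target. An unknown key also uses the default while lenient fallback is on, which is the default setting (see `setLenientFallback`).

One practical consequence is that the keys stored by `DbContextHolder` must match the map keys exactly. Here both come from `DBTypeEnum.getValue()`. A mismatched key would silently route to db1 instead of failing.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified model (not Spring's source) of the lookup a routing data source performs on
// each getConnection(): current key -> target, with a fallback to the default target.
final class RoutingModel {
    private final Map<Object, String> targets;
    private final String defaultTarget;
    private final boolean lenientFallback;

    RoutingModel(Map<Object, String> targets, String defaultTarget, boolean lenientFallback) {
        this.targets = new HashMap<>(targets);
        this.defaultTarget = defaultTarget;
        this.lenientFallback = lenientFallback;
    }

    String resolve(Object lookupKey) {
        String target = targets.get(lookupKey);
        // A null key always uses the default; an unknown key does so only with lenient fallback.
        if (target == null && (lenientFallback || lookupKey == null)) {
            target = defaultTarget;
        }
        if (target == null) {
            throw new IllegalStateException("Cannot determine target for lookup key [" + lookupKey + "]");
        }
        return target;
    }

    public static void main(String[] args) {
        Map<Object, String> targets = new HashMap<>();
        targets.put("db1", "db1 pool");
        targets.put("db2", "db2 pool");
        RoutingModel routing = new RoutingModel(targets, "db1 pool", true);
        System.out.println(routing.resolve("db2")); // db2 pool
        System.out.println(routing.resolve(null));  // db1 pool (no key bound)
        System.out.println(routing.resolve("DB2")); // db1 pool (unknown key, silent fallback)
    }
}
```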

See GitHub for the full project structure and code.

Pitfalls:

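Calling the service methods Mybatis plus provides (those inherited from `ServiceImpl`) directly does not trigger the AOP advice, so the data source is not switched. The most likely reason is that the `execution(...)` pointcuts above select methods declared in the `com.warm.system.service` packages, whereas the inherited methods are declared in Mybatis plus's own package. Solution: wrap the call in your own service layer. When you call your own service method, the AOP advice takes effect as expected, as shown below: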

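import com.baomidou.mybatisplus.service.impl.ServiceImpl;
import org.springframework.stereotype.Service;

import java.util.List;

@Service
public class UserServiceImpl extends ServiceImpl<UserMapper, User> implements UserService {

    @Override
    public List<User> getUserList() {
        // Wraps the inherited selectList so the call goes through a method declared in our own service package
        return selectList(null);
    }
}

The Mybatis plus settings defined in application.yml do not take effect either, for example: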

#MyBatis
mybatis-plus:
  mapper-locations: classpath:/mapper/*/*Mapper.xml
  # Entity scanning; separate multiple packages with commas or semicolons
  typeAliasesPackage: com.jinhuatuo.edu.sys.entity
  global-config:
    # Primary key type: 0 = database auto-increment ID, 1 = user-supplied ID, 2 = globally unique ID (numeric), 3 = globally unique ID (UUID)
    id-type: 0
    # Field strategy: 0 = no check, 1 = non-NULL check, 2 = non-empty check
    field-strategy: 2
    # Underscore / camelCase conversion
    db-column-underline: true
    # Refresh mappers, a great debugging aid
    refresh-mapper: true
    # Uppercase-with-underscore conversion for the database
    #capital-mode: true
    # Key generator implementation
    #key-generator: com.baomidou.springboot.xxx
    # Logical delete settings
    #logic-delete-value: 0
    #logic-not-delete-value: 1
    # Custom field fill handler
    meta-object-handler: com.jinhuatuo.edu.config.mybatis.MyMetaObjectHandler
    # Custom SQL injector
    #sql-injector: com.baomidou.springboot.xxx
  configuration:
    map-underscore-to-camel-case: true
    cache-enabled: false

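Solution: configure all of these settings in code in the MybatisPlusConfig class instead, using the code above as a reference. The pagination plugin must be registered the same way; otherwise the total count for paged list queries cannot be retrieved.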
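One plausible explanation, as far as we can tell, concerns how the Mybatis plus Spring Boot starter creates its `SqlSessionFactory`. It builds one only when none exists yet, through a `@ConditionalOnMissingBean` condition as in mybatis-spring-boot-starter, and it is that auto-configured factory which applies the `mybatis-plus.*` properties. Since `MybatisPlusConfig` defines its own `sqlSessionFactory` bean, the auto-configured factory, and with it the property binding, is skipped.

The toy registry below is a hypothetical illustration of that "create only if missing" rule, not Spring's API. It is keyed by name for brevity, whereas Spring's condition matches by type.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical toy registry (not Spring's API) illustrating "create only if missing".
final class ToyRegistry {
    private final Map<String, Object> beans = new HashMap<>();

    // A user-defined bean, like the sqlSessionFactory in MybatisPlusConfig.
    void register(String name, Object bean) {
        beans.put(name, bean);
    }

    // Stands in for an auto-configured bean guarded by @ConditionalOnMissingBean: the
    // factory (which would read the mybatis-plus.* properties) runs only if nothing is registered.
    void registerIfMissing(String name, Supplier<Object> factory) {
        beans.computeIfAbsent(name, ignored -> factory.get());
    }

    Object get(String name) {
        return beans.get(name);
    }

    public static void main(String[] args) {
        ToyRegistry registry = new ToyRegistry();
        registry.register("sqlSessionFactory", "factory built in MybatisPlusConfig");
        registry.registerIfMissing("sqlSessionFactory", () -> "factory built from mybatis-plus.* properties");
        System.out.println(registry.get("sqlSessionFactory")); // factory built in MybatisPlusConfig
    }
}
```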

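Also, even with the following in application.yml:

logging:
  level: debug

the console still does not print the SQL statements Mybatis executes. Solution: use a custom logging setup. For example, put a logging configuration file such as logback-spring.xml directly on the classpath; application.yml then needs no logging settings at all.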
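For reference, Spring Boot's `logging.level` property maps logger names to levels (for example `logging.level.com.warm: debug`). A bare `level: debug` names no logger, which may be why it had no visible effect.

Whichever way levels are configured, they are inherited along dotted logger names. As far as we know, Mybatis logs each mapper statement's SQL at DEBUG under a logger named after the statement id, which is the namespace plus the method name. A hypothetical example is `com.warm.system.mapper.UserMapper.selectList`. So a DEBUG level on `com.warm`, as the dev profile of the file below sets, is enough to make the SQL appear.

The plain-Java model below illustrates that inheritance rule. `LevelResolver` is hypothetical and ignores appenders and additivity.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified model of logback's level inheritance: a logger with no level of its own uses
// the level of its nearest configured ancestor in the dotted name, and finally the root level.
final class LevelResolver {
    private LevelResolver() {
    }

    static String effectiveLevel(String loggerName, Map<String, String> configured, String rootLevel) {
        String name = loggerName;
        while (true) {
            String level = configured.get(name);
            if (level != null) {
                return level;
            }
            int dot = name.lastIndexOf('.');
            if (dot < 0) {
                return rootLevel; // no configured ancestor
            }
            name = name.substring(0, dot); // move up one level: a.b.c -> a.b
        }
    }

    public static void main(String[] args) {
        Map<String, String> configured = new HashMap<>();
        configured.put("com.warm", "DEBUG");
        configured.put("org.springframework.boot", "INFO");
        // Hypothetical statement logger name for a mapper method
        System.out.println(effectiveLevel("com.warm.system.mapper.UserMapper.selectList", configured, "ERROR")); // DEBUG
        System.out.println(effectiveLevel("org.apache.ibatis.datasource.pooled.PooledDataSource", configured, "ERROR")); // ERROR
    }
}
```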

Reference logback-spring.xml configuration:

<?xml version="1.0" encoding="UTF-8"?>
<!-- scan: reload this file when it changes (logback's default is false); scanPeriod: how often to check -->
<configuration scan="true" scanPeriod="10 seconds">

    <!-- <include resource="org/springframework/boot/logging/logback/base.xml"/> -->

    <!-- Log directory and project name, read from the Spring environment.
         <property resource="application.yml"/> would parse the YAML file as a .properties file,
         so nested keys such as logging.path would not resolve. -->
    <springProperty scope="context" name="LOG_HOME" source="logging.path"/>
    <springProperty scope="context" name="PROJECT_NAME" source="spring.application.name"/>
    <!--<substitutionProperty name="CUR_NODE" value="${info.node}" />-->

    <!-- Connection settings for writing logs to a database -->
    <!-- <springProperty name="logDbPath" source="spring.datasource.one.url"/>
    <springProperty name="logDbDriver" source="spring.datasource.one.driver-class-name"/>
    <springProperty name="logDbUser" source="spring.datasource.one.username"/>
    <springProperty name="logDbPwd" source="spring.datasource.one.password"/> -->

    <!-- Write logs to a file -->
    <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <append>true</append>
        <encoder>
            <pattern>[ %-5level] [%date{yyyy-MM-dd HH:mm:ss}] %logger{96} [%line] - %msg%n</pattern>
            <charset>utf-8</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>50 MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Keep at most 30 days of logs -->
            <maxHistory>30</maxHistory>
        </rollingPolicy>
    </appender>

    <!-- Write error logs to a separate file -->
    <appender name="file_error" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <append>true</append>
        <encoder>
            <pattern>[ %-5level] [%date{yyyy-MM-dd HH:mm:ss}] %logger{96} [%line] - %msg%n</pattern>
            <charset>utf-8</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-error-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>50 MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Keep at most 30 days of logs -->
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>ERROR</level>
            <!-- <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY </onMismatch> -->
        </filter>
    </appender>

    <!-- Write logs to the console -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>[ %-5level] [%date{yyyy-MM-dd HH:mm:ss}] %logger{96} [%line] - %msg%n</pattern>
            <!--<charset>utf-8</charset>-->
        </encoder>
    </appender>

    <!-- Write logs to a database -->
    <!-- <appender name="DB-CLASSIC-MYSQL-POOL" class="ch.qos.logback.classic.db.DBAppender">
        <connectionSource class="ch.qos.logback.core.db.DataSourceConnectionSource">
            <dataSource class="org.springframework.jdbc.datasource.DriverManagerDataSource">
                <driverClassName>${logDbDriver}</driverClassName>
                <url>${logDbPath}</url>
                <username>${logDbUser}</username>
                <password>${logDbPwd}</password>
            </dataSource>
        </connectionSource>
    </appender> -->

    <!-- Spring extension: per-environment (profile) logging configuration -->
    <springProfile name="dev">
        <!-- <logger name="sand.dao" level="DEBUG"/> -->
        <!-- <logger name="org.springframework.web" level="INFO"/> -->
        <logger name="org.springboot.sample" level="TRACE" />
        <logger name="org.springframework.cloud" level="INFO" />
        <logger name="com.netflix" level="INFO"></logger>
        <logger name="org.springframework.boot" level="INFO"></logger>
        <logger name="org.springframework.web" level="INFO"/>
        <logger name="jdbc.sqltiming" level="debug"/>
        <logger name="com.ibatis" level="debug" />
        <logger name="com.ibatis.common.jdbc.SimpleDataSource" level="debug" />
        <logger name="com.ibatis.common.jdbc.ScriptRunner" level="debug" />
        <logger name="com.ibatis.sqlmap.engine.impl.SqlMapClientDelegate" level="debug" />
        <logger name="java.sql.Connection" level="debug" />
        <logger name="java.sql.Statement" level="debug" />
        <logger name="java.sql.PreparedStatement" level="debug" />
        <logger name="java.sql.ResultSet" level="debug" />
        <logger name="com.warm" level="debug"/>

        <!-- Both <root> elements below configure the same root logger: the level of the last one
             (ERROR) wins and the appender-refs accumulate, so only the loggers configured above log
             below ERROR. file_error still receives only ERROR events because of its ThresholdFilter. -->
        <root level="DEBUG">
            <appender-ref ref="console" />
            <appender-ref ref="file" />
        </root>
        <root level="ERROR">
            <appender-ref ref="file_error" />
            <!-- <appender-ref ref="DB-CLASSIC-MYSQL-POOL" /> -->
        </root>
    </springProfile>

    <springProfile name="test">
        <logger name="org.springboot.sample" level="TRACE" />
        <logger name="org.springframework.cloud" level="INFO" />
        <logger name="com.netflix" level="INFO"></logger>
        <logger name="org.springframework.boot" level="INFO"></logger>
        <logger name="org.springframework.web" level="INFO"/>
        <logger name="jdbc.sqltiming" level="debug"/>
        <logger name="com.ibatis" level="debug" />
        <logger name="com.ibatis.common.jdbc.SimpleDataSource" level="debug" />
        <logger name="com.ibatis.common.jdbc.ScriptRunner" level="debug" />
        <logger name="com.ibatis.sqlmap.engine.impl.SqlMapClientDelegate" level="debug" />
        <logger name="java.sql.Connection" level="debug" />
        <logger name="java.sql.Statement" level="debug" />
        <logger name="java.sql.PreparedStatement" level="debug" />
        <logger name="java.sql.ResultSet" level="debug" />
        <logger name="com.warm" level="DEBUG"/>
        <root level="DEBUG">
            <!-- <appender-ref ref="console" /> -->
            <appender-ref ref="file" />
        </root>
        <root level="ERROR">
            <appender-ref ref="file_error" />
            <!-- <appender-ref ref="DB-CLASSIC-MYSQL-POOL" /> -->
        </root>
    </springProfile>

    <springProfile name="prod">
        <logger name="org.springboot.sample" level="TRACE" />
        <logger name="org.springframework.cloud" level="INFO" />
        <logger name="com.netflix" level="INFO"></logger>
        <logger name="org.springframework.boot" level="INFO"></logger>
        <logger name="org.springframework.web" level="INFO"/>
        <logger name="jdbc.sqltiming" level="debug"/>
        <logger name="com.ibatis" level="debug" />
        <logger name="com.ibatis.common.jdbc.SimpleDataSource" level="debug" />
        <logger name="com.ibatis.common.jdbc.ScriptRunner" level="debug" />
        <logger name="com.ibatis.sqlmap.engine.impl.SqlMapClientDelegate" level="debug" />
        <logger name="java.sql.Connection" level="debug" />
        <logger name="java.sql.Statement" level="debug" />
        <logger name="java.sql.PreparedStatement" level="debug" />
        <logger name="java.sql.ResultSet" level="debug" />
        <logger name="com.warm" level="info"/>
        <root level="DEBUG">
            <!-- <appender-ref ref="console" /> -->
            <appender-ref ref="file" />
        </root>
        <root level="ERROR">
            <appender-ref ref="file_error" />
            <!-- <appender-ref ref="DB-CLASSIC-MYSQL-POOL" /> -->
        </root>
    </springProfile>

</configuration>
