I. Environment setup

1. System environment

[root@date ~]# cat /etc/centos-release

CentOS Linux release 7.4.1708 (Core)

2. Install the Java environment

yum -y install java java-1.8.0-openjdk-devel

# jps requires the JDK development package (java-1.8.0-openjdk-devel)

3. Download Kafka

[root@slave1 ~]# ls kafka_2.11-1.0.0.tgz

kafka_2.11-1.0.0.tgz

II. Configure ZooKeeper
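The commands in this part read and write files under /opt/kafka, so the archive from the previous step has to be unpacked there on every node first. The article does not show that step. A minimal sketch, assuming the archive contains a single top-level directory and that tar is used to unpack it; the function name unpack_kafka is hypothetical:

```shell
# Hypothetical helper: unpack a Kafka release archive into a target directory.
# Assumes the archive has one top-level directory (e.g. kafka_2.11-1.0.0/),
# which --strip-components=1 drops so that bin/ and config/ sit directly
# under the target.
unpack_kafka() {
    archive=$1
    target=$2
    mkdir -p "$target" &&
        tar -xzf "$archive" -C "$target" --strip-components=1
}

# Example (run on every node):
#   unpack_kafka /root/kafka_2.11-1.0.0.tgz /opt/kafka
```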

1. Edit the ZooKeeper configuration file bundled with Kafka

[root@slave1 ~]# cat /opt/kafka/config/zookeeper.properties | grep -v "^$" | grep -v "^#"

tickTime=2000

initLimit=10

syncLimit=5

maxClientCnxns=300

dataDir=/opt/zookeeper/data

dataLogDir=/opt/zookeeper/log

clientPort=2181

server.1=20.0.5.11:2888:3888

server.2=20.0.5.12:2888:3888

server.3=20.0.5.13:2888:3888

2. Copy the configuration file to the other nodes

[root@slave1 ~]# pscp.pssh -h zlist /opt/kafka/config/zookeeper.properties /opt/kafka/config/

[root@slave1 ~]# cat zlist

20.0.5.11

20.0.5.12

20.0.5.13

3. Create the data and log directories on every node

[root@slave1 ~]# pssh -h zlist 'mkdir -p /opt/zookeeper/data'

[root@slave1 ~]# pssh -h zlist 'mkdir -p /opt/zookeeper/log'

4. Create the myid file
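Each node's myid file must contain the N from that node's own server.N line in zookeeper.properties. A sketch that looks the number up from the configuration file by IP address rather than typing it per host; the function name myid_for is hypothetical:

```shell
# Hypothetical helper: print the ZooKeeper id for a given IP address by
# finding the matching "server.N=IP:2888:3888" line in zookeeper.properties.
myid_for() {
    ip=$1
    conf=$2
    # Split on "=" and ":" so $1 is "server.N" and $2 is the address.
    awk -F'[=:]' -v ip="$ip" '
        $1 ~ /^server\.[0-9]+$/ && $2 == ip { sub(/^server\./, "", $1); print $1 }
    ' "$conf"
}

# Example (on the node with address 20.0.5.12):
#   myid_for 20.0.5.12 /opt/kafka/config/zookeeper.properties > /opt/zookeeper/data/myid
```

If the address has no server.N line the function prints nothing, so an empty myid file is a sign that the host is missing from the configuration.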

[root@slave1 ~]# pssh -H slave1 -i 'echo 1 > /opt/zookeeper/data/myid'

[root@slave1 ~]# pssh -H slave2 -i 'echo 2 > /opt/zookeeper/data/myid'

[root@slave1 ~]# pssh -H slave3 -i 'echo 3 > /opt/zookeeper/data/myid'

5. Start ZooKeeper

[root@slave1 ~]# pssh -h zlist 'nohup /opt/kafka/bin/zookeeper-server-start.sh /opt/kafka/config/zookeeper.properties &'

[root@slave1 ~]# pssh -h zlist -i 'jps'

[1] 23:29:36 [SUCCESS] 20.0.5.12

3492 QuorumPeerMain

3898 Jps

[2] 23:29:36 [SUCCESS] 20.0.5.11

7369 QuorumPeerMain

9884 Jps

[3] 23:29:36 [SUCCESS] 20.0.5.13

3490 QuorumPeerMain

3898 Jps

III. Configure Kafka

1. Edit server.properties

[root@slave1 ~]# cat /opt/kafka/config/server.properties

broker.id=1

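# Unique ID of this broker within the cluster

host.name=20.0.5.11

# IP address of this broker (host.name is deprecated; newer configurations use listeners instead)

port=9092

# Port the broker listens on (deprecated together with host.name)

num.network.threads=3

# Number of threads that receive requests from the network and send responses

num.io.threads=8

# Number of threads that process requests, including disk I/O

socket.send.buffer.bytes=102400

# Size of the socket send buffer (SO_SNDBUF), in bytes

socket.receive.buffer.bytes=102400

# Size of the socket receive buffer (SO_RCVBUF), in bytes

socket.request.max.bytes=104857600

# Maximum size of a single request the broker accepts, in bytes

log.dirs=/opt/kafkalog

# Directory where message data is stored

delete.topic.enable=true

# Allow topics to be deleted from the command line

num.partitions=1

# Default number of partitions for a newly created topic

num.recovery.threads.per.data.dir=1

# Threads per data directory used for log recovery at startup and flushing at shutdown

offsets.topic.replication.factor=3

# Replication factor of the internal topic that stores consumer offsets

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=3

log.retention.hours=168

# How long messages are kept, in hours (168 hours = 7 days)

log.segment.bytes=1073741824

# Maximum size of a log segment file; a new segment is created once it is reached

log.retention.check.interval.ms=300000

# Interval between retention checks, in milliseconds

zookeeper.connect=20.0.5.11:2181,20.0.5.12:2181,20.0.5.13:2181

# ZooKeeper connection string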

zookeeper.connection.timeout.ms=6000

# Timeout for connecting to ZooKeeper, in milliseconds

2. Copy the broker configuration file to the other nodes (change broker.id and host.name on each)
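Only broker.id and host.name differ between nodes. A minimal sketch of producing a node's copy from the first node's file with sed; the function name render_broker_conf and the temporary file name are hypothetical, and the mapping of ids to addresses (1 for 20.0.5.11, 2 for 20.0.5.12, 3 for 20.0.5.13) is an assumption that matches the broker ids 1 to 3 seen in the topic description later on:

```shell
# Hypothetical helper: print a copy of a server.properties template with
# broker.id and host.name replaced for one node. The template itself is
# left unchanged.
render_broker_conf() {
    template=$1
    id=$2
    host=$3
    sed -e "s/^broker\.id=.*/broker.id=$id/" \
        -e "s/^host\.name=.*/host.name=$host/" "$template"
}

# Example: generate the file for the second node, then copy it over.
#   render_broker_conf /opt/kafka/config/server.properties 2 20.0.5.12 > /tmp/server.properties.2
#   scp /tmp/server.properties.2 20.0.5.12:/opt/kafka/config/server.properties
```

Writing to a separate file first keeps the first node's own configuration intact while the other copies are generated.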

3. Start the Kafka brokers

[root@slave1 ~]# pssh -h zlist '/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties > /root/kafka.log 2>&1 &'

[1] 02:13:05 [SUCCESS] 20.0.5.11

[2] 02:13:05 [SUCCESS] 20.0.5.12

[3] 02:13:05 [SUCCESS] 20.0.5.13

[root@slave1 ~]# pssh -h zlist -i 'jps'

[1] 02:14:51 [SUCCESS] 20.0.5.12

3492 QuorumPeerMain

6740 Jps

6414 Kafka

[2] 02:14:51 [SUCCESS] 20.0.5.13

3490 QuorumPeerMain

4972 Kafka

5293 Jps

[3] 02:14:51 [SUCCESS] 20.0.5.11

7369 QuorumPeerMain

11534 Kafka

11870 Jps

4. Create a topic
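A topic with replication factor 3 can only be created while all three brokers are registered, so it is worth confirming the jps output first. A sketch that counts how many hosts in captured pssh output report a given process; the function name hosts_running is hypothetical, and the parsing assumes the output layout shown above:

```shell
# Hypothetical helper: read `pssh -h zlist -i 'jps'` output on stdin and
# print the number of hosts whose jps listing contains the named process.
# Assumes each host block starts with a line like
# "[1] 02:14:51 [SUCCESS] 20.0.5.12".
hosts_running() {
    awk -v name="$1" '
        /^\[[0-9]+\] / { seen = 0; next }          # start of a new host block
        $2 == name && !seen { seen = 1; count++ }  # count each host at most once
        END { print count + 0 }
    '
}

# Example:
#   pssh -h zlist -i 'jps' | hosts_running Kafka
#   pssh -h zlist -i 'jps' | hosts_running QuorumPeerMain
```

On this three-node cluster both counts should equal the number of lines in zlist before the topic is created.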

[root@slave1 ~]# /opt/kafka/bin/kafka-topics.sh --create --zookeeper 20.0.5.11:2181,20.0.5.12:2181,20.0.5.13:2181 --replication-factor 3 --partitions 3 --topic test1

Created topic "test1".

[root@slave1 ~]# /opt/kafka/bin/kafka-topics.sh --describe --zookeeper 20.0.5.11:2181,20.0.5.12:2181,20.0.5.13:2181 --topic test1

Topic:test1 PartitionCount:3 ReplicationFactor:3 Configs:

Topic: test1 Partition: 0 Leader: 2 Replicas: 2,3,1 Isr: 2,3,1

Topic: test1 Partition: 1 Leader: 3 Replicas: 3,1,2 Isr: 3,1,2

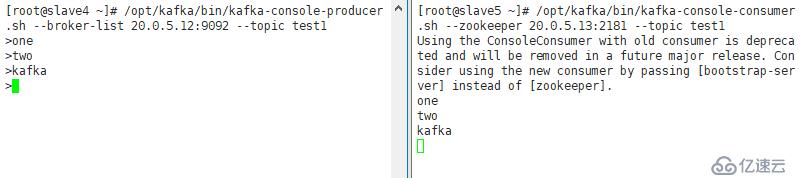

Topic: test1 Partition: 2 Leader: 1 Replicas: 1,2,3 Isr: 1,2,35.启动producer和consumer

[root@slave4 ~]# /opt/kafka/bin/kafka-console-producer.sh --broker-list 20.0.5.12:9092 --topic test1

[root@slave5 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 20.0.5.13:2181 --topic test1
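Both --broker-list and --zookeeper accept a comma-separated list of endpoints, so a client does not have to depend on one particular node being up. A sketch that builds such a list from the zlist host file used earlier; the function name endpoint_list is hypothetical:

```shell
# Hypothetical helper: turn a host file (one address per line) into a
# comma-separated host:port list, skipping blank lines.
endpoint_list() {
    hostfile=$1
    port=$2
    awk -v port="$port" '
        NF { printf "%s%s:%s", sep, $1, port; sep = "," }
        END { if (sep != "") print "" }
    ' "$hostfile"
}

# Example:
#   /opt/kafka/bin/kafka-console-producer.sh --broker-list "$(endpoint_list zlist 9092)" --topic test1
```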

6. Delete the topic

[root@slave1 ~]# /opt/kafka/bin/kafka-topics.sh --delete --zookeeper 20.0.5.11:2181 --topic test1

Topic test1 is marked for deletion.

Note: This will have no impact if delete.topic.enable is not set to true.
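Whether the deletion above takes effect depends on that broker setting, so it can help to read it back from the file on each node. A sketch, assuming plain key=value lines like those in the server.properties shown earlier; the function name prop_get is hypothetical:

```shell
# Hypothetical helper: print the value of a key from a Java-style
# properties file that uses plain "key=value" lines (no escapes or line
# continuations). Commented-out lines are ignored; if the key appears
# more than once, the last occurrence wins.
prop_get() {
    key=$1
    file=$2
    awk -v key="$key" '
        /^[ \t]*#/ { next }                        # skip comment lines
        { pos = index($0, "=") }
        pos > 0 && substr($0, 1, pos - 1) == key { val = substr($0, pos + 1) }
        END { if (val != "") print val }
    ' "$file"
}

# Example:
#   prop_get delete.topic.enable /opt/kafka/config/server.properties
```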
