This article explains how to implement an audio recording plugin for Flutter on Android, and compares it with the iOS side of the same plugin.

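Flutter's official approach is to register plugins automatically, which is convenient.

This article registers the plugin manually instead, which is where it differs.

The plugin is AudioRecorderPlugin.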

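    import android.os.Bundle
    import io.flutter.embedding.android.FlutterActivity

    class MainActivity: FlutterActivity() {
        override fun onCreate(savedInstanceState: Bundle?) {
            super.onCreate(savedInstanceState)
            // Register the plugin by hand instead of relying on automatic registration.
            flutterEngine!!.plugins.add(AudioRecorderPlugin())
        }
    }

The core of the plugin is its method-call callback.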

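The handler below covers, in order: starting a recording (start), stopping it (stop), querying whether a recording is in progress (isRecording), and checking whether the recording permissions have been granted (hasPermissions).

Note that "recording permissions" here means two permissions: microphone access and storage access.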

@Override

public void onMethodCall(@NonNull MethodCall call, @NonNull Result result) {

switch (call.method) {

case "start":

Log.d(LOG_TAG, "Start");

Log.d(LOG_TAG, "11111____");

String path = call.argument("path");

mExtension = call.argument("extension");

startTime = Calendar.getInstance().getTime();

if (path != null) {

mFilePath = Environment.getExternalStorageDirectory().getAbsolutePath() + "/" + path;

} else {

Log.d(LOG_TAG, "11111____222");

String fileName = String.valueOf(startTime.getTime());

mFilePath = Environment.getExternalStorageDirectory().getAbsolutePath() + "/" + fileName + mExtension;

}

Log.d(LOG_TAG, mFilePath);

startRecording();

isRecording = true;

result.success(null);

break;

case "stop":

Log.d(LOG_TAG, "Stop");

stopRecording();

long duration = Calendar.getInstance().getTime().getTime() - startTime.getTime();

Log.d(LOG_TAG, "Duration : " + String.valueOf(duration));

isRecording = false;

HashMap<String, Object> recordingResult = new HashMap<>();

recordingResult.put("duration", duration);

recordingResult.put("path", mFilePath);

recordingResult.put("audioOutputFormat", mExtension);

result.success(recordingResult);

break;

case "isRecording":

Log.d(LOG_TAG, "Get isRecording");

result.success(isRecording);

break;

case "hasPermissions":

Log.d(LOG_TAG, "Get hasPermissions");

Context context = _flutterBinding.getApplicationContext();

PackageManager pm = context.getPackageManager();

int hasStoragePerm = pm.checkPermission(Manifest.permission.WRITE_EXTERNAL_STORAGE, context.getPackageName());

int hasRecordPerm = pm.checkPermission(Manifest.permission.RECORD_AUDIO, context.getPackageName());

boolean hasPermissions = hasStoragePerm == PackageManager.PERMISSION_GRANTED && hasRecordPerm == PackageManager.PERMISSION_GRANTED;

result.success(hasPermissions);

break;

default:

result.notImplemented();

break;

}

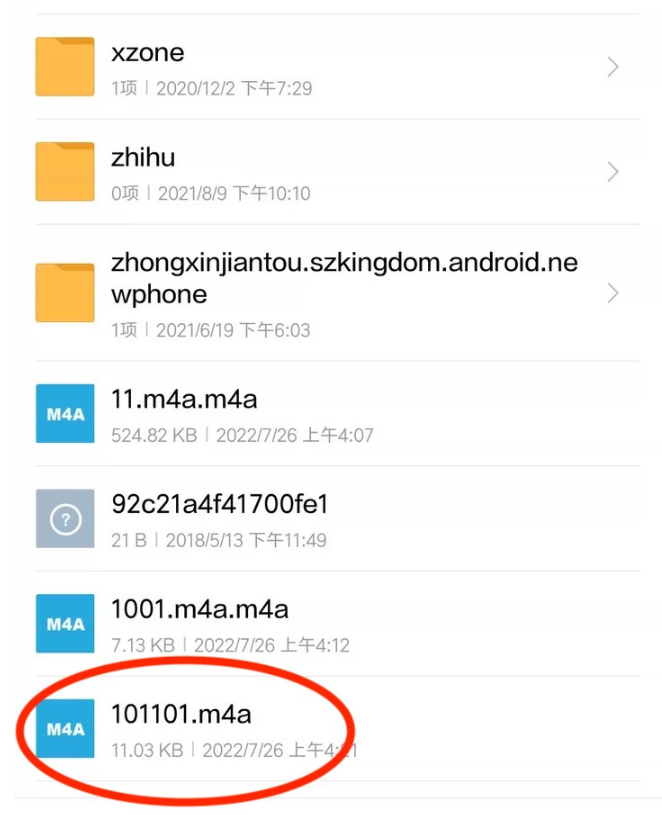

}使用 wav 的封装格式,用 AudioRecord;

其他封装格式,用 MediaRecorder
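AudioRecord hands the app raw PCM samples, so a wav file only becomes playable once a RIFF header is written in front of those samples. The article does not show that step; the following is a minimal plain-Java sketch of it, not the plugin's actual code. The class name WavHeader and the parameter values in the usage note are assumptions made for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: builds the canonical 44-byte RIFF/WAVE header for uncompressed PCM. */
final class WavHeader {
    static final int HEADER_SIZE = 44;

    static byte[] build(int sampleRate, int channels, int bitsPerSample, int pcmLength) {
        int blockAlign = channels * bitsPerSample / 8; // bytes per sample frame
        int byteRate = sampleRate * blockAlign;        // bytes per second
        // All multi-byte fields in a WAV header are little-endian.
        ByteBuffer b = ByteBuffer.allocate(HEADER_SIZE).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        b.putInt(36 + pcmLength);                      // size of everything after this field
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        b.putInt(16);                                  // length of the fmt chunk for PCM
        b.putShort((short) 1);                         // audio format 1 = uncompressed PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(byteRate);
        b.putShort((short) blockAlign);
        b.putShort((short) bitsPerSample);
        b.put("data".getBytes(StandardCharsets.US_ASCII));
        b.putInt(pcmLength);                           // number of PCM bytes that follow
        return b.array();
    }
}
```

For example, WavHeader.build(44100, 1, 16, pcmBytes.length) would describe mono 16-bit audio at 44.1 kHz; the header is written first and the PCM bytes read from AudioRecord follow it. Because the data length is only known when recording stops, a real implementation would typically reserve the 44 bytes up front and fill them in at the end.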

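Both recorders above support starting and stopping a recording.

Pausing and resuming are implemented by starting and stopping several times and then concatenating the resulting files.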
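The concatenation step is not shown in the article. For wav segments it could look roughly like the plain-Java sketch below, which assumes every segment starts with a canonical 44-byte header and was recorded with the same sample rate, channel count, and bit depth. The class name WavConcat is hypothetical. Byte-level joining like this only applies to uncompressed wav data; compressed containers such as m4a cannot simply be appended to each other.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.List;

/** Hypothetical helper: joins WAV segments recorded between pause and resume. */
final class WavConcat {
    static final int HEADER_SIZE = 44; // assumption: canonical PCM header, no extra chunks

    static byte[] concat(List<byte[]> segments) {
        if (segments.isEmpty()) {
            throw new IllegalArgumentException("no segments");
        }
        for (byte[] s : segments) {
            if (s.length < HEADER_SIZE) {
                throw new IllegalArgumentException("segment shorter than a WAV header");
            }
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        // Reuse the first segment's header; its format fields apply to all segments.
        out.write(segments.get(0), 0, HEADER_SIZE);
        int pcmLength = 0;
        for (byte[] s : segments) {
            // Skip each segment's own header and append only its PCM payload.
            out.write(s, HEADER_SIZE, s.length - HEADER_SIZE);
            pcmLength += s.length - HEADER_SIZE;
        }
        byte[] wav = out.toByteArray();
        // Patch the two little-endian size fields so they describe the joined file.
        ByteBuffer b = ByteBuffer.wrap(wav).order(ByteOrder.LITTLE_ENDIAN);
        b.putInt(4, 36 + pcmLength);  // RIFF chunk size
        b.putInt(40, pcmLength);      // data chunk size
        return wav;
    }
}
```

The sketch works on in-memory byte arrays to stay short; for long recordings a real implementation would stream from and to files instead of holding every segment in memory.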

On the Dart side, create a MethodChannel and call the native functions above asynchronously.

    import 'package:file/local.dart';
    import 'package:flutter/services.dart';
    import 'package:path/path.dart' as p;

    // Recording, AudioOutputFormat and the _convert*/_isAudioOutputFormat helpers
    // belong to the plugin but are not shown in this article.
    class AudioRecorder {
      static const MethodChannel _channel = MethodChannel('audio_recorder');
      static LocalFileSystem fs = LocalFileSystem();

      static Future start(String? path, AudioOutputFormat? audioOutputFormat) async {
        String extension;
        if (path != null) {
          if (audioOutputFormat != null) {
            if (_convertStringInAudioOutputFormat(p.extension(path)) !=
                audioOutputFormat) {
              // The path's extension disagrees with the requested format: append the right one.
              extension = _convertAudioOutputFormatInString(audioOutputFormat);
              path += extension;
            } else {
              extension = p.extension(path);
            }
          } else {
            if (_isAudioOutputFormat(p.extension(path))) {
              extension = p.extension(path);
            } else {
              extension = ".m4a"; // default value
              path += extension;
            }
          }
          // Refuse to overwrite an existing file or to write into a missing directory.
          final file = fs.file(path);
          if (await file.exists()) {
            throw Exception("A file already exists at the path :" + path);
          } else if (!await file.parent.exists()) {
            throw Exception("The specified parent directory does not exist");
          }
        } else {
          extension = ".m4a"; // default value
        }
        return _channel
            .invokeMethod('start', {"path": path, "extension": extension});
      }

      static Future<Recording?> stop() async {
        // Copy the values returned by the native side into a map.
        final Map<String, dynamic>? response =
            await _channel.invokeMapMethod<String, dynamic>('stop');
        if (response == null) {
          return null;
        }
        final int duration = response['duration'];
        final String? fmt = response['audioOutputFormat'];
        final AudioOutputFormat? outputFmt =
            fmt == null ? null : _convertStringInAudioOutputFormat(fmt);
        if (fmt == null || outputFmt == null) {
          return null;
        }
        return Recording(
            Duration(milliseconds: duration), response['path'], outputFmt, fmt);
      }
    }

The iOS plugin is named SwiftAudioRecorderPlugin.

    import AVFoundation
    import Flutter
    import UIKit

    // getOutputFormatFromString belongs to the plugin but is not shown in this article.
    public class SwiftAudioRecorderPlugin: NSObject, FlutterPlugin, AVAudioRecorderDelegate {
        var isRecording = false
        var hasPermissions = false
        var mExtension = ""
        var mPath = ""
        var startTime: Date!
        var audioRecorder: AVAudioRecorder?

        public static func register(with registrar: FlutterPluginRegistrar) {
            let channel = FlutterMethodChannel(name: "audio_recorder", binaryMessenger: registrar.messenger())
            let instance = SwiftAudioRecorderPlugin()
            registrar.addMethodCallDelegate(instance, channel: channel)
        }

        public func handle(_ call: FlutterMethodCall, result: @escaping FlutterResult) {
            switch call.method {
            case "start":
                print("start")
                let dic = call.arguments as! [String : Any]
                mExtension = dic["extension"] as? String ?? ""
                mPath = dic["path"] as? String ?? ""
                startTime = Date()
                let documentsPath = NSSearchPathForDirectoriesInDomains(.documentDirectory, .userDomainMask, true)[0]
                if mPath == "" {
                    // No path given: name the file after the start timestamp.
                    mPath = documentsPath + "/" + String(Int(startTime.timeIntervalSince1970)) + ".m4a"
                } else {
                    mPath = documentsPath + "/" + mPath
                }
                print("path: " + mPath)
                let settings: [String: Any] = [
                    AVFormatIDKey: getOutputFormatFromString(mExtension),
                    AVSampleRateKey: 12000,
                    AVNumberOfChannelsKey: 1,
                    AVEncoderAudioQualityKey: AVAudioQuality.high.rawValue
                ]
                do {
                    try AVAudioSession.sharedInstance().setCategory(AVAudioSession.Category.playAndRecord, options: AVAudioSession.CategoryOptions.defaultToSpeaker)
                    try AVAudioSession.sharedInstance().setActive(true)
                    // mPath is a file-system path, so build a file URL from it.
                    let recorder = try AVAudioRecorder(url: URL(fileURLWithPath: mPath), settings: settings)
                    recorder.delegate = self
                    recorder.record()
                    audioRecorder = recorder
                    // Report success only when recording actually started,
                    // so that result is called exactly once.
                    isRecording = true
                    result(nil)
                } catch {
                    print("fail")
                    result(FlutterError(code: "", message: "Failed to record", details: nil))
                }
            case "pause":
                audioRecorder?.pause()
                result(nil)
            case "resume":
                audioRecorder?.record()
                result(nil)
            case "stop":
                print("stop")
                audioRecorder?.stop()
                audioRecorder = nil
                // Duration in milliseconds since "start" was handled.
                let duration = Int(Date().timeIntervalSince(startTime) * 1000)
                isRecording = false
                var recordingResult = [String : Any]()
                recordingResult["duration"] = duration
                recordingResult["path"] = mPath
                recordingResult["audioOutputFormat"] = mExtension
                result(recordingResult)
            case "isRecording":
                print("isRecording")
                result(isRecording)
            case "hasPermissions":
                print("hasPermissions")
                switch AVAudioSession.sharedInstance().recordPermission {
                case AVAudioSession.RecordPermission.granted:
                    print("granted")
                    hasPermissions = true
                    result(hasPermissions)
                case AVAudioSession.RecordPermission.denied:
                    print("denied")
                    hasPermissions = false
                    result(hasPermissions)
                case AVAudioSession.RecordPermission.undetermined:
                    print("undetermined")
                    // Ask the user, and reply only once the answer is known.
                    AVAudioSession.sharedInstance().requestRecordPermission() { [unowned self] allowed in
                        DispatchQueue.main.async {
                            self.hasPermissions = allowed
                            result(allowed)
                        }
                    }
                default:
                    result(hasPermissions)
                }
            default:
                result(FlutterMethodNotImplemented)
            }
        }
    }

The logic mirrors the Android plugin.

Because AVAudioRecorder on iOS supports pause and resume directly, the iOS plugin adds pause and resume calls.

iOS needs one permission fewer than Android: only the microphone permission is required.

By checking the platform with Platform.isIOS, the UI offers the fuller feature set on iOS devices.

    // Platform comes from dart:io.
    @override
    Widget build(BuildContext context) {
      final VoidCallback tapFirst;
      if (Platform.isAndroid && name == kEnd) {
        tapFirst = _audioEnd;
      } else {
        tapFirst = _audioGoOn;
      }
      List<Widget> views = [
        ElevatedButton(
          child: Text(
            name,
            style: Theme.of(context).textTheme.headline4,
          ),
          onPressed: tapFirst,
        )
      ];
      // iOS supports pause and resume, so it gets a separate button for ending the recording.
      if (Platform.isIOS && name != kStarted) {
        views.add(SizedBox(height: 80));
        views.add(ElevatedButton(
          child: Text(
            kEnd,
            style: Theme.of(context).textTheme.headline4,
          ),
          onPressed: _audioEnd,
        ));
      }
      return Scaffold(
        appBar: AppBar(
          // Here we take the value from the MyHomePage object that was created by
          // the App.build method, and use it to set our appbar title.
          title: Text(widget.title),
        ),
        body: Center(
          // Center is a layout widget. It takes a single child and positions it
          // in the middle of the parent.
          child: Column(
            mainAxisAlignment: MainAxisAlignment.center,
            children: views,
          ),
        ), // This trailing comma makes auto-formatting nicer for build methods.
      );
    }
