Operating system: CentOS Linux release 7.6.1810 (Core)

Kernel version: Linux node03 3.10.0-957.21.3.el7.x86_64 #1 SMP Tue Jun 18 16:35:19 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

kubernetes:Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.6", GitCommit:"96fac5cd13a5dc064f7d9f4f23030a6aeface6cc", GitTreeState:"archive", BuildDate:"2019-08-22T01:38:12Z", GoVersion:"go1.12.7", Compiler:"gc", Platform:"linux/amd64"}

kubectl:Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.3", GitCommit:"2d3c76f9091b6bec110a5e63777c332469e0cba2", GitTreeState:"archive", BuildDate:"2019-09-04T10:28:54Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

Workstation OS: win10 on Ubuntu 18.04.3 LTS

helm:version.BuildInfo{Version:"v3.0.0-beta.3", GitCommit:"5cb923eecbe80d1ad76399aee234717c11931d9a", GitTreeState:"clean", GoVersion:"go1.12.9"}

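# Note: this Helm version is not compatible with earlier versions, so be careful; several Helm versions can be installed side by side.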

istio:1.2.5

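# Remove AlwaysPullImages from the kube-apiserver admission plugin configuration; otherwise automatic sidecar injection does not take effect.

# Download Istio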

curl -L https://git.io/getLatestIstio | ISTIO_VERSION=1.2.5 sh -

# Enter the Istio package directory

cd istio-1.2.5

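# The installation directory contains:

# install/ holds the .yaml files needed to install on Kubernetes

# samples/ holds the sample applications

# bin/ holds the istioctl client binary, which is used to inject the Envoy sidecar manually

# istio.VERSION, a configuration file

# Add the istioctl client to the PATH environment variable, for example: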

echo export PATH=$PWD/bin:\$PATH >>/etc/profile

. /etc/profile

export PATH=$PWD/bin:$PATH

cd install/kubernetes/helm/istio
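The PATH steps above append a new line to /etc/profile every time they are run. As a small sketch of an alternative for the current shell only, the helper below (the name `path_prepend` is my own, not part of Istio) prepends a directory to PATH only when it is not already listed. It is meant to be called from the istio-1.2.5 directory, before changing into the chart directory.

```shell
# Hypothetical helper: prepend a directory to PATH unless it is already listed.
path_prepend() {
    dir=$1
    case ":$PATH:" in
        *":$dir:"*) ;;                 # already present, leave PATH untouched
        *) PATH="$dir:$PATH" ;;        # otherwise put it in front
    esac
    export PATH
}

# From inside istio-1.2.5 this makes bin/istioctl reachable.
path_prepend "$PWD/bin"
```

Calling it twice with the same directory leaves PATH unchanged the second time.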

# Compare with the changes I made below and edit values.yaml accordingly

# Top level istio values file has the following sections.

#

# global: This file is the authoritative and exhaustive source for the global section.

#

# chart sections: Every subdirectory inside the charts/ directory has a top level

# configuration key in this file. This file overrides the values specified

# by the charts/${chartname}/values.yaml.

# Check the chart level values file for exhaustive list of configuration options.

#

# Gateways Configuration, refer to the charts/gateways/values.yaml

# for detailed configuration

#

gateways:

enabled: true

#

# sidecar-injector webhook configuration, refer to the

# charts/sidecarInjectorWebhook/values.yaml for detailed configuration

#

sidecarInjectorWebhook:

enabled: true

#

# galley configuration, refer to charts/galley/values.yaml

# for detailed configuration

#

galley:

enabled: true

#

# mixer configuration

#

# @see charts/mixer/values.yaml, it takes precedence

mixer:

policy:

# if policy is enabled the global.disablePolicyChecks has affect.

enabled: true

telemetry:

enabled: true

#

# pilot configuration

#

# @see charts/pilot/values.yaml

pilot:

enabled: true

#

# security configuration

#

security:

enabled: true

#

# nodeagent configuration

#

nodeagent:

enabled: false

#

# addon grafana configuration

#

grafana:

enabled: true

#

# addon prometheus configuration

#

prometheus:

enabled: true

#

# addon jaeger tracing configuration

#

tracing:

enabled: true

#

# addon kiali tracing configuration

#

kiali:

enabled: true

#

# addon certmanager configuration

#

certmanager:

enabled: false

#

# Istio CNI plugin enabled

# This must be enabled to use the CNI plugin in Istio. The CNI plugin is installed separately.

# If true, the privileged initContainer istio-init is not needed to perform the traffic redirect

# settings for the istio-proxy.

#

istio_cni:

enabled: true

# addon Istio CoreDNS configuration

#

istiocoredns:

enabled: false

# Common settings used among istio subcharts.

global:

# Default hub for Istio images.

# Releases are published to docker hub under 'istio' project.

# Daily builds from prow are on gcr.io, and nightly builds from circle on docker.io/istionightly

hub: docker.io/istio

# Default tag for Istio images.

tag: 1.2.5

# Comma-separated minimum per-scope logging level of messages to output, in the form of <scope>:<level>,<scope>:<level>

# The control plane has different scopes depending on component, but can configure default log level across all components

# If empty, default scope and level will be used as configured in code

logging:

level: "default:info"

# monitoring port used by mixer, pilot, galley

monitoringPort: 15014

k8sIngress:

enabled: true

# Gateway used for k8s Ingress resources. By default it is

# using 'istio:ingressgateway' that will be installed by setting

# 'gateways.enabled' and 'gateways.istio-ingressgateway.enabled'

# flags to true.

gatewayName: ingressgateway

# enableHttps will add port 443 on the ingress.

# It REQUIRES that the certificates are installed in the

# expected secrets - enabling this option without certificates

# will result in LDS rejection and the ingress will not work.

enableHttps: false

proxy:

image: proxyv2

# Change this to the cluster domain configured for your own k8s cluster

# cluster domain. Default value is "cluster.local".

clusterDomain: "cluster.local"

# Resources for the sidecar.

resources:

requests:

cpu: 100m

memory: 128Mi

limits:

cpu: 2000m

memory: 1024Mi

# Controls number of Proxy worker threads.

# If set to 0 (default), then start worker thread for each CPU thread/core.

concurrency: 2

# Configures the access log for each sidecar.

# Options:

# "" - disables access log

# "/dev/stdout" - enables access log

accessLogFile: "/dev/stdout"

# Configure how and what fields are displayed in sidecar access log. Setting to

# empty string will result in default log format

accessLogFormat: ""

# Configure the access log for sidecar to JSON or TEXT.

accessLogEncoding: TEXT

# Log level for proxy, applies to gateways and sidecars. If left empty, "warning" is used.

# Expected values are: trace|debug|info|warning|error|critical|off

logLevel: "info"

# Per Component log level for proxy, applies to gateways and sidecars. If a component level is

# not set, then the global "logLevel" will be used. If left empty, "misc:error" is used.

componentLogLevel: ""

# Configure the DNS refresh rate for Envoy cluster of type STRICT_DNS

# This must be given in terms of seconds. For example, 300s is valid but 5m is invalid.

dnsRefreshRate: 300s

#If set to true, istio-proxy container will have privileged securityContext

privileged: false

# If set, newly injected sidecars will have core dumps enabled.

enableCoreDump: false

# Default port for Pilot agent health checks. A value of 0 will disable health checking.

statusPort: 15020

# The initial delay for readiness probes in seconds.

readinessInitialDelaySeconds: 1

# The period between readiness probes.

readinessPeriodSeconds: 2

# The number of successive failed probes before indicating readiness failure.

readinessFailureThreshold: 30

# istio egress capture whitelist

# https://istio.io/docs/tasks/traffic-management/egress.html#calling-external-services-directly

# example: includeIPRanges: "172.30.0.0/16,172.20.0.0/16"

# would only capture egress traffic on those two IP Ranges, all other outbound traffic would

# be allowed by the sidecar

includeIPRanges: "*"

excludeIPRanges: ""

excludeOutboundPorts: ""

# pod internal interfaces

kubevirtInterfaces: ""

# istio ingress capture whitelist

# examples:

# Redirect no inbound traffic to Envoy: --includeInboundPorts=""

# Redirect all inbound traffic to Envoy: --includeInboundPorts="*"

# Redirect only selected ports: --includeInboundPorts="80,8080"

includeInboundPorts: "*"

excludeInboundPorts: ""

# This controls the 'policy' in the sidecar injector.

autoInject: disabled

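# When set to enabled, the sidecar is injected automatically into pods in every namespace labeled istio-injection=enabled.

# Pods that should not be injected need the following:

# annotations:

#   sidecar.istio.io/inject: "false"

# With autoInject: disabled, pods that should be injected need the following:

# annotations:

#   sidecar.istio.io/inject: "true"

# Take care not to miss this annotation.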

# Sets the destination Statsd in envoy (the value of the "--statsdUdpAddress" proxy argument

# would be <host>:<port>).

# Disabled by default.

# The istio-statsd-prom-bridge is deprecated and should not be used moving forward.

envoyStatsd:

# If enabled is set to true, host and port must also be provided. Istio no longer provides a statsd collector.

enabled: false

host: # example: statsd-svc.istio-system

port: # example: 9125

# Sets the Envoy Metrics Service address, used to push Envoy metrics to an external collector

# via the Metrics Service gRPC API. This contains detailed stats information emitted directly

# by Envoy and should not be confused with the Istio telemetry. The Envoy stats are also

# available to scrape via the Envoy admin port at either /stats or /stats/prometheus.

#

# See https://www.envoyproxy.io/docs/envoy/latest/api-v2/config/metrics/v2/metrics_service.proto

# for details about Envoy's Metrics Service API.

#

# Disabled by default.

envoyMetricsService:

enabled: false

host: # example: metrics-service.istio-system

port: # example: 15000

# Specify which tracer to use. One of: lightstep, zipkin, datadog

tracer: "zipkin"

proxy_init:

# Base name for the proxy_init container, used to configure iptables.

image: proxy_init

# imagePullPolicy is applied to istio control plane components.

# local tests require IfNotPresent, to avoid uploading to dockerhub.

# TODO: Switch to Always as default, and override in the local tests.

imagePullPolicy: IfNotPresent

# controlPlaneSecurityEnabled enabled. Will result in delays starting the pods while secrets are

# propagated, not recommended for tests.

controlPlaneSecurityEnabled: false

# disablePolicyChecks disables mixer policy checks.

# if mixer.policy.enabled==true then disablePolicyChecks has affect.

# Will set the value with same name in istio config map - pilot needs to be restarted to take effect.

disablePolicyChecks: false

# policyCheckFailOpen allows traffic in cases when the mixer policy service cannot be reached.

# Default is false which means the traffic is denied when the client is unable to connect to Mixer.

policyCheckFailOpen: false

# EnableTracing sets the value with same name in istio config map, requires pilot restart to take effect.

enableTracing: true

# Configuration for each of the supported tracers

tracer:

# Configuration for envoy to send trace data to LightStep.

# Disabled by default.

# address: the <host>:<port> of the satellite pool

# accessToken: required for sending data to the pool

# secure: specifies whether data should be sent with TLS

# cacertPath: the path to the file containing the cacert to use when verifying TLS. If secure is true, this is

# required. If a value is specified then a secret called "lightstep.cacert" must be created in the destination

# namespace with the key matching the base of the provided cacertPath and the value being the cacert itself.

#

lightstep:

address: "" # example: lightstep-satellite:443

accessToken: "" # example: abcdefg1234567

secure: true # example: true|false

cacertPath: "" # example: /etc/lightstep/cacert.pem

zipkin:

# Host:Port for reporting trace data in zipkin format. If not specified, will default to

# zipkin service (port 9411) in the same namespace as the other istio components.

address: ""

datadog:

# Host:Port for submitting traces to the Datadog agent.

address: "$(HOST_IP):8126"

# Default mtls policy. If true, mtls between services will be enabled by default.

mtls:

# Default setting for service-to-service mtls. Can be set explicitly using

# destination rules or service annotations.

enabled: false

# ImagePullSecrets for all ServiceAccount, list of secrets in the same namespace

# to use for pulling any images in pods that reference this ServiceAccount.

# For components that don't use ServiceAccounts (i.e. grafana, servicegraph, tracing)

# ImagePullSecrets will be added to the corresponding Deployment(StatefulSet) objects.

# Must be set for any cluster configured with private docker registry.

imagePullSecrets:

# - private-registry-key

# Specify pod scheduling arch(amd64, ppc64le, s390x) and weight as follows:

# 0 - Never scheduled

# 1 - Least preferred

# 2 - No preference

# 3 - Most preferred

arch:

amd64: 2

s390x: 2

ppc64le: 2

# Whether to restrict the applications namespace the controller manages;

# If not set, controller watches all namespaces

oneNamespace: false

# Default node selector to be applied to all deployments so that all pods can be

# constrained to run a particular nodes. Each component can overwrite these default

# values by adding its node selector block in the relevant section below and setting

# the desired values.

defaultNodeSelector: {}

# Default node tolerations to be applied to all deployments so that all pods can be

# scheduled to a particular nodes with matching taints. Each component can overwrite

# these default values by adding its tolerations block in the relevant section below

# and setting the desired values.

# Configure this field in case that all pods of Istio control plane are expected to

# be scheduled to particular nodes with specified taints.

defaultTolerations: []

# Whether to perform server-side validation of configuration.

configValidation: true

# Custom DNS config for the pod to resolve names of services in other

# clusters. Use this to add additional search domains, and other settings.

# see

# https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/#dns-config

# This does not apply to gateway pods as they typically need a different

# set of DNS settings than the normal application pods (e.g., in

# multicluster scenarios).

# NOTE: If using templates, follow the pattern in the commented example below.

#podDNSSearchNamespaces:

#- global

#- "[[ valueOrDefault .DeploymentMeta.Namespace \"default\" ]].global"

# If set to true, the pilot and citadel mtls will be exposed on the

# ingress gateway

meshExpansion:

enabled: false

# If set to true, the pilot and citadel mtls and the plain text pilot ports

# will be exposed on an internal gateway

useILB: false

multiCluster:

# Set to true to connect two kubernetes clusters via their respective

# ingressgateway services when pods in each cluster cannot directly

# talk to one another. All clusters should be using Istio mTLS and must

# have a shared root CA for this model to work.

enabled: false

# A minimal set of requested resources to applied to all deployments so that

# Horizontal Pod Autoscaler will be able to function (if set).

# Each component can overwrite these default values by adding its own resources

# block in the relevant section below and setting the desired resources values.

defaultResources:

requests:

cpu: 10m

# memory: 128Mi

# limits:

# cpu: 100m

# memory: 128Mi

# enable pod disruption budget for the control plane, which is used to

# ensure Istio control plane components are gradually upgraded or recovered.

defaultPodDisruptionBudget:

enabled: true

# The values aren't mutable due to a current PodDisruptionBudget limitation

# minAvailable: 1

# Kubernetes >=v1.11.0 will create two PriorityClass, including system-cluster-critical and

# system-node-critical, it is better to configure this in order to make sure your Istio pods

# will not be killed because of low priority class.

# Refer to https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

# for more detail.

priorityClassName: ""

# Use the Mesh Control Protocol (MCP) for configuring Mixer and

# Pilot. Requires galley (`--set galley.enabled=true`).

useMCP: true

# The trust domain corresponds to the trust root of a system

# Refer to https://github.com/spiffe/spiffe/blob/master/standards/SPIFFE-ID.md#21-trust-domain

# Indicate the domain used in SPIFFE identity URL

# The default depends on the environment.

# kubernetes: cluster.local

# else: default dns domain

trustDomain: ""

# Set the default behavior of the sidecar for handling outbound traffic from the application:

# ALLOW_ANY - outbound traffic to unknown destinations will be allowed, in case there are no

# services or ServiceEntries for the destination port

# REGISTRY_ONLY - restrict outbound traffic to services defined in the service registry as well

# as those defined through ServiceEntries

# ALLOW_ANY is the default in 1.1. This means each pod will be able to make outbound requests

# to services outside of the mesh without any ServiceEntry.

# REGISTRY_ONLY was the default in 1.0. If this behavior is desired, set the value below to REGISTRY_ONLY.

outboundTrafficPolicy:

mode: ALLOW_ANY

# The namespace where globally shared configurations should be present.

# DestinationRules that apply to the entire mesh (e.g., enabling mTLS),

# default Sidecar configs, etc. should be added to this namespace.

# configRootNamespace: istio-config

# set the default set of namespaces to which services, service entries, virtual services, destination

# rules should be exported to. Currently only one value can be provided in this list. This value

# should be one of the following two options:

# * implies these objects are visible to all namespaces, enabling any sidecar to talk to any other sidecar.

# . implies these objects are visible to only to sidecars in the same namespace, or if imported as a Sidecar.egress.host

#defaultConfigVisibilitySettings:

#- '*'

sds:

# SDS enabled. IF set to true, mTLS certificates for the sidecars will be

# distributed through the SecretDiscoveryService instead of using K8S secrets to mount the certificates.

enabled: false

udsPath: ""

useTrustworthyJwt: false

useNormalJwt: false

# Configure the mesh networks to be used by the Split Horizon EDS.

#

# The following example defines two networks with different endpoints association methods.

# For `network1` all endpoints that their IP belongs to the provided CIDR range will be

# mapped to network1. The gateway for this network example is specified by its public IP

# address and port.

# The second network, `network2`, in this example is defined differently with all endpoints

# retrieved through the specified Multi-Cluster registry being mapped to network2. The

# gateway is also defined differently with the name of the gateway service on the remote

# cluster. The public IP for the gateway will be determined from that remote service (only

# LoadBalancer gateway service type is currently supported, for a NodePort type gateway service,

# it still need to be configured manually).

#

# meshNetworks:

# network1:

# endpoints:

# - fromCidr: "192.168.0.1/24"

# gateways:

# - address: 1.1.1.1

# port: 80

# network2:

# endpoints:

# - fromRegistry: reg1

# gateways:

# - registryServiceName: istio-ingressgateway.istio-system.svc.cluster.local

# port: 443

#

meshNetworks: {}

# Specifies the global locality load balancing settings.

# Locality-weighted load balancing allows administrators to control the distribution of traffic to

# endpoints based on the localities of where the traffic originates and where it will terminate.

# Please set either failover or distribute configuration but not both.

#

# localityLbSetting:

# distribute:

# - from: "us-central1/*"

# to:

# "us-central1/*": 80

# "us-central2/*": 20

#

# localityLbSetting:

# failover:

# - from: us-east

# to: eu-west

# - from: us-west

# to: us-east

localityLbSetting: {}

# Specifies whether helm test is enabled or not.

# This field is set to false by default, so 'helm template ...'

# will ignore the helm test yaml files when generating the template

enableHelmTest: false
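The autoInject notes in the values file above come down to a namespace label plus a per-pod annotation. Here is a minimal sketch of how I would apply them from the command line. The namespace `demo` and the Deployment `demo-app` are placeholders, not objects from this setup, and the helper name `inject_patch` is my own. The kubectl lines are left commented so the snippet can be read and run without a cluster. As far as I understand the default webhook configuration, the namespace label is still needed when autoInject is disabled.

```shell
# Hypothetical helper: print a patch that sets the injection annotation
# on a Deployment's pod template. $1 is "true" or "false".
inject_patch() {
    printf '{"spec":{"template":{"metadata":{"annotations":{"sidecar.istio.io/inject":"%s"}}}}}\n' "$1"
}

# Label the namespace so the injector webhook considers it (placeholder name):
# kubectl label namespace demo istio-injection=enabled

# With autoInject: disabled, opt a single workload in (placeholder names):
# kubectl -n demo patch deployment demo-app -p "$(inject_patch true)"

# With autoInject: enabled, opt a single workload out:
# kubectl -n demo patch deployment demo-app -p "$(inject_patch false)"
```

Patching the pod template triggers a rollout, so the new pods pass through the injector again.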

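# Changes to the subchart values files

# gateways changes

# Remove NodePort and use ClusterIP. If the gateways are deployed as a DaemonSet, it is best to set hostNetwork: true, so that the proxied TCP ports can be reached directly on the host IP and services are exposed externally without an Ingress in front.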

cd charts/gateways

vi values.yaml

#

# Gateways Configuration

# By default (if enabled) a pair of Ingress and Egress Gateways will be created for the mesh.

# You can add more gateways in addition to the defaults but make sure those are uniquely named

# and that NodePorts are not conflicting.

# Disable a specific gateway by setting `enabled` to false.

#

enabled: true

istio-ingressgateway:

enabled: true

#

# Secret Discovery Service (SDS) configuration for ingress gateway.

#

sds:

# If true, ingress gateway fetches credentials from SDS server to handle TLS connections.

enabled: false

# SDS server that watches kubernetes secrets and provisions credentials to ingress gateway.

# This server runs in the same pod as ingress gateway.

image: node-agent-k8s

resources:

requests:

cpu: 100m

memory: 128Mi

limits:

cpu: 2000m

memory: 1024Mi

labels:

app: istio-ingressgateway

istio: ingressgateway

autoscaleEnabled: true

autoscaleMin: 1

autoscaleMax: 5

# specify replicaCount when autoscaleEnabled: false

# replicaCount: 1

resources:

requests:

cpu: 100m

memory: 128Mi

limits:

cpu: 2000m

memory: 1024Mi

cpu:

targetAverageUtilization: 80

loadBalancerIP: ""

loadBalancerSourceRanges: []

externalIPs: []

# Adjust this to match the ingress service you have deployed; if ingressgateway itself serves as the ingress, this line can be deleted

serviceAnnotations: {kubernetes.io/ingress.class: traefik,traefik.ingress.kubernetes.io/affinity: "true",traefik.ingress.kubernetes.io/load-balancer-method: drr}

#serviceAnnotations: {}

podAnnotations: {}

type: ClusterIP #change to NodePort, ClusterIP or LoadBalancer if need be

#externalTrafficPolicy: Local #change to Local to preserve source IP or Cluster for default behaviour or leave commented out

ports:

## You can add custom gateway ports

# Note that AWS ELB will by default perform health checks on the first port

# on this list. Setting this to the health check port will ensure that health

# checks always work. https://github.com/istio/istio/issues/12503

- port: 15020

targetPort: 15020

name: status-port

- port: 80

targetPort: 80

name: http2

- port: 443

name: https

# Example of a port to add. Remove if not needed

- port: 31400

name: tcp

### PORTS FOR UI/metrics #####

## Disable if not needed

- port: 15029

targetPort: 15029

name: https-kiali

- port: 15030

targetPort: 15030

name: https-prometheus

- port: 15031

targetPort: 15031

name: https-grafana

- port: 15032

targetPort: 15032

name: https-tracing

# This is the port where sni routing happens

- port: 15443

targetPort: 15443

name: tls

#### MESH EXPANSION PORTS ########

# Pilot and Citadel MTLS ports are enabled in gateway - but will only redirect

# to pilot/citadel if global.meshExpansion settings are enabled.

# Delete these ports if mesh expansion is not enabled, to avoid

# exposing unnecessary ports on the web.

# You can remove these ports if you are not using mesh expansion

meshExpansionPorts:

- port: 15011

targetPort: 15011

name: tcp-pilot-grpc-tls

- port: 15004

targetPort: 15004

name: tcp-mixer-grpc-tls

- port: 8060

targetPort: 8060

name: tcp-citadel-grpc-tls

- port: 853

targetPort: 853

name: tcp-dns-tls

####### end MESH EXPANSION PORTS ######

##############

secretVolumes:

- name: ingressgateway-certs

secretName: istio-ingressgateway-certs

mountPath: /etc/istio/ingressgateway-certs

- name: ingressgateway-ca-certs

secretName: istio-ingressgateway-ca-certs

mountPath: /etc/istio/ingressgateway-ca-certs

### Advanced options ############

# Ports to explicitly check for readiness. If configured, the readiness check will expect a

# listener on these ports. A comma separated list is expected, such as "80,443".

#

# Warning: If you do not have a gateway configured for the ports provided, this check will always

# fail. This is intended for use cases where you always expect to have a listener on the port,

# such as 80 or 443 in typical setups.

applicationPorts: ""

env:

# A gateway with this mode ensures that pilot generates an additional

# set of clusters for internal services but without Istio mTLS, to

# enable cross cluster routing.

ISTIO_META_ROUTER_MODE: "sni-dnat"

nodeSelector: {}

tolerations: []

# Specify the pod anti-affinity that allows you to constrain which nodes

# your pod is eligible to be scheduled based on labels on pods that are

# already running on the node rather than based on labels on nodes.

# There are currently two types of anti-affinity:

# "requiredDuringSchedulingIgnoredDuringExecution"

# "preferredDuringSchedulingIgnoredDuringExecution"

# which denote “hard” vs. “soft” requirements, you can define your values

# in "podAntiAffinityLabelSelector" and "podAntiAffinityTermLabelSelector"

# correspondingly.

# For example:

# podAntiAffinityLabelSelector:

# - key: security

# operator: In

# values: S1,S2

# topologyKey: "kubernetes.io/hostname"

# This pod anti-affinity rule says that the pod requires not to be scheduled

# onto a node if that node is already running a pod with label having key

# “security” and value “S1”.

podAntiAffinityLabelSelector: []

podAntiAffinityTermLabelSelector: []

istio-egressgateway:

enabled: true

labels:

app: istio-egressgateway

istio: egressgateway

autoscaleEnabled: true

autoscaleMin: 1

autoscaleMax: 5

# specify replicaCount when autoscaleEnabled: false

# replicaCount: 1

resources:

requests:

cpu: 100m

memory: 128Mi

limits:

cpu: 2000m

memory: 256Mi

cpu:

targetAverageUtilization: 80

serviceAnnotations: {}

podAnnotations: {}

type: ClusterIP #change to NodePort or LoadBalancer if need be

ports:

- port: 80

name: http2

- port: 443

name: https

# This is the port where sni routing happens

- port: 15443

targetPort: 15443

name: tls

secretVolumes:

- name: egressgateway-certs

secretName: istio-egressgateway-certs

mountPath: /etc/istio/egressgateway-certs

- name: egressgateway-ca-certs

secretName: istio-egressgateway-ca-certs

mountPath: /etc/istio/egressgateway-ca-certs

#### Advanced options ########

env:

# Set this to "external" if and only if you want the egress gateway to

# act as a transparent SNI gateway that routes mTLS/TLS traffic to

# external services defined using service entries, where the service

# entry has resolution set to DNS, has one or more endpoints with

# network field set to "external". By default its set to "" so that

# the egress gateway sees the same set of endpoints as the sidecars

# preserving backward compatibility

# ISTIO_META_REQUESTED_NETWORK_VIEW: ""

# A gateway with this mode ensures that pilot generates an additional

# set of clusters for internal services but without Istio mTLS, to

# enable cross cluster routing.

ISTIO_META_ROUTER_MODE: "sni-dnat"

nodeSelector: {}

tolerations: []

# Specify the pod anti-affinity that allows you to constrain which nodes

# your pod is eligible to be scheduled based on labels on pods that are

# already running on the node rather than based on labels on nodes.

# There are currently two types of anti-affinity:

# "requiredDuringSchedulingIgnoredDuringExecution"

# "preferredDuringSchedulingIgnoredDuringExecution"

# which denote “hard” vs. “soft” requirements, you can define your values

# in "podAntiAffinityLabelSelector" and "podAntiAffinityTermLabelSelector"

# correspondingly.

# For example:

# podAntiAffinityLabelSelector:

# - key: security

# operator: In

# values: S1,S2

# topologyKey: "kubernetes.io/hostname"

# This pod anti-affinity rule says that the pod requires not to be scheduled

# onto a node if that node is already running a pod with label having key

# “security” and value “S1”.

podAntiAffinityLabelSelector: []

podAntiAffinityTermLabelSelector: []

# Mesh ILB gateway creates a gateway of type InternalLoadBalancer,

# for mesh expansion. It exposes the mtls ports for Pilot,CA as well

# as non-mtls ports to support upgrades and gradual transition.

istio-ilbgateway:

enabled: false

labels:

app: istio-ilbgateway

istio: ilbgateway

autoscaleEnabled: false

autoscaleMin: 1

autoscaleMax: 10

# specify replicaCount when autoscaleEnabled: false

# replicaCount: 1

cpu:

targetAverageUtilization: 80

resources:

requests:

cpu: 800m

memory: 512Mi

#limits:

# cpu: 1800m

# memory: 256Mi

loadBalancerIP: ""

serviceAnnotations:

cloud.google.com/load-balancer-type: "internal"

podAnnotations: {}

type: ClusterIP

ports:

## You can add custom gateway ports - google ILB default quota is 5 ports,

- port: 15011

name: grpc-pilot-mtls

# Insecure port - only for migration from 0.8. Will be removed in 1.1

- port: 15010

name: grpc-pilot

- port: 8060

targetPort: 8060

name: tcp-citadel-grpc-tls

# Port 5353 is forwarded to kube-dns

- port: 5353

name: tcp-dns

secretVolumes:

- name: ilbgateway-certs

secretName: istio-ilbgateway-certs

mountPath: /etc/istio/ilbgateway-certs

- name: ilbgateway-ca-certs

secretName: istio-ilbgateway-ca-certs

mountPath: /etc/istio/ilbgateway-ca-certs

nodeSelector: {}

tolerations: []

# kiali changes

ingress:
  enabled: true
  ## Used to create an Ingress record.
  hosts:
    - kiali.xxxxx.com
  annotations:
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  tls:
    # Secrets must be manually created in the namespace.
    # - secretName: kiali-tls
    #   hosts:
    #     - kiali.local

# Enable password login

createDemoSecret: true

# tracing changes

ingress:
  enabled: true
  # Used to create an Ingress record.
  hosts:
    # - tracing.xxxx.com
  annotations:
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  tls:
    # Secrets must be manually created in the namespace.
    # - secretName: tracing-tls
    #   hosts:
    #     - tracing.local

# mixer changes

policy:
  # if policy is enabled, global.disablePolicyChecks takes effect.
  enabled: true # enable policy checks

cd helm/istio/charts/gateways/templates

vi service.yaml

{{- range $key, $spec := .Values }}

{{- if ne $key "enabled" }}

{{- if $spec.enabled }}

apiVersion: v1

kind: Service

metadata:

name: {{ $key }}

namespace: {{ $spec.namespace | default $.Release.Namespace }}

annotations:

{{- range $key, $val := $spec.serviceAnnotations }}

{{ $key }}: {{ $val | quote }}

{{- end }}

labels:

chart: {{ template "gateway.chart" $ }}

heritage: {{ $.Release.Service }}

release: {{ $.Release.Name }}

{{- range $key, $val := $spec.labels }}

{{ $key }}: {{ $val }}

{{- end }}

spec:

{{- if $spec.loadBalancerIP }}

loadBalancerIP: "{{ $spec.loadBalancerIP }}"

{{- end }}

{{- if $spec.loadBalancerSourceRanges }}

loadBalancerSourceRanges:

{{ toYaml $spec.loadBalancerSourceRanges | indent 4 }}

{{- end }}

{{- if $spec.externalTrafficPolicy }}

externalTrafficPolicy: {{$spec.externalTrafficPolicy }}

{{- end }}

{{- if $spec.externalIPs }}

externalIPs:

{{ toYaml $spec.externalIPs | indent 4 }}

{{- end }}

type: {{ .type }}

selector:

release: {{ $.Release.Name }}

{{- range $key, $val := $spec.labels }}

{{ $key }}: {{ $val }}

{{- end }}

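# Serve external traffic directly on the pod IPs and do not allocate a clusterIP; otherwise a TCP proxy has to sit in front, and every Service change becomes inconvenient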

clusterIP: None

ports:

{{- range $key, $val := $spec.ports }}

-

{{- range $pkey, $pval := $val }}

{{ $pkey}}: {{ $pval }}

{{- end }}

{{- end }}

{{- if $.Values.global.meshExpansion.enabled }}

{{- range $key, $val := $spec.meshExpansionPorts }}

-

{{- range $pkey, $pval := $val }}

{{ $pkey}}: {{ $pval }}

{{- end }}

{{- end }}

{{- end }}

---

{{- end }}

{{- end }}

{{- end }}

cd istio-1.2.5/install/kubernetes/helm/istio-cni

hub: docker.io/istio

tag: 1.2.5

pullPolicy: Always

logLevel: info

# Configuration file to insert istio-cni plugin configuration

# by default this will be the first file found in the cni-conf-dir

# Example

# cniConfFileName: 10-calico.conflist

# CNI bin and conf dir override settings

# defaults:

# CNI binary directory on the host

cniBinDir: /apps/cni/bin

# CNI configuration directory on the host

cniConfDir: /etc/cni/net.d

# Edit the list of namespaces to exclude below

excludeNamespaces:
  - istio-system
  - monitoring
  - clusterstorage
  - consul
  - kubernetes-dashboard
  - kube-system

# Edit istio-cni.yaml to add tolerations; if you have not set up the corresponding taint on your nodes, you can skip this change

# Add the following at line 101

- effect: NoSchedule
  key: node-role.kubernetes.io/ingress
  operator: Equal

# certmanager is not enabled

cd istio-1.2.5/

# Create the namespace

kubectl apply -f install/kubernetes/namespace.yaml

# Deploy the CRDs

helm install install/kubernetes/helm/istio-init --name-template istio-init --namespace istio-system

# Verify that the CRDs have been deployed

kubectl get crds | grep 'istio.io\|certmanager.k8s.io' | wc -l

# [root@]~]# kubectl get crds | grep 'istio.io\|certmanager.k8s.io' | wc -l

# 23
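The CRDs are created by jobs, so the count can lag behind the helm install for a short while. The sketch below wraps the same grep in a small polling loop. The function names and the retry numbers are my own choices; the expected value 23 is simply the count seen above with certmanager disabled.

```shell
# Count matching CRD names from `kubectl get crds` output supplied on stdin.
count_istio_crds() {
    grep -Ec 'istio\.io|certmanager\.k8s\.io'
}

# Hypothetical helper: poll until the expected number of CRDs is registered.
# $1 = expected count, $2 = maximum attempts (default 30), 2 seconds apart.
wait_for_istio_crds() {
    expected=$1
    attempts=${2:-30}
    while [ "$attempts" -gt 0 ]; do
        found=$(kubectl get crds | count_istio_crds)
        [ "$found" -ge "$expected" ] && return 0
        attempts=$((attempts - 1))
        sleep 2
    done
    return 1
}

# Usage against a live cluster:
# wait_for_istio_crds 23 && echo "CRDs ready"
```

`grep -c` prints the number of matching lines directly, which gives the same figure as piping grep into `wc -l`.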

# Deploy Istio

helm install install/kubernetes/helm/istio --name-template istio --namespace istio-system \

--set istio_cni.enabled=true \

--set istio-cni.cniBinDir=/apps/cni/bin \

--set istio-cni.excludeNamespaces={"istio-system,monitoring,clusterstorage,consul,kubernetes-dashboard,kube-system"}

# These parameters must not be omitted; the istio-sidecar-injector ConfigMap uses them
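Helm's `--set` accepts a list as `{a,b,c}`, and the quoting in the command above keeps the shell from brace-expanding it first. As a sketch of how the namespace list could be built from individual arguments instead of one hand-written string, the hypothetical helper `helm_set_list` below joins its arguments into that syntax.

```shell
# Join all arguments into Helm's list syntax for --set, for example {a,b,c}.
helm_set_list() {
  joined=""
  for item in "$@"; do
    if [ -z "$joined" ]; then
      joined="$item"
    else
      joined="$joined,$item"
    fi
  done
  printf '{%s}\n' "$joined"
}
```

It could then be used as `--set "istio-cni.excludeNamespaces=$(helm_set_list istio-system monitoring kube-system)"`; the surrounding double quotes matter, because an unquoted `{a,b}` would be expanded by bash into separate words.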

# Wait for the deployment to finish

# Check the deployment status

[root@]~]#kubectl get svc -n istio-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana ClusterIP 10.64.194.111 <none> 3000/TCP 20h

istio-citadel ClusterIP 10.64.247.166 <none> 8060/TCP,15014/TCP 20h

istio-egressgateway ClusterIP None <none> 80/TCP,443/TCP,15443/TCP 20h

istio-galley ClusterIP 10.64.180.50 <none> 443/TCP,15014/TCP,9901/TCP 20h

istio-ingressgateway ClusterIP None <none> 15020/TCP,80/TCP,443/TCP,31400/TCP,15029/TCP,15030/TCP,15031/TCP,15032/TCP,15443/TCP 20h

istio-pilot ClusterIP 10.64.71.214 <none> 15010/TCP,15011/TCP,8080/TCP,15014/TCP 20h

istio-policy ClusterIP 10.64.235.77 <none> 9091/TCP,15004/TCP,15014/TCP 20h

istio-sidecar-injector ClusterIP 10.64.127.238 <none> 443/TCP 20h

istio-telemetry ClusterIP 10.64.218.132 <none> 9091/TCP,15004/TCP,15014/TCP,42422/TCP 20h

jaeger-agent ClusterIP None <none> 5775/UDP,6831/UDP,6832/UDP 20h

jaeger-collector ClusterIP 10.64.176.255 <none> 14267/TCP,14268/TCP 20h

jaeger-query ClusterIP 10.64.25.179 <none> 16686/TCP 20h

kiali ClusterIP 10.64.19.25 <none> 20001/TCP 20h

prometheus ClusterIP 10.64.81.141 <none> 9090/TCP 20h

tracing ClusterIP 10.64.196.70 <none> 80/TCP 20h

zipkin ClusterIP 10.64.2.197 <none> 9411/TCP 20h

# Make sure the corresponding Kubernetes pods are deployed and that their STATUS is Running:

[root@]~]#kubectl get pods -n istio-system

NAME READY STATUS RESTARTS AGE

grafana-846c699c5c-xdm42 1/1 Running 0 20h

istio-citadel-68776b8c6f-k6dxd 1/1 Running 0 20h

istio-cni-node-4d2f2 1/1 Running 0 26h

istio-cni-node-68jzs 1/1 Running 0 26h

istio-cni-node-lptml 1/1 Running 0 26h

istio-cni-node-p74bp 1/1 Running 0 26h

istio-cni-node-p7v2j 1/1 Running 0 26h

istio-cni-node-z6mfn 1/1 Running 0 26h

istio-cni-node-zdzf2 1/1 Running 0 26h

istio-egressgateway-b488867bf-dzd6l 1/1 Running 0 20h

istio-galley-9795c8ff8-62cpz 1/1 Running 0 20h

istio-ingressgateway-54cf955579-227qt 1/1 Running 0 20h

istio-init-crd-10-bmndt 0/1 Completed 0 26h

istio-init-crd-11-rv85n 0/1 Completed 0 26h

istio-init-crd-12-w77hp 0/1 Completed 0 26h

istio-pilot-5f67fbd648-jr5wl 2/2 Running 0 20h

istio-policy-65fb44b85b-z2j5q 2/2 Running 2 20h

istio-sidecar-injector-55ff84f69f-kqxl5 1/1 Running 0 20h

istio-telemetry-799c785c8d-nzlf8 2/2 Running 3 20h

istio-tracing-6748c7c4f5-nb94f 1/1 Running 0 20h

kiali-56b5466944-d7rrp 1/1 Running 0 20h

prometheus-5b68448dc9-92cg2 1/1 Running 0 20h
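Scanning the STATUS column by eye gets tedious on larger clusters. The sketch below filters the same `kubectl get pods` output and reports anything that is neither Running nor Completed. `check_pods_ready` is a hypothetical helper name, and it only inspects the STATUS column (the third field), not READY, so a pod shown as `1/2 Running` would still pass.

```shell
# Reads `kubectl get pods` output on stdin, skips the header line, prints the
# name of every pod whose STATUS is neither Running nor Completed, and
# returns non-zero if at least one such pod was found.
check_pods_ready() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1; bad = 1 }
       END { exit bad }'
}
```

A typical call would be `kubectl get pods -n istio-system | check_pods_ready`.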

# Deploy istio-cni

helm install install/kubernetes/helm/istio-cni --name-template istio-cni --namespace istio-system

# Wait until the deployment is healthy

# For automatic injection, label the namespace

kubectl label namespace default istio-injection=enabled

kubectl get namespace -L istio-injection

# Remove the label

kubectl label namespace default istio-injection-

# Enable policy checks

kubectl -n istio-system get cm istio -o jsonpath="{@.data.mesh}" | grep disablePolicyChecks

# If this returns disablePolicyChecks: true, change it with the commands below; if it already returns disablePolicyChecks: false, leave it unchanged

kubectl -n istio-system get cm istio -o jsonpath="{@.data.mesh}" | sed -e "s/disablePolicyChecks: true/disablePolicyChecks: false/" > /tmp/mesh.yaml

kubectl -n istio-system create cm istio -o yaml --dry-run --from-file=mesh=/tmp/mesh.yaml | kubectl replace -f -

rm -f /tmp/mesh.yaml

kubectl -n istio-system get cm istio -o jsonpath="{@.data.mesh}" | grep disablePolicyChecks

# Example: nginx

# Edit nginx-deployment.yaml

# Add the label istio: ingressgateway

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

spec:

replicas: 2

selector:

matchLabels:

k8s-app: nginx

template:

metadata:

labels:

k8s-app: nginx

istio: ingressgateway

spec:

containers:

- name: nginx

##############################

# Edit the Service

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: nginx

istio: ingressgateway

name: nginx

annotations:

kubernetes.io/ingress.class: traefik

traefik.ingress.kubernetes.io/affinity: "true"

traefik.ingress.kubernetes.io/load-balancer-method: drr

"consul.hashicorp.com/service-port": "http-metrics"

spec:

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800

selector:

k8s-app: nginx

istio: ingressgateway

ports:

- protocol: TCP

port: 80

name: web

- protocol: TCP

port: 8080

name: vts

- protocol: TCP

port: 9913

name: http-metrics

type: ClusterIP

######################

istioctl kube-inject -f nginx-deployment.yaml --injectConfigMapName istio-sidecar-injector | kubectl apply -f - -n default

# Or write the injected manifest to a file first

istioctl kube-inject -f nginx-deployment.yaml -o deployment-injected.yaml --injectConfigMapName istio-sidecar-injector

kubectl apply -f deployment-injected.yaml -n default
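When the injected manifest is written to a file, it can be checked before it is applied. The sketch below looks for a container named `istio-proxy`, which is the name the sidecar container carries in injected manifests; `has_sidecar` is a hypothetical helper name, and the pattern is a plain text match rather than a real YAML parse.

```shell
# Succeeds if the manifest file given as $1 contains a line of the form
# "name: istio-proxy" (optionally preceded by a list dash), which indicates
# that the Envoy sidecar container was injected.
has_sidecar() {
  grep -Eq '^[[:space:]]*-?[[:space:]]*name:[[:space:]]*istio-proxy[[:space:]]*$' "$1"
}
```

For example, `has_sidecar deployment-injected.yaml && kubectl apply -f deployment-injected.yaml -n default` would skip the apply if injection silently produced no sidecar.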

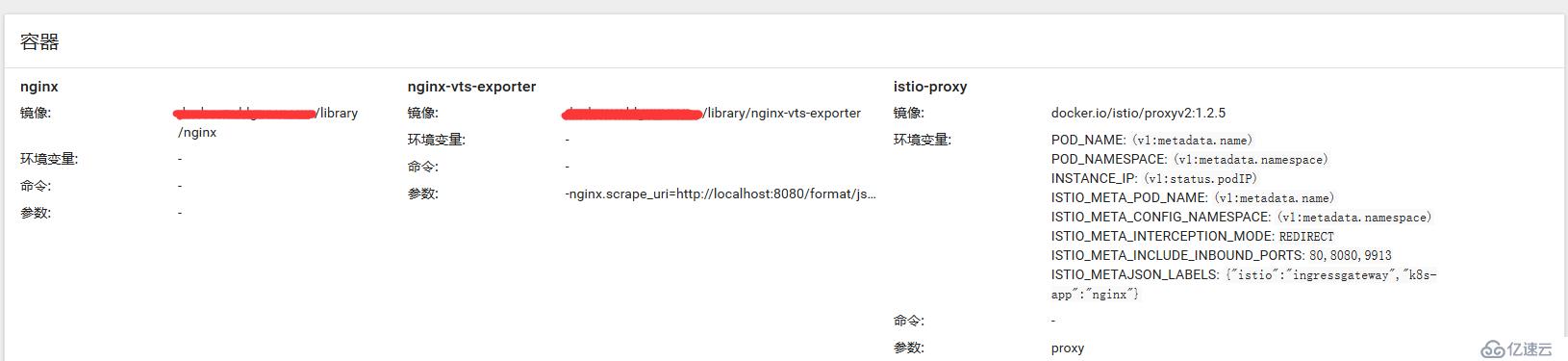

# Check that the deployment is healthy

[root@]~]#kubectl get pod -n default | grep nginx

nginx-6db8f49c8c-8m2zh 3/3 Running 0 25h

nginx-6db8f49c8c-d4kws 3/3 Running 0 25h

# Delete the old Ingress

kubectl delete -f nginx-ingress.yaml

# Create the gateway resources

vi nginx-web-gateway.yaml

apiVersion: networking.istio.io/v1alpha3

kind: Gateway

metadata:

name: nginx-web-gateway

namespace: default

spec:

selector:

istio: ingressgateway # use Istio default gateway implementation

servers:

- port:

number: 80

name: web

protocol: HTTP

hosts:

- "nginx.xxxx.com"

---

apiVersion: networking.istio.io/v1alpha3

kind: VirtualService

metadata:

name: nginx-web

namespace: default

spec:

hosts:

- "nginx.xxxx.com"

gateways:

- nginx-web-gateway

http:

- match:

- port: 80

route:

- destination:

host: nginx

weight: 100

---

# Load-balancing policy for traffic forwarded to the backend

# See https://istio.io/zh/docs/reference/config/istio.networking.v1alpha3/

apiVersion: networking.istio.io/v1alpha3

kind: DestinationRule

metadata:

name: nginx-ratings

namespace: default

spec:

host: nginx

trafficPolicy:

loadBalancer:

consistentHash:

httpCookie:

name: user

ttl: 0s

# Apply

kubectl apply -f nginx-web-gateway.yaml

# Check whether the service is reachable from outside

[root@]~]#kubectl get pod -o wide -n istio-system | grep istio-ingressgateway

istio-ingressgateway-54cf955579-227qt 1/1 Running 0 21h 10.65.5.52 node03 <none> <none>

# Bind the gateway pod IP to the domain in the hosts file

10.65.5.52 nginx.xxxx.com

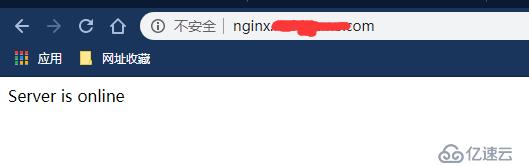

# Check whether the page opens in a browser

# It opens normally
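The same check can be made from a terminal without editing the hosts file, since curl's `--resolve` option pins a `host:port` pair to a given address for a single request. A minimal sketch follows; `resolve_arg` and `curl_via_gateway` are hypothetical helper names, and the IP is the gateway pod IP from the output above.

```shell
# Build the value for curl --resolve, which has the form host:port:address.
resolve_arg() {
  printf '%s:%s:%s\n' "$1" "$2" "$3"
}

# Request http://<host>:<port>/ through the given gateway IP and print only
# the HTTP status code. Arguments: <host> <port> <gateway-ip>
curl_via_gateway() {
  curl -sS -o /dev/null -w '%{http_code}\n' \
    --resolve "$(resolve_arg "$1" "$2" "$3")" \
    "http://$1:$2/"
}
```

Running `curl_via_gateway nginx.xxxx.com 80 10.65.5.52` sends the request with the correct Host header, which is what the Gateway and VirtualService match on.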

# The service is not yet reachable from outside the cluster; modify the nginx Ingress so that it can serve external traffic

# Edit the Ingress

vi nginx-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: nginx

namespace: istio-system # namespace where the Istio gateway lives

annotations:

kubernetes.io/ingress.class: traefik

traefik.frontend.rule.type: PathPrefixStrip

spec:

rules:

- host: nginx.xxxx.com

http:

paths:

- path: /

backend:

serviceName: istio-ingressgateway # the backend is now the Istio gateway service

servicePort: 80

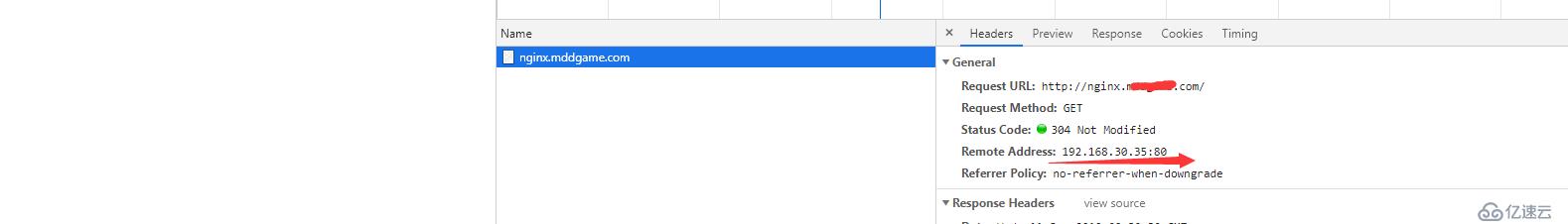

# Remove the hosts entry added earlier and access the site through DNS resolution

# Traffic now goes through the Ingress as expected

# nginx log

192.168.20.94 - [10/Sep/2019:16:02:20 +0800] nginx.xxxx.com "GET / HTTP/1.1" 304 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:69.0) Gecko/20100101 Firefox/69.0" "192.168.20.94,192.168.30.35" 127.0.0.1 ups_add: ups_resp_time: request_time: 0.000 ups_status: request_body: upstream_response_length []

# istio-ingressgateway service log

[2019-09-11T09:30:30.820Z] "GET / HTTP/1.1" 304 - "-" "-" 0 0 0 0 "192.168.20.94,192.168.30.35" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36" "34555d82-f202-4ae0-8896-f87105c562e1" "nginx.xxxx.com" "10.65.5.30:80" outbound|80||nginx.default.svc.xxxx.local - 10.65.5.52:80 192.168.30.35:46178 -
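The gateway log line is dense, so a short filter helps when following many requests. The sketch below splits each line on double quotes and prints the response code, the requested authority and the upstream host. It assumes the log layout seen in the line above and that no quoted field (such as the user agent) itself contains a double quote; `summarize_gateway_log` is a hypothetical helper name.

```shell
# For each access-log line on stdin, print "<response code> <authority> <upstream host>".
# With '"' as the field separator, field 3 holds " <code> <flags> ",
# field 14 the requested authority and field 16 the upstream host.
summarize_gateway_log() {
  awk -F'"' '{ split($3, status, " "); print status[1], $14, $16 }'
}
```

It could be fed from `kubectl logs -n istio-system <istio-ingressgateway pod> | summarize_gateway_log`, where the pod name is whatever `kubectl get pod` reports.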

# istio-ingressgateway can be configured with multiple domains

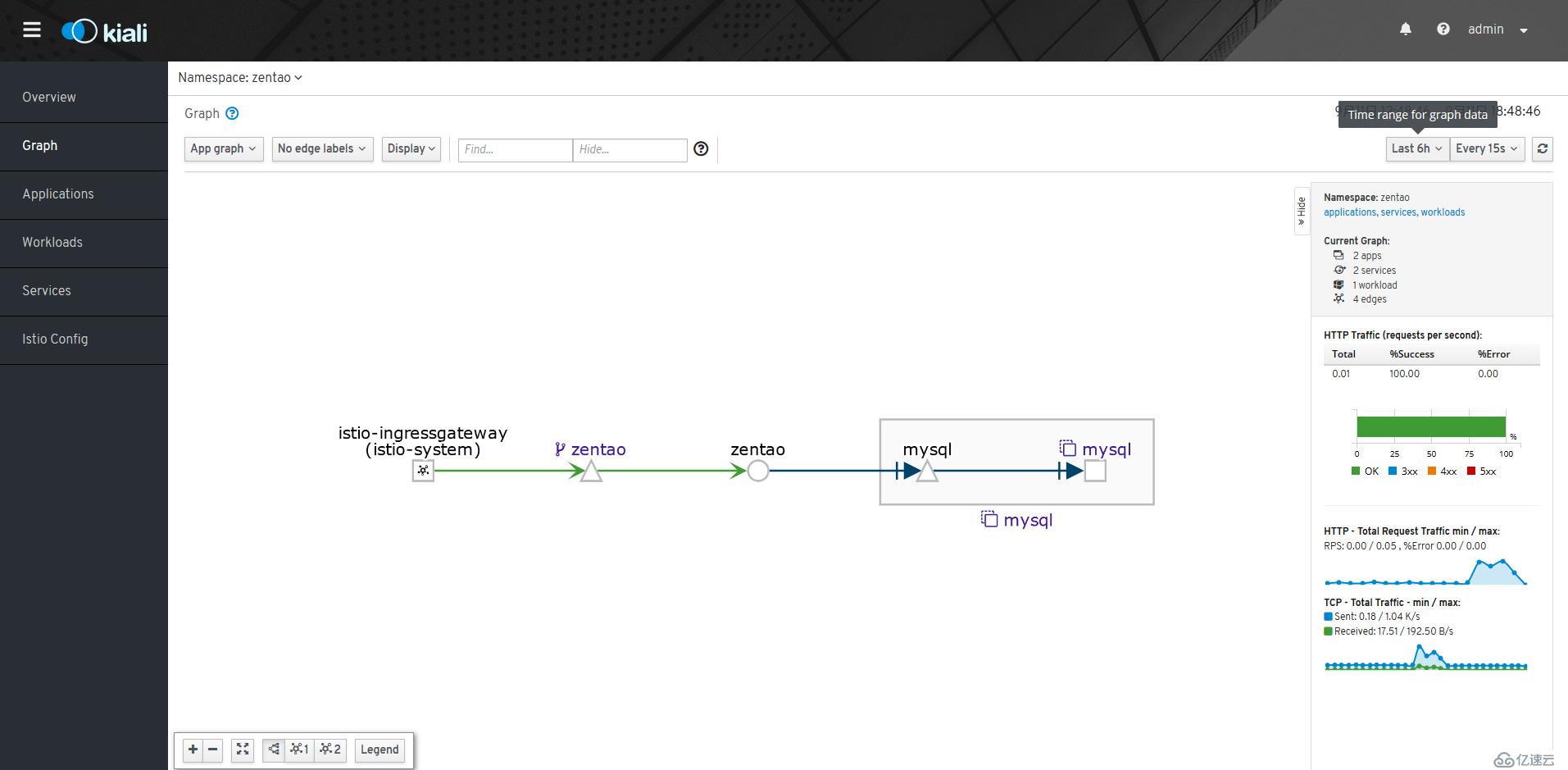

apiVersion: networking.istio.io/v1alpha3

kind: Gateway

metadata:

name: zentao-web-gateway

namespace: zentao

spec:

selector:

istio: ingressgateway # use Istio default gateway implementation

servers:

- port:

number: 80

name: http

protocol: HTTP

hosts:

- "zentao.xxxx.com"

---

apiVersion: networking.istio.io/v1alpha3

kind: VirtualService

metadata:

name: zentao-web

namespace: zentao

spec:

hosts:

- "zentao.xxxx.com"

gateways:

- zentao-web-gateway

http:

- match:

- port: 80

route:

- destination:

host: zentao

weight: 100

---

apiVersion: networking.istio.io/v1alpha3

kind: DestinationRule

metadata:

name: zentao-ratings

namespace: zentao

spec:

host: zentao

trafficPolicy:

loadBalancer:

consistentHash:

httpCookie:

name: user

ttl: 0s

# Ingress

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: zentao

namespace: istio-system

annotations:

kubernetes.io/ingress.class: traefik

traefik.frontend.rule.type: PathPrefixStrip

spec:

rules:

- host: zentao.xxxx.com

http:

paths:

- path: /

backend:

serviceName: istio-ingressgateway

servicePort: 80
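Because the nginx and zentao manifests differ only in name, namespace, host and backend, the Gateway and VirtualService pair can be generated from a template. The sketch below is one way to do that with a shell heredoc. `render_gateway` is a hypothetical helper name, and the indentation is a reconstruction based on the Istio `networking.istio.io/v1alpha3` schema, since the listings above have lost theirs.

```shell
# Print a Gateway + VirtualService pair that routes one host on port 80
# to one backend Service.
# Arguments: <name> <namespace> <host> <backend-service>
render_gateway() {
  name="$1"; ns="$2"; host="$3"; svc="$4"
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: ${name}-web-gateway
  namespace: ${ns}
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "${host}"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: ${name}-web
  namespace: ${ns}
spec:
  hosts:
  - "${host}"
  gateways:
  - ${name}-web-gateway
  http:
  - match:
    - port: 80
    route:
    - destination:
        host: ${svc}
      weight: 100
EOF
}
```

For instance, `render_gateway zentao zentao zentao.xxxx.com zentao | kubectl apply -f -` would produce the same two resources as the zentao example, without the DestinationRule.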

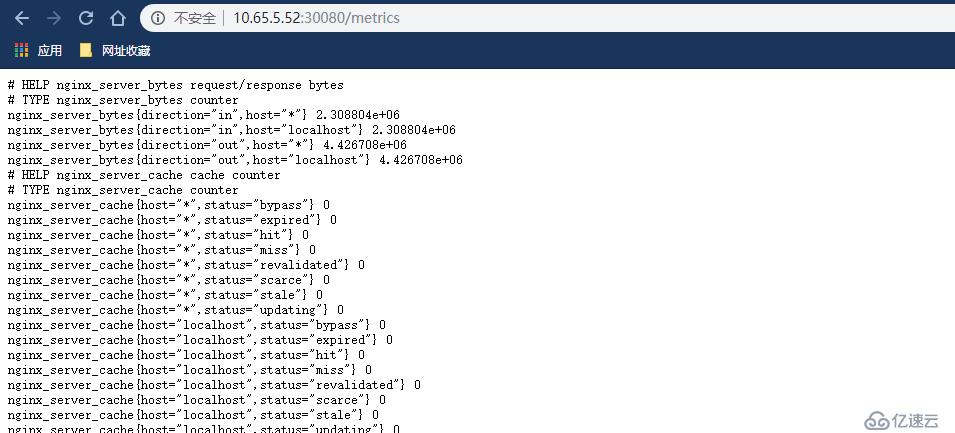

# Serving on a port other than 80

# Proxy the nginx metrics endpoint for monitoring

apiVersion: networking.istio.io/v1alpha3

kind: Gateway

metadata:

name: metrics-gateway

namespace: default

spec:

selector:

istio: ingressgateway # use Istio default gateway implementation

servers:

- port:

number: 30080

name: http-metrics

protocol: HTTP

hosts:

- "*"

---

apiVersion: networking.istio.io/v1alpha3

kind: VirtualService

metadata:

name: metrics-tcp

namespace: default

spec:

hosts:

- "*"

gateways:

- metrics-gateway

http:

- match:

- port: 30080

route:

- destination:

host: nginx

port:

number: 9913

weight: 100

# Check whether the gateway pod is listening on port 30080

[root@]~]#kubectl get pod -o wide -n istio-system | grep istio-ingressgateway

istio-ingressgateway-54cf955579-227qt 1/1 Running 0 21h 10.65.5.52 node03 <none> <none>

# Enter the pod

[root@]~]#kubectl exec -ti istio-ingressgateway-54cf955579-227qt -n istio-system -- /bin/bash

root@istio-ingressgateway-54cf955579-227qt:/# netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:15000 0.0.0.0:* LISTEN 98/envoy

tcp 0 0 0.0.0.0:30080 0.0.0.0:* LISTEN 98/envoy

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 98/envoy

tcp 0 0 0.0.0.0:15090 0.0.0.0:* LISTEN 98/envoy

tcp6 0 0 :::15020 :::* LISTEN 1/pilot-agent

# Port 30080 is now listening
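The listening check can also be scripted rather than read off the netstat table. The sketch below inspects `netstat -tnl` style output for a LISTEN socket on a given port, handling both the IPv4 (`0.0.0.0:30080`) and IPv6 (`:::15020`) address forms shown above. `port_listening` is a hypothetical helper name.

```shell
# Reads `netstat -tnl` (or -tnlp) output on stdin and succeeds if some socket
# in state LISTEN is bound to port $1. The port is taken as the part of the
# local address (field 4) after the last colon.
port_listening() {
  awk -v port="$1" '$6 == "LISTEN" { n = split($4, a, ":"); if (a[n] == port) found = 1 }
                    END { exit !found }'
}
```

From outside the pod this could be combined as `kubectl exec <gateway pod> -n istio-system -- netstat -tnl | port_listening 30080`.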

# Access via IP:port

http://10.65.5.52:30080/metrics

# The endpoint responds normally

# Log printed by istio-ingressgateway

[2019-09-11T09:43:42.947Z] "GET /favicon.ico HTTP/1.1" 200 - "-" "-" 0 152 1 1 "192.168.20.94" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36" "1c2b2699-6167-4b9f-ba7a-4071216dc742" "10.65.5.52:30080" "10.65.6.216:9913" outbound|9913||nginx.default.svc.xxxx.local - 10.65.5.52:30080 192.168.20.94:63024 -

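This way haproxy plus DNS can be used to expose TCP services externally. If the istio-ingressgateway Service uses a ClusterIP, be sure to modify it so that it exposes an externally reachable IP.

When the gateway is deployed as a DaemonSet, it is best to set hostNetwork: true, so that a TCP proxy can reach the proxied port directly on the host IP and serve external traffic in place of the Ingress.

# istio-1.2.5/samples contains examples that can be used to test canary releases

helm uninstall istio --namespace istio-system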

helm uninstall istio-init --namespace istio-system

helm uninstall istio-cni --namespace istio-system

kubectl delete ns istio-system
