1. pom.xml configuration: exclude Spring Boot's default spring-boot-starter-logging and add spring-boot-starter-log4j2

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
    <exclusions>
        <exclusion>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-log4j2</artifactId>
</dependency>

2. Application configuration: application.yaml

# logging
logging:
  config: classpath:log4j2.xml

3. log4j2.xml configuration

<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="OFF">
    <Properties>
        <property name="LOG_HOME">D:/logs</property>
    </Properties>
    <Appenders>
        <Console name="console" target="SYSTEM_OUT" immediateFlush="true">
            <ThresholdFilter level="debug" onMatch="ACCEPT" onMismatch="DENY" />
            <PatternLayout pattern="[%-5p] [%d{yyyy-MM-dd HH:mm:ss SSS}] %c - %m%n" />
        </Console>
        <RollingRandomAccessFile name="infoLog" immediateFlush="true"
            fileName="${LOG_HOME}/infoService.log"
            filePattern="${LOG_HOME}/infoService.log.%d{yyyy-MM-dd}.log.gz">
            <PatternLayout>
                <pattern>[%-5p] [%d{yyyy-MM-dd HH:mm:ss SSS}] %c - %m%n</pattern>
            </PatternLayout>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true" />
            </Policies>
            <DefaultRolloverStrategy>
                <Delete basePath="${LOG_HOME}" maxDepth="1">
                    <IfFileName glob="infoService*.gz" />
                    <IfLastModified age="7d" />
                </Delete>
            </DefaultRolloverStrategy>
            <ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY" />
        </RollingRandomAccessFile>
        <RollingRandomAccessFile name="errorLog" immediateFlush="true"
            fileName="${LOG_HOME}/errorService.log"
            filePattern="${LOG_HOME}/errorService.log.%d{yyyy-MM-dd}.log.gz">
            <PatternLayout>
                <pattern>[%-5p] [%d{yyyy-MM-dd HH:mm:ss SSS}] %c - %m%n</pattern>
            </PatternLayout>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true" />
            </Policies>
            <DefaultRolloverStrategy>
                <Delete basePath="${LOG_HOME}" maxDepth="1">
                    <IfFileName glob="errorService*.gz" />
                    <IfLastModified age="7d" />
                </Delete>
            </DefaultRolloverStrategy>
            <ThresholdFilter level="error" onMatch="ACCEPT" onMismatch="DENY" />
        </RollingRandomAccessFile>
        <RollingRandomAccessFile name="fatalLog" immediateFlush="true"
            fileName="${LOG_HOME}/fatalService.log"
            filePattern="${LOG_HOME}/fatalService.log.%d{yyyy-MM-dd}.log.gz">
            <PatternLayout>
                <pattern>[%-5p] [%d{yyyy-MM-dd HH:mm:ss SSS}] %c - %m%n</pattern>
            </PatternLayout>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true" />
            </Policies>
            <DefaultRolloverStrategy>
                <Delete basePath="${LOG_HOME}" maxDepth="1">
                    <IfFileName glob="fatalService*.gz" />
                    <IfLastModified age="7d" />
                </Delete>
            </DefaultRolloverStrategy>
            <ThresholdFilter level="fatal" onMatch="ACCEPT" onMismatch="DENY" />
        </RollingRandomAccessFile>
        <RollingRandomAccessFile name="druidSqlLog" immediateFlush="true"
            fileName="${LOG_HOME}/druidSql.log"
            filePattern="${LOG_HOME}/druidSql.log.%d{yyyy-MM-dd}.log.gz">
            <PatternLayout>
                <pattern>[%-5p] [%d{yyyy-MM-dd HH:mm:ss SSS}] %c - %m%n</pattern>
            </PatternLayout>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true" />
            </Policies>
            <DefaultRolloverStrategy>
                <Delete basePath="${LOG_HOME}" maxDepth="1">
                    <IfFileName glob="druidSql*.gz" />
                    <IfLastModified age="7d" />
                </Delete>
            </DefaultRolloverStrategy>
        </RollingRandomAccessFile>
    </Appenders>
    <Loggers>
        <!-- Root logger settings -->
        <root level="info">
            <appender-ref ref="console" />
            <appender-ref ref="infoLog" />
            <appender-ref ref="errorLog" />
            <appender-ref ref="fatalLog" />
        </root>
        <logger name="druid.sql.Statement" level="debug" additivity="false">
            <appender-ref ref="druidSqlLog" />
        </logger>
        <logger name="druid.sql.ResultSet" level="debug" additivity="false">
            <appender-ref ref="druidSqlLog" />
        </logger>
    </Loggers>
</Configuration>
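
One detail of the configuration above is easy to miss: a ThresholdFilter accepts events at its configured level and anything more severe, so the appender names do not imply strict separation by level. The following is a minimal sketch, in plain Java with no Log4j2 dependency, that models which root-logger appenders would receive an event of a given level. The class name ThresholdRouting and the method appendersFor are hypothetical names introduced only for this illustration; the appender names, thresholds and root level are copied from the configuration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ThresholdRouting {
    // Log4j2 standard levels, ordered from least to most severe.
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR, FATAL }

    // Appender name -> ThresholdFilter level, for the appenders attached to the root logger.
    static final Map<String, Level> APPENDER_THRESHOLDS = new LinkedHashMap<>();
    static {
        APPENDER_THRESHOLDS.put("console", Level.DEBUG);
        APPENDER_THRESHOLDS.put("infoLog", Level.INFO);
        APPENDER_THRESHOLDS.put("errorLog", Level.ERROR);
        APPENDER_THRESHOLDS.put("fatalLog", Level.FATAL);
    }

    // The root logger is declared with level="info".
    static final Level ROOT_LEVEL = Level.INFO;

    // Returns the appenders that would write an event of the given level.
    static List<String> appendersFor(Level eventLevel) {
        List<String> result = new ArrayList<>();
        // Events below the root logger's level never reach any appender.
        if (eventLevel.compareTo(ROOT_LEVEL) < 0) {
            return result;
        }
        for (Map.Entry<String, Level> entry : APPENDER_THRESHOLDS.entrySet()) {
            // A threshold filter accepts the configured level and anything more severe.
            if (eventLevel.compareTo(entry.getValue()) >= 0) {
                result.add(entry.getKey());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (Level level : Level.values()) {
            System.out.println(level + " -> " + appendersFor(level));
        }
    }
}
```

Under this model an ERROR event is written to infoService.log as well as errorService.log, and a FATAL event to all three files. If each file should hold only its own level, a different filter arrangement would be needed; that is outside what this configuration does.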

4. Druid configuration

# mysql, druid
spring:
  datasource:
    type: com.alibaba.druid.pool.DruidDataSource
    driverClassName: com.mysql.jdbc.Driver
    url: jdbc:mysql://localhost:3306/upin_charge?allowMultiQueries=true&useUnicode=true&characterEncoding=UTF-8
    # placeholders; replace with your own credentials
    username: username
    password: password
    druid:
      # connection pool settings
      initial-size: 10
      max-active: 100
      min-idle: 10
      max-wait: 60000
      # enable PSCache and set the PSCache size for each connection
      pool-prepared-statements: true
      max-pool-prepared-statement-per-connection-size: 20
      # interval between checks for idle connections that should be closed, in milliseconds
      time-between-eviction-runs-millis: 60000
      # minimum time a connection stays idle in the pool before it can be evicted, in milliseconds
      min-evictable-idle-time-millis: 300000
      validation-query: SELECT 1 FROM DUAL
      test-while-idle: true
      test-on-borrow: false
      test-on-return: false
      # DruidStatViewServlet settings
      stat-view-servlet:
        enabled: true
        url-pattern: /druid/*
        #login-username: admin
        #login-password: admin
      # filters used for monitoring statistics; without them the monitoring page cannot collect SQL statistics; 'wall' is the SQL firewall
      filters: stat,wall,log4j2
      filter:
        stat:
          log-slow-sql: true
          slow-sql-millis: 1000
          merge-sql: true
        wall:
          config:
            multi-statement-allow: true
        log4j2:
          enabled: true
          result-set-log-enabled: true
          statement-executable-sql-log-enable: true
          statement-create-after-log-enabled: false
          statement-close-after-log-enabled: false
          result-set-open-after-log-enabled: false
          result-set-close-after-log-enabled: false

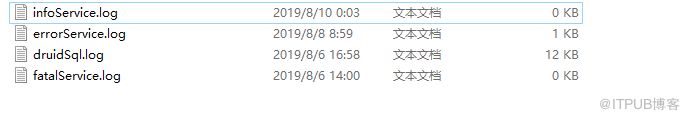

5. Viewing the log file

[DEBUG] [2019-08-06 16:58:20 570] druid.sql.Statement - {conn-10010, pstmt-20000} created. SELECT push_id,uuid,user_id,user_name,msg_type,msg_object,msg_title,msg_content,status,read_status,read_date,receive_date,receive_user_id,create_date FROM upin_msg.msg_push_info

WHERE status='01'

[DEBUG] [2019-08-06 16:58:20 577] druid.sql.Statement - {conn-10010, pstmt-20000} Parameters : []

[DEBUG] [2019-08-06 16:58:20 577] druid.sql.Statement - {conn-10010, pstmt-20000} Types : []

[DEBUG] [2019-08-06 16:58:20 595] druid.sql.Statement - {conn-10010, pstmt-20000} executed. SELECT push_id,uuid,user_id,user_name,msg_type,msg_object,msg_title,msg_content,status,read_status,read_date,receive_date,receive_user_id,create_date FROM upin_msg.msg_push_info

WHERE status='01'

[DEBUG] [2019-08-06 16:58:20 595] druid.sql.Statement - {conn-10010, pstmt-20000} executed. 19.4173 millis. SELECT push_id,uuid,user_id,user_name,msg_type,msg_object,msg_title,msg_content,status,read_status,read_date,receive_date,receive_user_id,create_date FROM upin_msg.msg_push_info

WHERE status='01'

[DEBUG] [2019-08-06 16:58:20 601] druid.sql.ResultSet - {conn-10010, pstmt-20000, rs-50000} Result: [2, null, 1, test, 01, test, null, test, 01, 00, null, null, null, 2019-08-05 13:41:12.0]

[DEBUG] [2019-08-06 16:58:20 603] druid.sql.ResultSet - {conn-10010, pstmt-20000, rs-50000} Result: [3, null, 1, test, 01, test, null, test, 01, 00, null, null, null, 2019-08-05 13:52:05.0]

[DEBUG] [2019-08-06 16:58:20 603] druid.sql.ResultSet - {conn-10010, pstmt-20000, rs-50000} Result: [4, null, 1, test, 01, test, null, test, 01, 00, null, null, null, 2019-08-05 13:53:50.0]

[DEBUG] [2019-08-06 16:58:20 603] druid.sql.ResultSet - {conn-10010, pstmt-20000, rs-50000} Result: [5, null, 1, test, 01, test, null, test, 01, 00, null, null, null, 2019-08-06 10:41:10.0]

[DEBUG] [2019-08-06 16:58:20 604] druid.sql.ResultSet - {conn-10010, pstmt-20000, rs-50000} Result: [6, null, 1, test, 01, test, null, test, 01, 00, null, null, null, 2019-08-06 13:49:58.0]

[DEBUG] [2019-08-06 16:58:20 605] druid.sql.Statement - {conn-10010, pstmt-20000} clearParameters.

[DEBUG] [2019-08-06 16:58:20 608] druid.sql.Statement - {conn-10010, pstmt-20000} Parameters : []

[DEBUG] [2019-08-06 16:58:20 608] druid.sql.Statement - {conn-10010, pstmt-20000} Types : []
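
When scanning druidSql.log for expensive statements, the lines of the form "executed. 19.4173 millis." carry the timing. The sketch below is one possible way to pull that number out with a regular expression written against the layout shown above. The class name DruidLogTiming, both method names, and the comparison used in isSlow are assumptions made for this illustration; they are not part of Druid, and the check is not necessarily how Druid's stat filter decides what counts as slow SQL.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class DruidLogTiming {
    // Matches the first line of a timed entry, for example:
    // [DEBUG] [2019-08-06 16:58:20 595] druid.sql.Statement - {conn-10010, pstmt-20000} executed. 19.4173 millis. SELECT ...
    private static final Pattern EXECUTED = Pattern.compile(
            "^\\[\\s*\\w+\\s*\\] \\[([^\\]]+)\\] druid\\.sql\\.Statement - "
            + "\\{([^}]+)\\} executed\\. ([0-9]+(?:\\.[0-9]+)?) millis\\.");

    // Returns the execution time in milliseconds, or empty if the line carries no timing.
    static Optional<Double> executionMillis(String line) {
        Matcher m = EXECUTED.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(Double.parseDouble(m.group(3)));
    }

    // Hypothetical helper: true when the line reports a time at or above the given threshold.
    static boolean isSlow(String line, double thresholdMillis) {
        return executionMillis(line).map(ms -> ms >= thresholdMillis).orElse(false);
    }

    public static void main(String[] args) {
        String timed = "[DEBUG] [2019-08-06 16:58:20 595] druid.sql.Statement - "
                + "{conn-10010, pstmt-20000} executed. 19.4173 millis. SELECT push_id FROM upin_msg.msg_push_info";
        System.out.println(executionMillis(timed));
        // 1000 mirrors the slow-sql-millis value from the Druid configuration.
        System.out.println(isSlow(timed, 1000));
    }
}
```

Lines such as "created.", "Parameters : []" or an "executed." entry without a duration do not match the pattern and yield an empty result, so the helper can be applied to every line of the file.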
