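This article shows how to find the minimum of a function with gradient descent in Python, using only NumPy. It covers the problem statement, the complete code, and the output of a sample run.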

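Problem: find the point (x, y) at which the distance between y1 = x*x - 2*x + 3 + 0.01*(a random value between -1 and 1) and y2 = 0 is smallest.

The range of x is (0, 3).

Do not use a machine learning framework; write the gradient descent update by hand. Hint: x = x - alp * d(y1 - y2)/dx, where alp is the learning rate.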
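The hint can be made concrete with a short derivation. This is a sketch that assumes the noise term (written here as \varepsilon, a symbol not used in the article) is treated as a constant that does not depend on x, which is the same simplification the code makes, and that writes \alpha for alp:

```latex
% y_2 = 0 and y_1 = (x - 1)^2 + 2 + \varepsilon >= 1.99 > 0,
% so the distance |y_1 - y_2| is simply y_1.
\begin{aligned}
y_1 - y_2 &= x^2 - 2x + 3 + \varepsilon, \qquad \varepsilon \in [-0.01,\ 0.01] \\
\frac{d}{dx}\,(y_1 - y_2) &= 2x - 2 \\
x_{k+1} &= x_k - \alpha\,(2x_k - 2) \\
2x - 2 = 0 \;&\Longrightarrow\; x = 1, \qquad y_1 \approx 1 - 2 + 3 = 2
\end{aligned}
```

Under these assumptions the iteration should approach x = 1, where y1 is about 2 (up to the noise).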

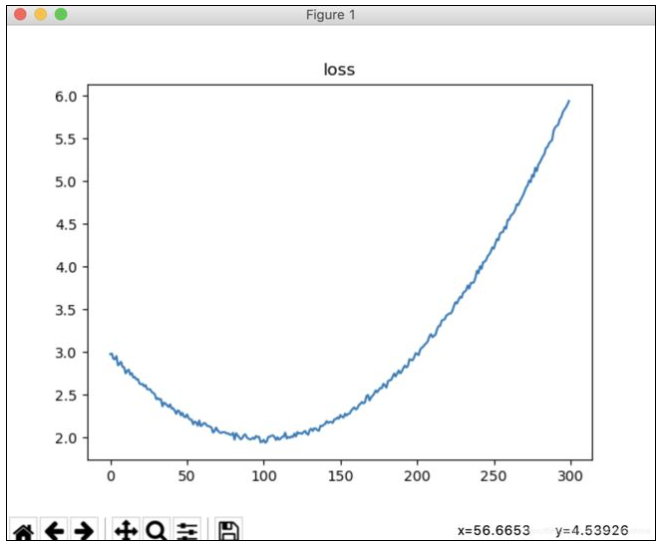

函数图像为:

Code:

import numpy as np
import matplotlib.pyplot as plt

def get_loss(x):
    # Noisy loss: x^2 - 2x + 3 plus uniform noise in [-0.01, 0.01)
    c, r = x.shape
    loss = (x**2 - 2*x + 3) + (0.01*(2*np.random.rand(c, r) - 1))
    return loss

x = np.arange(0, 3, 0.01).reshape(-1, 1)  # sample points in [0, 3) as a column vector

"""plt.title("loss")
plt.plot(get_loss(np.array(x)))
plt.show()"""

def get_grad(x):
    # Derivative of x^2 - 2x + 3; the random noise term is ignored
    grad = 2 * x - 2
    return grad
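A quick way to gain confidence in a hand-written gradient is to compare it with a finite-difference approximation. The following is a minimal sketch, not part of the original code: `clean_loss` and `numeric_grad` are hypothetical helpers introduced here, and the check uses the noise-free part of the loss so that the comparison is deterministic.

```python
import numpy as np

def clean_loss(x):
    # Noise-free part of the loss (hypothetical helper, not in the article)
    return x**2 - 2*x + 3

def get_grad(x):
    # Analytic gradient, same formula as in the article
    return 2 * x - 2

def numeric_grad(f, x, h=1e-5):
    # Central difference approximation of df/dx (hypothetical helper)
    return (f(x + h) - f(x - h)) / (2 * h)

xs = np.linspace(0, 3, 7)  # a few test points in the range of x
max_err = np.max(np.abs(numeric_grad(clean_loss, xs) - get_grad(xs)))
print("max abs error:", max_err)
```

For a quadratic the central difference is exact apart from floating-point rounding, so the reported error should be tiny.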

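np.random.seed(31415)
x_ = np.random.rand(1)*3  # random starting point in [0, 3)
x_s = []  # value of x after each update
alp = 0.001  # learning rate
print("X0",x_)

for e in range(2000):
    x_ = x_ - alp*(get_grad(x_))  # gradient descent update
    x_s.append(x_)
    if e % 100 == 0:
        print(e,"steps,x_ = ",x_)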

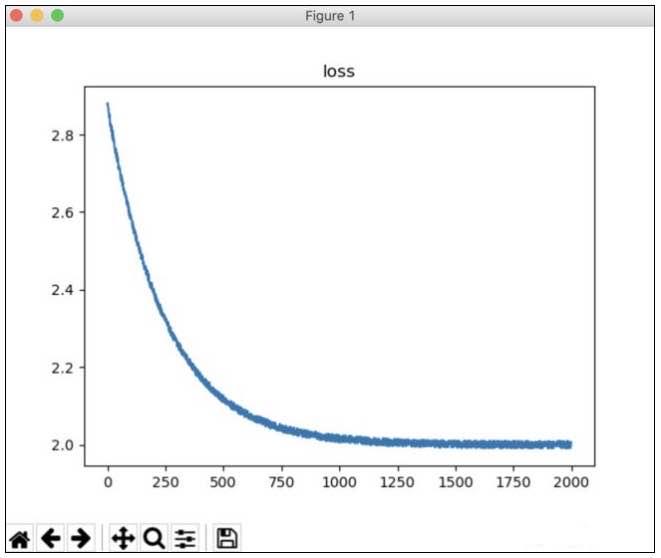

plt.title("loss")

plt.plot(get_loss(np.array(x_s)))

plt.show()运行结果:

X0 [1.93745582]

0 steps,x_ = [1.93558091]

100 steps,x_ = [1.76583547]

200 steps,x_ = [1.6268875]

300 steps,x_ = [1.51314929]

400 steps,x_ = [1.42004698]

500 steps,x_ = [1.34383651]

600 steps,x_ = [1.28145316]

700 steps,x_ = [1.23038821]

800 steps,x_ = [1.18858814]

900 steps,x_ = [1.15437199]

1000 steps,x_ = [1.12636379]

1100 steps,x_ = [1.1034372]

1200 steps,x_ = [1.08467026]

1300 steps,x_ = [1.06930826]

1400 steps,x_ = [1.05673344]

1500 steps,x_ = [1.04644011]

1600 steps,x_ = [1.03801434]

1700 steps,x_ = [1.03111727]

1800 steps,x_ = [1.02547157]

1900 steps,x_ = [1.02085018]
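The run ends near 1.02 rather than exactly at the minimum x = 1. Because the gradient is 2x - 2 = 2(x - 1), each update multiplies the distance to the minimum by (1 - 2*alp). With alp = 0.001 that factor is 0.998, so after 2000 steps roughly 1.8% of the initial distance remains. The sketch below checks this closed form against the iteration; the `descend` helper and the larger learning rate 0.1 are assumptions added for illustration, not part of the original code.

```python
def descend(x0, alp, steps):
    # Plain gradient descent on x^2 - 2x + 3, whose gradient is 2x - 2
    # (hypothetical helper, not in the article)
    x = x0
    for _ in range(steps):
        x = x - alp * (2 * x - 2)
    return x

x0 = 1.93745582  # starting point printed as X0 in the run above
alp = 0.001      # learning rate used in the article

# The "1900 steps" line is printed after 1901 updates (e = 0 .. 1900)
x_iter = descend(x0, alp, 1901)
x_closed = 1 + (1 - 2 * alp) ** 1901 * (x0 - 1)
print(x_iter, x_closed)

# A larger learning rate (an assumption for comparison) converges much faster
x_fast = descend(x0, 0.1, 100)
print(x_fast)
```

The two printed values for alp = 0.001 should agree with each other and with the last line of the output. To get closer to x = 1 with the original code, either run more iterations or raise alp, keeping it below 1 so that |1 - 2*alp| stays below 1 and the iteration still converges.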

That is all it takes to find a minimum with gradient descent in Python using NumPy. Thanks for reading; I hope it was useful.


Original link: https://www.py.cn/jishu/jichu/20426.html