这篇文章主要介绍“怎么使用Python代码批量做素描图”的相关知识,小编通过实际案例向大家展示操作过程,操作方法简单快捷,实用性强,希望这篇“怎么使用Python代码批量做素描图”文章能帮助大家解决问题。

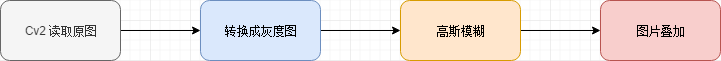

对于上面的流程来说是非常简单的,接下来我们来看看具体的实现。

安装所需要的库:

pip install opencv-python

导入所需要的库:

import cv2编写主体代码也是非常的简单的,代码如下:

import cv2

SRC = "images/image_1.jpg"

image_rgb = cv2.imread(SRC)

image_gray = cv2.cvtColor(image_rgb, cv2.COLOR_BGR2GRAY)

image_blur = cv2.GaussianBlur(image_gray, ksize=(21, 21), sigmaX=0, sigmaY=0)

image_blend = cv2.divide(image_gray, image_blur, scale=255)

cv2.imwrite("result.jpg", image_blend)那上面的代码其实并不难,那接下来为了让小伙伴们能更好的理解,我编写了如下代码:

"""

project = "Code", file_name = "study.py", author = "AI悦创"

time = "2020/5/19 8:35", product_name = PyCharm, 公众号:AI悦创

code is far away from bugs with the god animal protecting

I love animals. They taste delicious.

"""

import cv2

# 原图路径

SRC = "images/image_1.jpg"

# 读取图片

image_rgb = cv2.imread(SRC)

# cv2.imshow("rgb", image_rgb) # 原图

# cv2.waitKey(0)

# exit()

image_gray = cv2.cvtColor(image_rgb, cv2.COLOR_BGR2GRAY)

# cv2.imshow("gray", image_gray) # 灰度图

# cv2.waitKey(0)

# exit()

image_bulr = cv2.GaussianBlur(image_gray, ksize=(21, 21), sigmaX=0, sigmaY=0)

cv2.imshow("image_blur", image_bulr) # 高斯虚化

cv2.waitKey(0)

exit()

# divide: 提取两张差别较大的线条和内容

image_blend = cv2.divide(image_gray, image_bulr, scale=255)

# cv2.imshow("image_blend", image_blend) # 素描

cv2.waitKey(0)

# cv2.imwrite("result1.jpg", image_blend)那上面的代码,我们是在原有的基础上添加了,一些实时展示的代码,来方便同学们理解。

其实有同学会问,我用软件不就可以直接生成素描图吗?

那程序的好处是什么?

程序的好处就是如果你的图片量多的话,这个时候使用程序批量生成也是非常方便高效的。

这样我们的就完成,把小姐姐的图片变成了素描,skr~。

不过,这还不是我们的海量图片,为了达到海量这个词呢,我写了一个百度图片爬虫,不过本文不是教如何写爬虫代码的,这里我就直接放出爬虫代码,符和软件工程规范:

# Crawler.Spider.py

import re

import os

import time

import collections

from collections import namedtuple

import requests

from concurrent import futures

from tqdm import tqdm

from enum import Enum

BASE_URL = "https://image.baidu.com/search/acjson?tn=resultjson_com&ipn=rj&ct=201326592&is=&fp=result&queryWord={keyword}&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=-1&z=&ic=&hd=&latest=©right=&word={keyword}&s=&se=&tab=&width=&height=&face=0&istype=2&qc=&nc=1&fr=&expermode=&force=&pn={page}&rn=30&gsm=&1568638554041="

HEADERS = {

"Referer": "http://image.baidu.com/search/index?tn=baiduimage&ipn=r&ct=201326592&cl=2&lm=-1&st=-1&fr=&sf=1&fmq=1567133149621_R&pv=&ic=0&nc=1&z=0&hd=0&latest=0©right=0&se=1&showtab=0&fb=0&width=&height=&face=0&istype=2&ie=utf-8&sid=&word=%E5%A3%81%E7%BA%B8",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36",

"X-Requested-With": "XMLHttpRequest", }

class BaiDuSpider:

def __init__(self, max_works, images_type):

self.max_works = max_works

self.HTTPStatus = Enum("Status", ["ok", "not_found", "error"])

self.result = namedtuple("Result", "status data")

self.session = requests.session()

self.img_type = images_type

self.img_num = None

self.headers = HEADERS

self.index = 1

def get_img(self, img_url):

res = self.session.get(img_url)

if res.status_code != 200:

res.raise_for_status()

return res.content

def download_one(self, img_url, verbose):

try:

image = self.get_img(img_url)

except requests.exceptions.HTTPError as e:

res = e.response

if res.status_code == 404:

status = self.HTTPStatus.not_found

msg = "not_found"

else:

raise

else:

self.save_img(self.img_type, image)

status = self.HTTPStatus.ok

msg = "ok"

if verbose:

print(img_url, msg)

return self.result(status, msg)

def get_img_url(self):

urls = [BASE_URL.format(keyword=self.img_type, page=page) for page in self.img_num]

for url in urls:

res = self.session.get(url, headers=self.headers)

if res.status_code == 200:

img_list = re.findall(r""thumbURL":"(.*?)"", res.text)

# 返回出图片地址,配合其他函数运行

yield {img_url for img_url in img_list}

elif res.status_code == 404:

print("-----访问失败,找不到资源-----")

yield None

elif res.status_code == 403:

print("*****访问失败,服务器拒绝访问*****")

yield None

else:

print(">>> 网络连接失败 <<<")

yield None

def download_many(self, img_url_set, verbose=False):

if img_url_set:

counter = collections.Counter()

with futures.ThreadPoolExecutor(self.max_works) as executor:

to_do_map = {}

for img in img_url_set:

future = executor.submit(self.download_one, img, verbose)

to_do_map[future] = img

done_iter = futures.as_completed(to_do_map)

if not verbose:

done_iter = tqdm(done_iter, total=len(img_url_set))

for future in done_iter:

try:

res = future.result()

except requests.exceptions.HTTPError as e:

error_msg = "HTTP error {res.status_code} - {res.reason}"

error_msg = error_msg.format(res=e.response)

except requests.exceptions.ConnectionError:

error_msg = "ConnectionError error"

else:

error_msg = ""

status = res.status

if error_msg:

status = self.HTTPStatus.error

counter[status] += 1

if verbose and error_msg:

img = to_do_map[future]

print("***Error for {} : {}".format(img, error_msg))

return counter

else:

pass

def save_img(self, img_type, image):

with open("{}/{}.jpg".format(img_type, self.index), "wb") as f:

f.write(image)

self.index += 1

def what_want2download(self):

# self.img_type = input("请输入你想下载的图片类型,什么都可以哦~ >>> ")

try:

os.mkdir(self.img_type)

except FileExistsError:

pass

img_num = input("请输入要下载的数量(1位数代表30张,列如输入1就是下载30张,2就是60张):>>> ")

while True:

if img_num.isdigit():

img_num = int(img_num) * 30

self.img_num = range(30, img_num + 1, 30)

break

else:

img_num = input("输入错误,请重新输入要下载的数量>>> ")

def main(self):

# 获取图片类型和下载的数量

total_counter = {}

self.what_want2download()

for img_url_set in self.get_img_url():

if img_url_set:

counter = self.download_many(img_url_set, False)

for key in counter:

if key in total_counter:

total_counter[key] += counter[key]

else:

total_counter[key] = counter[key]

else:

# 可以为其添加报错功能

pass

time.sleep(.5)

return total_counter

if __name__ == "__main__":

max_works = 20

bd_spider = BaiDuSpider(max_works)

print(bd_spider.main())# Sketch_the_generated_code.py

import cv2

def drawing(src, id=None):

image_rgb = cv2.imread(src)

image_gray = cv2.cvtColor(image_rgb, cv2.COLOR_BGR2GRAY)

image_blur = cv2.GaussianBlur(image_gray, ksize=(21, 21), sigmaX=0, sigmaY=0)

image_blend = cv2.divide(image_gray, image_blur, scale=255)

cv2.imwrite(f"Drawing_images/result-{id}.jpg", image_blend)# image_list.image_list_path.py

import os

from natsort import natsorted

IMAGES_LIST = []

def image_list(path):

global IMAGES_LIST

for root, dirs, files in os.walk(path):

# 按文件名排序

# files.sort()

files = natsorted(files)

# 遍历所有文件

for file in files:

# 如果后缀名为 .jpg

if os.path.splitext(file)[1] == ".jpg":

# 拼接成完整路径

# print(file)

filePath = os.path.join(root, file)

print(filePath)

# 添加到数组

IMAGES_LIST.append(filePath)

return IMAGES_LIST# main.py

import time

from Sketch_the_generated_code import drawing

from Crawler.Spider import BaiDuSpider

from image_list.image_list_path import image_list

import os

MAX_WORDS = 20

if __name__ == "__main__":

# now_path = os.getcwd()

# img_type = "ai"

img_type = input("请输入你想下载的图片类型,什么都可以哦~ >>> ")

bd_spider = BaiDuSpider(MAX_WORDS, img_type)

print(bd_spider.main())

time.sleep(10) # 这里设置睡眠时间,让有足够的时间去添加,这样读取就,去掉或者太短会报错,所以

for index, path in enumerate(image_list(img_type)):

drawing(src = path, id = index)所以最终的目录结构如下所示:

C:.

│ main.py

│ Sketch_the_generated_code.py

│

├─Crawler

│ │ Spider.py

│ │

│ └─__pycache__

│ Spider.cpython-37.pyc

│

├─drawing

│ │ result.jpg

│ │ result1.jpg

│ │ Sketch_the_generated_code.py

│ │ study.py

│ │

│ ├─images

│ │ image_1.jpg

│ │

│ └─__pycache__

│ Sketch_the_generated_code.cpython-37.pyc

│

├─Drawing_images

├─image_list

│ │ image_list_path.py

│ │

│ └─__pycache__

│ image_list_path.cpython-37.pyc

│

└─__pycache__

Sketch_the_generated_code.cpython-37.pyc关于“怎么使用Python代码批量做素描图”的内容就介绍到这里了,感谢大家的阅读。如果想了解更多行业相关的知识,可以关注亿速云行业资讯频道,小编每天都会为大家更新不同的知识点。

亿速云「云服务器」,即开即用、新一代英特尔至强铂金CPU、三副本存储NVMe SSD云盘,价格低至29元/月。点击查看>>

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。