今天小编给大家分享一下spark编程python代码分析的相关知识点,内容详细,逻辑清晰,相信大部分人都还太了解这方面的知识,所以分享这篇文章给大家参考一下,希望大家阅读完这篇文章后有所收获,下面我们一起来了解一下吧。

ValueError: Cannot run multiple SparkContexts at once; existing SparkContext(app=PySparkShell, master=local[])

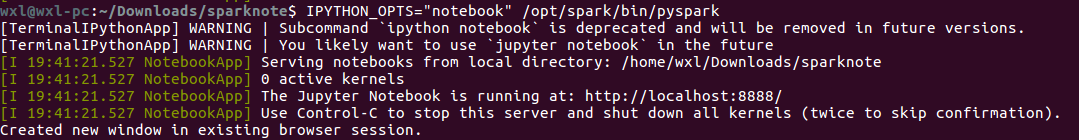

1.1.启动

IPYTHON_OPTS="notebook" /opt/spark/bin/pyspark

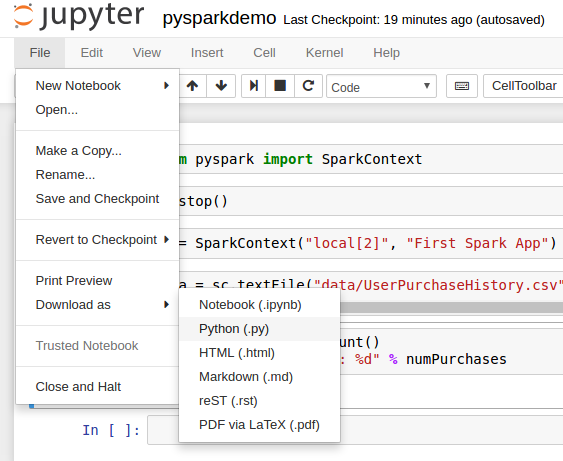

下载应用,将应用下载为.py文件(默认notebook后缀是.ipynb)

wxl@wxl-pc:/opt/spark/bin$ spark-submit /bin/spark-submit /home/wxl/Downloads/pysparkdemo.py

ValueError: Cannot run multiple SparkContexts at once; existing SparkContext(app=PySparkShell, master=local[*])

d*

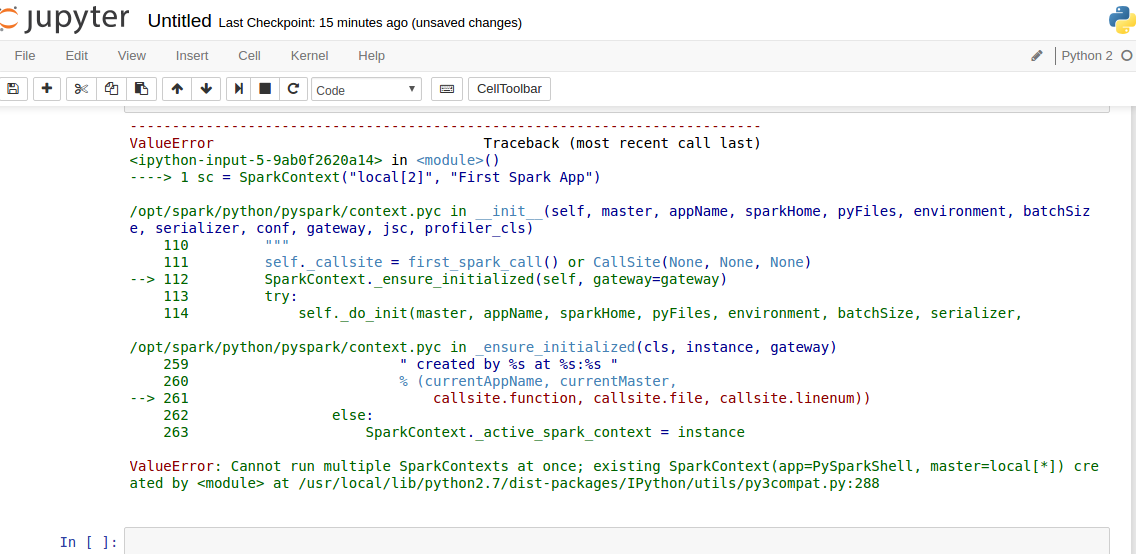

3.1.错误

ValueError: Cannot run multiple SparkContexts at once; existing SparkContext(app=PySparkShell, master=local[*])

d*

ValueError: Cannot run multiple SparkContexts at once; existing SparkContext(app=PySparkShell, master=local[*]) created by <module> at /usr/local/lib/python2.7/dist-packages/IPython/utils/py3compat.py:288

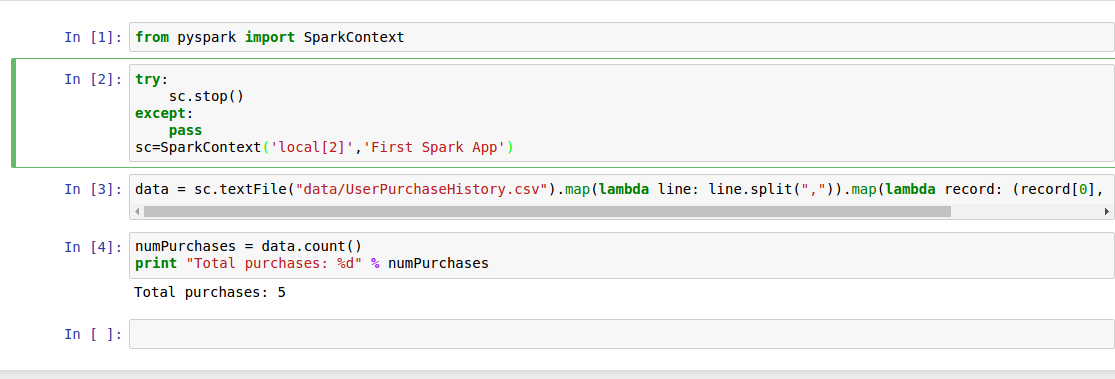

3.2.解决,成功运行

在from之后添加

try:

sc.stop()

except:

pass

sc=SparkContext('local[2]','First Spark App')

贴上错误解决方法来源StackOverFlow

pysparkdemo.ipynb

{

"cells": [

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"from pyspark import SparkContext"

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"try:\n",

" sc.stop()\n",

"except:\n",

" pass\n",

"sc=SparkContext('local[2]','First Spark App')"

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"data = sc.textFile(\"data/UserPurchaseHistory.csv\").map(lambda line: line.split(\",\")).map(lambda record: (record[0], record[1], record[2]))"

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {

"collapsed": false,

"scrolled": true

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Total purchases: 5\n"

]

}

],

"source": [

"numPurchases = data.count()\n",

"print \"Total purchases: %d\" % numPurchases"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 2",

"language": "python",

"name": "python2"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 2

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython2",

"version": "2.7.12"

}

},

"nbformat": 4,

"nbformat_minor": 0

}pysparkdemo.py

# coding: utf-8

# In[1]:

from pyspark import SparkContext

# In[2]:

try:

sc.stop()

except:

pass

sc=SparkContext('local[2]','First Spark App')

# In[3]:

data = sc.textFile("data/UserPurchaseHistory.csv").map(lambda line: line.split(",")).map(lambda record: (record[0], record[1], record[2]))

# In[4]:

numPurchases = data.count()

print "Total purchases: %d" % numPurchases

# In[ ]:以上就是“spark编程python代码分析”这篇文章的所有内容,感谢各位的阅读!相信大家阅读完这篇文章都有很大的收获,小编每天都会为大家更新不同的知识,如果还想学习更多的知识,请关注亿速云行业资讯频道。

亿速云「云服务器」,即开即用、新一代英特尔至强铂金CPU、三副本存储NVMe SSD云盘,价格低至29元/月。点击查看>>

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。