这篇“Spring Boot中怎么使用@KafkaListener并发批量接收消息”文章的知识点大部分人都不太理解,所以小编给大家总结了以下内容,内容详细,步骤清晰,具有一定的借鉴价值,希望大家阅读完这篇文章能有所收获,下面我们一起来看看这篇“Spring Boot中怎么使用@KafkaListener并发批量接收消息”文章吧。

###第一步,并发消费###

先看代码,重点是这我们使用的是ConcurrentKafkaListenerContainerFactory并且设置了factory.setConcurrency(4); (我的topic有4个分区,为了加快消费将并发设置为4,也就是有4个KafkaMessageListenerContainer)

@Bean

KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {

ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();

factory.setConsumerFactory(consumerFactory());

factory.setConcurrency(4);

factory.setBatchListener(true);

factory.getContainerProperties().setPollTimeout(3000);

return factory;

}注意也可以直接在application.properties中添加spring.kafka.listener.concurrency=3,然后使用@KafkaListener并发消费。

###第二步,批量消费###

然后是批量消费。重点是factory.setBatchListener(true);

以及 propsMap.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 50);

一个设启用批量消费,一个设置批量消费每次最多消费多少条消息记录。

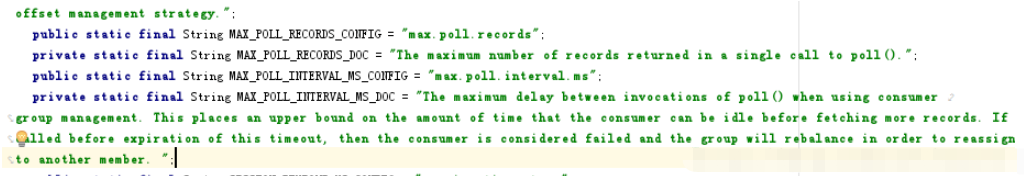

重点说明一下,我们设置的ConsumerConfig.MAX_POLL_RECORDS_CONFIG是50,并不是说如果没有达到50条消息,我们就一直等待。官方的解释是"The maximum number of records returned in a single call to poll().", 也就是50表示的是一次poll最多返回的记录数。

从启动日志中可以看到还有个 max.poll.interval.ms = 300000, 也就说每间隔max.poll.interval.ms我们就调用一次poll。每次poll最多返回50条记录。

max.poll.interval.ms官方解释是"The maximum delay between invocations of poll() when using consumer group management. This places an upper bound on the amount of time that the consumer can be idle before fetching more records. If poll() is not called before expiration of this timeout, then the consumer is considered failed and the group will rebalance in order to reassign the partitions to another member. ";

@Bean

KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {

ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();

factory.setConsumerFactory(consumerFactory());

factory.setConcurrency(4);

factory.setBatchListener(true);

factory.getContainerProperties().setPollTimeout(3000);

return factory;

}

@Bean

public Map<String, Object> consumerConfigs() {

Map<String, Object> propsMap = new HashMap<>();

propsMap.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, propsConfig.getBroker());

propsMap.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, propsConfig.getEnableAutoCommit());

propsMap.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "100");

propsMap.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "15000");

propsMap.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

propsMap.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

propsMap.put(ConsumerConfig.GROUP_ID_CONFIG, propsConfig.getGroupId());

propsMap.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, propsConfig.getAutoOffsetReset());

propsMap.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 50);

return propsMap;

}启动日志截图

关于max.poll.records和max.poll.interval.ms官方解释截图:

###第三步,分区消费###

对于只有一个分区的topic,不需要分区消费,因为没有意义。下面的例子是针对有2个分区的情况(我的完整代码中有4个listenPartitionX方法,我的topic设置了4个分区),读者可以根据自己的情况进行调整。

public class MyListener {

private static final String TPOIC = "topic02";

@KafkaListener(id = "id0", topicPartitions = { @TopicPartition(topic = TPOIC, partitions = { "0" }) })

public void listenPartition0(List<ConsumerRecord<?, ?>> records) {

log.info("Id0 Listener, Thread ID: " + Thread.currentThread().getId());

log.info("Id0 records size " + records.size());

for (ConsumerRecord<?, ?> record : records) {

Optional<?> kafkaMessage = Optional.ofNullable(record.value());

log.info("Received: " + record);

if (kafkaMessage.isPresent()) {

Object message = record.value();

String topic = record.topic();

log.info("p0 Received message={}", message);

}

}

}

@KafkaListener(id = "id1", topicPartitions = { @TopicPartition(topic = TPOIC, partitions = { "1" }) })

public void listenPartition1(List<ConsumerRecord<?, ?>> records) {

log.info("Id1 Listener, Thread ID: " + Thread.currentThread().getId());

log.info("Id1 records size " + records.size());

for (ConsumerRecord<?, ?> record : records) {

Optional<?> kafkaMessage = Optional.ofNullable(record.value());

log.info("Received: " + record);

if (kafkaMessage.isPresent()) {

Object message = record.value();

String topic = record.topic();

log.info("p1 Received message={}", message);

}

}

}以上就是关于“Spring Boot中怎么使用@KafkaListener并发批量接收消息”这篇文章的内容,相信大家都有了一定的了解,希望小编分享的内容对大家有帮助,若想了解更多相关的知识内容,请关注亿速云行业资讯频道。

亿速云「云服务器」,即开即用、新一代英特尔至强铂金CPU、三副本存储NVMe SSD云盘,价格低至29元/月。点击查看>>

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。