This article walks through deploying Elasticsearch with separated node roles: dedicated master, ingest and data nodes.

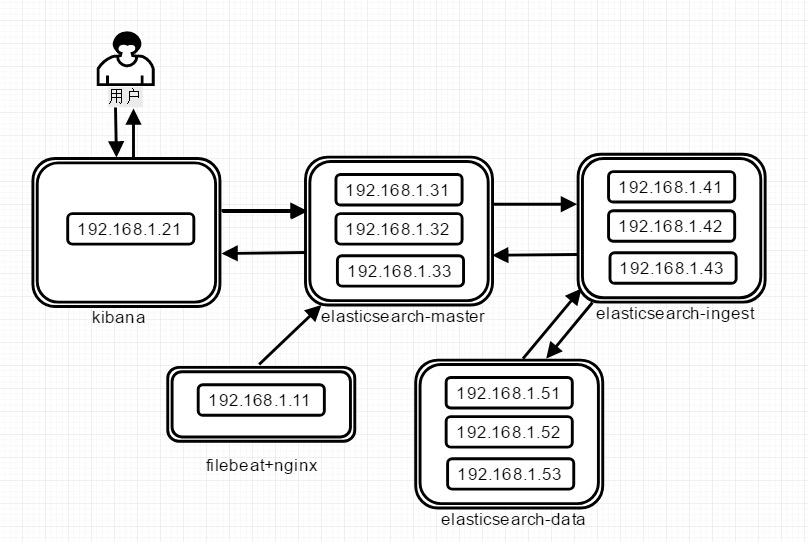

The existing ES cluster has three nodes. The master, ingest and data roles all run together on the same three servers.

Below, the roles are separated and each role gets its own three nodes. This maximizes performance while keeping every role highly available.

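▷ Elasticsearch master nodes: handle cluster coordination and scheduling; ordinary servers are enough

▷ Elasticsearch ingest nodes: preprocess incoming data; deploy them on high-performance servers

▷ Elasticsearch data nodes: persist the data; deploy them on servers with good storage performance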

Server configuration

Note: this architecture extends the earlier article "EFK Tutorial - Quick Start Guide" (《EFK教程 - 快速入门指南》), so complete the deployment described there first.

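1️⃣ Deploy 3 data nodes and join them to the existing cluster

2️⃣ Deploy 3 ingest nodes and join them to the existing cluster

3️⃣ Migrate the existing ES indices to the data nodes

4️⃣ Convert the original ES nodes into master nodes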

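The basic Elasticsearch architecture was built in the previous article. Now add three storage (data) nodes to the cluster, with their master and ingest roles disabled.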

elasticsearch-data installation: run the same steps on all 3 servers

tar -zxvf elasticsearch-7.3.2-linux-x86_64.tar.gz

mv elasticsearch-7.3.2 /opt/elasticsearch

useradd elasticsearch -d /opt/elasticsearch -s /sbin/nologin

mkdir -p /opt/logs/elasticsearch

chown elasticsearch:elasticsearch /opt/elasticsearch -R

chown elasticsearch:elasticsearch /opt/logs/elasticsearch -R

# The data disk must be writable by the elasticsearch user

chown elasticsearch:elasticsearch /data/SAS -R

# The number of VMAs (virtual memory areas) a process may own must be at least 262144; otherwise Elasticsearch fails with: max virtual memory areas vm.max_map_count [65535] is too low, increase to at least [262144]

echo "vm.max_map_count = 655350" >> /etc/sysctl.conf

sysctl -p

elasticsearch-data configuration
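The three data-node files below are identical except for node.name and network.host. The ingest and master files later on differ only in the three role flags and path.data. If you would rather generate the files than edit them by hand, one option is a shell function that prints one file from an IP and the three flags. This is a minimal sketch. The function name render_es_yml is hypothetical, and every other value is copied from the configs in this article, so adjust them if your cluster differs.

```bash
# Hypothetical helper (not part of Elasticsearch): print an elasticsearch.yml for one node.
# Arguments: $1 = IP (also used as node.name), $2 = node.master, $3 = node.ingest,
#            $4 = node.data, $5 = path.data (optional; only the data nodes set it here).
render_es_yml() {
  cat <<EOF
cluster.name: my-application
node.name: $1
EOF
  # Data nodes keep their shards on a dedicated disk; the other roles use the default path.
  if [ -n "${5:-}" ]; then
    echo "path.data: $5"
  fi
  cat <<EOF
path.logs: /opt/logs/elasticsearch
network.host: $1
discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]
cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]
http.cors.enabled: true
http.cors.allow-origin: "*"
node.master: $2
node.ingest: $3
node.data: $4
EOF
}

# Example: the config for data node 192.168.1.51 (review it before writing it to disk)
# render_es_yml 192.168.1.51 false false true /data/SAS > /opt/elasticsearch/config/elasticsearch.yml
```

Redirecting the output into /opt/elasticsearch/config/elasticsearch.yml overwrites the existing file. Compare the generated text with the listings first.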

▷ 192.168.1.51 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.51

# Data disk location; separate multiple disks with ","

path.data: /data/SAS

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.51

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Disable the master role

node.master: false

# Disable the ingest role

node.ingest: false

# Enable the data role

node.data: true

▷ 192.168.1.52 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.52

# Data disk location; separate multiple disks with ","

path.data: /data/SAS

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.52

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Disable the master role

node.master: false

# Disable the ingest role

node.ingest: false

# Enable the data role

node.data: true

▷ 192.168.1.53 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.53

# Data disk location; separate multiple disks with ","

path.data: /data/SAS

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.53

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Disable the master role

node.master: false

# Disable the ingest role

node.ingest: false

# Enable the data role

node.data: true

elasticsearch-data startup

sudo -u elasticsearch /opt/elasticsearch/bin/elasticsearch

elasticsearch cluster status

curl "http://192.168.1.31:9200/_cat/health?v"

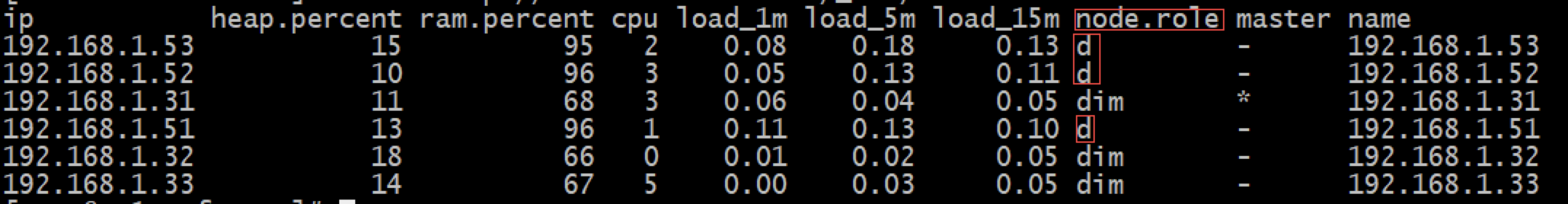
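The health check above prints a header row followed by one data row. To read the status from a script, for example to wait for green before the next step, one option is an awk filter that picks a column by its header name. This is a sketch. cat_column is a hypothetical helper, not part of Elasticsearch or curl.

```bash
# Hypothetical helper: print the values of one column of a `_cat/...?v` table.
# stdin: the table including the header row that `?v` adds; $1: the header name.
cat_column() {
  awk -v col="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == col) idx = i; next }  # locate the column
    idx > 0 { print $idx }                                              # print it for each row
  '
}

# Example (assumes 192.168.1.31 is reachable): print the cluster status column
# curl -s "http://192.168.1.31:9200/_cat/health?v" | cat_column status
```

If a script only needs the status, the h parameter also works: curl -s "http://192.168.1.31:9200/_cat/health?h=status" returns that single column directly. This article later uses the same parameter with _cat/shards?h=n.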

elasticsearch-data status

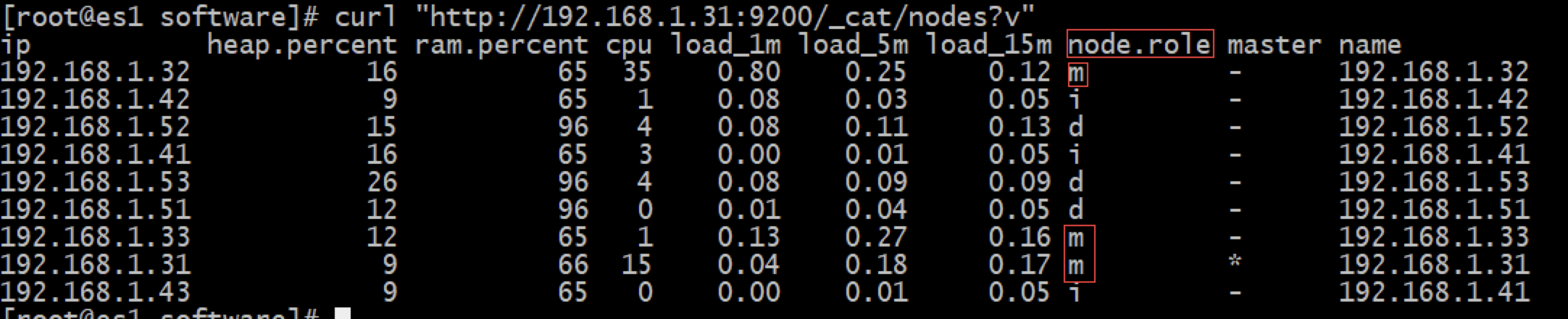

curl "http://192.168.1.31:9200/_cat/nodes?v"
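Once the data nodes have joined, the three new nodes should report the role d. The three original nodes should still report mid. As a sketch for tallying this instead of reading the table by eye, assuming 192.168.1.31 is reachable, use the h parameter to restrict _cat/nodes to the node.role column. A short pipeline then counts each value. The name role_counts is hypothetical.

```bash
# Hypothetical helper: count how many nodes report each node.role string.
# stdin: one role string per line, as returned by `_cat/nodes?h=node.role`.
role_counts() {
  # sort groups identical roles, uniq -c counts them, awk prints "role: count"
  sort | uniq -c | awk '{ print $2 ": " $1 }'
}

# Example (assumes 192.168.1.31 is reachable):
# curl -s "http://192.168.1.31:9200/_cat/nodes?h=node.role" | role_counts
```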

elasticsearch-data field descriptions

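status: green # cluster health status

node.total: 6 # 6 nodes make up the cluster

node.data: 6 # 6 nodes store data

node.role: d # data role only

node.role: i # ingest role only

node.role: m # master role only

node.role: mid # has the master, ingest and data roles

Next, add three ingest nodes to the cluster, with their master and data roles disabled.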

elasticsearch-ingest installation: run the same steps on all 3 servers

tar -zxvf elasticsearch-7.3.2-linux-x86_64.tar.gz

mv elasticsearch-7.3.2 /opt/elasticsearch

useradd elasticsearch -d /opt/elasticsearch -s /sbin/nologin

mkdir -p /opt/logs/elasticsearch

chown elasticsearch:elasticsearch /opt/elasticsearch -R

chown elasticsearch:elasticsearch /opt/logs/elasticsearch -R

# The number of VMAs (virtual memory areas) a process may own must be at least 262144; otherwise Elasticsearch fails with: max virtual memory areas vm.max_map_count [65535] is too low, increase to at least [262144]

echo "vm.max_map_count = 655350" >> /etc/sysctl.conf

sysctl -p

elasticsearch-ingest configuration

▷ 192.168.1.41 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.41

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.41

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Disable the master role

node.master: false

# Enable the ingest role

node.ingest: true

# Disable the data role

node.data: false

▷ 192.168.1.42 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.42

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.42

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Disable the master role

node.master: false

# Enable the ingest role

node.ingest: true

# Disable the data role

node.data: false

▷ 192.168.1.43 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.43

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.43

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Disable the master role

node.master: false

# Enable the ingest role

node.ingest: true

# Disable the data role

node.data: false

elasticsearch-ingest startup

sudo -u elasticsearch /opt/elasticsearch/bin/elasticsearch

elasticsearch cluster status

curl "http://192.168.1.31:9200/_cat/health?v"

elasticsearch-ingest status

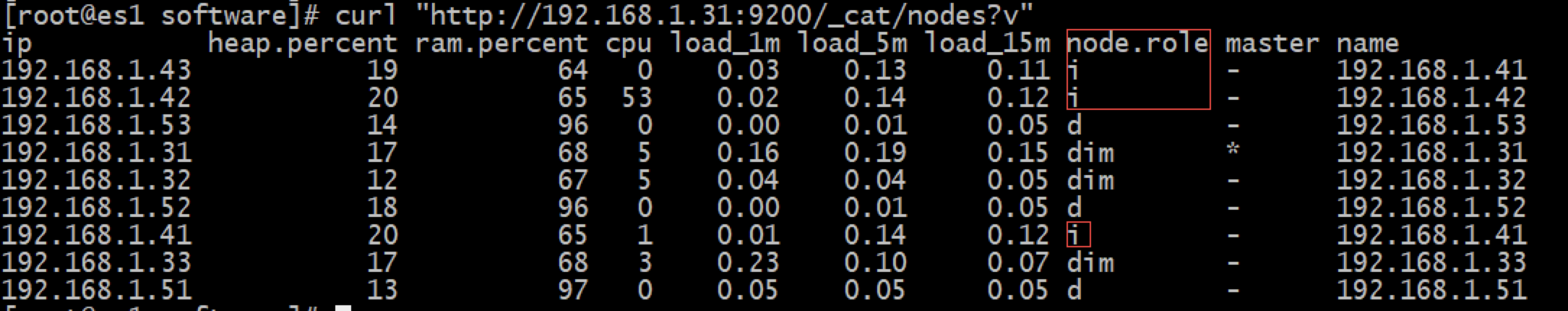

curl "http://192.168.1.31:9200/_cat/nodes?v"
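To check the ingest nodes specifically, a sketch can compare each expected IP against the node.role column of _cat/nodes?h=ip,node.role. The helper expect_role is hypothetical. The expected string i comes from the field descriptions in this article. If your _cat/nodes output shows additional role letters, pass that full string instead.

```bash
# Hypothetical helper: check that every listed IP reports exactly the expected role string.
# stdin: output of `_cat/nodes?h=ip,node.role`; $1: expected role; remaining args: IPs.
# Returns non-zero if any IP has a different role or is missing from the cluster.
expect_role() {
  want=$1; shift
  nodes=$(cat)
  rc=0
  for ip in "$@"; do
    got=$(printf '%s\n' "$nodes" | awk -v ip="$ip" '$1 == ip { print $2 }')
    if [ "$got" != "$want" ]; then
      echo "$ip: expected role '$want', got '${got:-<missing>}'" >&2
      rc=1
    fi
  done
  return $rc
}

# Example (assumes 192.168.1.31 is reachable):
# curl -s "http://192.168.1.31:9200/_cat/nodes?h=ip,node.role" | expect_role i 192.168.1.41 192.168.1.42 192.168.1.43
```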

elasticsearch-ingest field descriptions

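status: green # cluster health status

node.total: 9 # 9 nodes make up the cluster

node.data: 6 # 6 nodes store data

node.role: d # data role only

node.role: i # ingest role only

node.role: m # master role only

node.role: mid # has the master, ingest and data roles

First, convert the 3 ES nodes deployed in "EFK Tutorial - Quick Start Guide" (192.168.1.31, 192.168.1.32, 192.168.1.33) into master-only nodes. Before doing so, their index data has to be migrated to the data nodes added in this article.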

1️⃣ Migrate the indices (this step is mandatory): move the existing indices onto the data nodes

curl -X PUT "192.168.1.31:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d'

{

"index.routing.allocation.include._ip": "192.168.1.51,192.168.1.52,192.168.1.53"

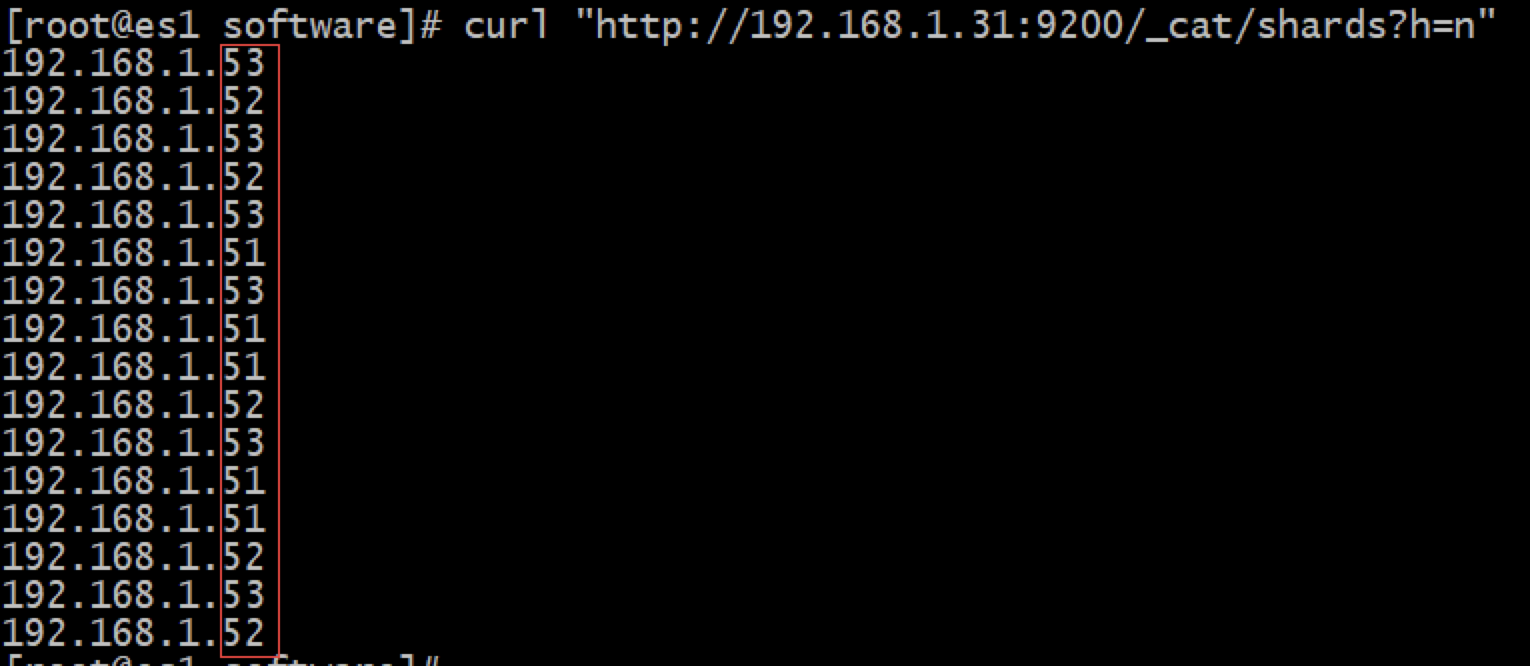

}'2️⃣ 确认当前索引存储位置:确认所有索引不在192.168.1.31、192.168.1.32、192.168.1.33节点上

curl "http://192.168.1.31:9200/_cat/shards?h=n"
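The output of _cat/shards?h=n lists one node name per shard. In this article node.name is set to each server's IP, so the check in step 2️⃣ can be scripted by searching that output for the three old IPs. In this minimal sketch, the hypothetical helper assert_no_shards_on treats any line that still mentions one of those nodes as "not finished". It does not flag an empty node column (an unassigned shard), so also confirm that cluster health is green.

```bash
# Hypothetical helper: fail if any shard line still mentions one of the given nodes.
# stdin: output of `_cat/shards?h=n` (node names; here node.name equals the IP).
assert_no_shards_on() {
  shards=$(cat)
  rc=0
  for node in "$@"; do
    # -F: fixed string (dots are literal); -w: whole word, so .31 does not match .310
    if printf '%s\n' "$shards" | grep -Fwq -- "$node"; then
      echo "shards still on $node" >&2
      rc=1
    fi
  done
  return $rc
}

# Example (assumes 192.168.1.31 is reachable):
# curl -s "http://192.168.1.31:9200/_cat/shards?h=n" | assert_no_shards_on 192.168.1.31 192.168.1.32 192.168.1.33
```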

elasticsearch-master configuration

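Note: change the configuration and restart the process on one node at a time, and only move on to the next node after the previous one is back up successfully. How to restart: in "EFK Tutorial - Quick Start Guide", Elasticsearch was started in the foreground, so press Ctrl-C to stop it and then start it again with: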

sudo -u elasticsearch /opt/elasticsearch/bin/elasticsearch

▷ 192.168.1.31 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.31

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.31

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Enable the master role

node.master: true

# Disable the ingest role

node.ingest: false

# Disable the data role

node.data: false

▷ 192.168.1.32 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.32

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.32

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Enable the master role

node.master: true

# Disable the ingest role

node.ingest: false

# Disable the data role

node.data: false

▷ 192.168.1.33 /opt/elasticsearch/config/elasticsearch.yml

cluster.name: my-application

node.name: 192.168.1.33

path.logs: /opt/logs/elasticsearch

network.host: 192.168.1.33

discovery.seed_hosts: ["192.168.1.31","192.168.1.32","192.168.1.33"]

cluster.initial_master_nodes: ["192.168.1.31","192.168.1.32","192.168.1.33"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# Enable the master role

node.master: true

# Disable the ingest role

node.ingest: false

# Disable the data role

node.data: false

elasticsearch cluster status

curl "http://192.168.1.31:9200/_cat/health?v"

elasticsearch-master status

curl "http://192.168.1.31:9200/_cat/nodes?v"
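The note before the master configs asks for a one-node-at-a-time restart, where each node must be back before the next one is touched. Below is a sketch of a wait loop for that step. It assumes another master (here 192.168.1.32) is queried while 192.168.1.31 restarts. The loop polls _cat/nodes?h=name,node.role until the restarted node is listed with role m. wait_for_node_role and the POLL_INTERVAL variable (seconds between polls, default 5) are hypothetical.

```bash
# Hypothetical helper: poll until <node.name> is listed with <role>, or give up.
# Usage: wait_for_node_role <node.name> <role> <max_tries> <command printing _cat/nodes?h=name,node.role>
wait_for_node_role() {
  name=$1 role=$2 tries=$3
  shift 3
  i=0
  while [ "$i" -lt "$tries" ]; do
    # "$@" is the fetch command; awk succeeds only if the name/role pair is present
    if "$@" 2>/dev/null | awk -v n="$name" -v r="$role" '$1 == n && $2 == r { found = 1 } END { exit found ? 0 : 1 }'; then
      echo "$name is back with role $role"
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-5}"
  done
  echo "$name did not come back with role $role" >&2
  return 1
}

# Example: after restarting 192.168.1.31, ask 192.168.1.32 until .31 is listed as "m"
# wait_for_node_role 192.168.1.31 m 60 curl -s "http://192.168.1.32:9200/_cat/nodes?h=name,node.role"
```

Querying a different master than the one being restarted means the poll keeps getting answers while the restarted node is down.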

At this point, once no server shows "mid" in the node.role column any more, the role separation is complete.
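The final check can also be scripted. Below is a minimal sketch that reads _cat/nodes?h=ip,node.role as input. It prints every node whose role string still combines two or more of m, i and d, and exits non-zero if it finds one. find_mixed_roles is a hypothetical name.

```bash
# Hypothetical helper: list nodes whose role string still combines m, i and/or d.
# stdin: output of `_cat/nodes?h=ip,node.role`; exit status 1 if any such node exists.
find_mixed_roles() {
  awk '{
    n = 0
    if ($2 ~ /m/) n++
    if ($2 ~ /i/) n++
    if ($2 ~ /d/) n++
    if (n > 1) { print; bad = 1 }   # more than one of the three roles on this node
  } END { exit bad ? 1 : 0 }'
}

# Example (assumes 192.168.1.31 is reachable):
# curl -s "http://192.168.1.31:9200/_cat/nodes?h=ip,node.role" | find_mixed_roles && echo "roles fully separated"
```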




Original article: https://my.oschina.net/fzxiaomange/blog/3129870